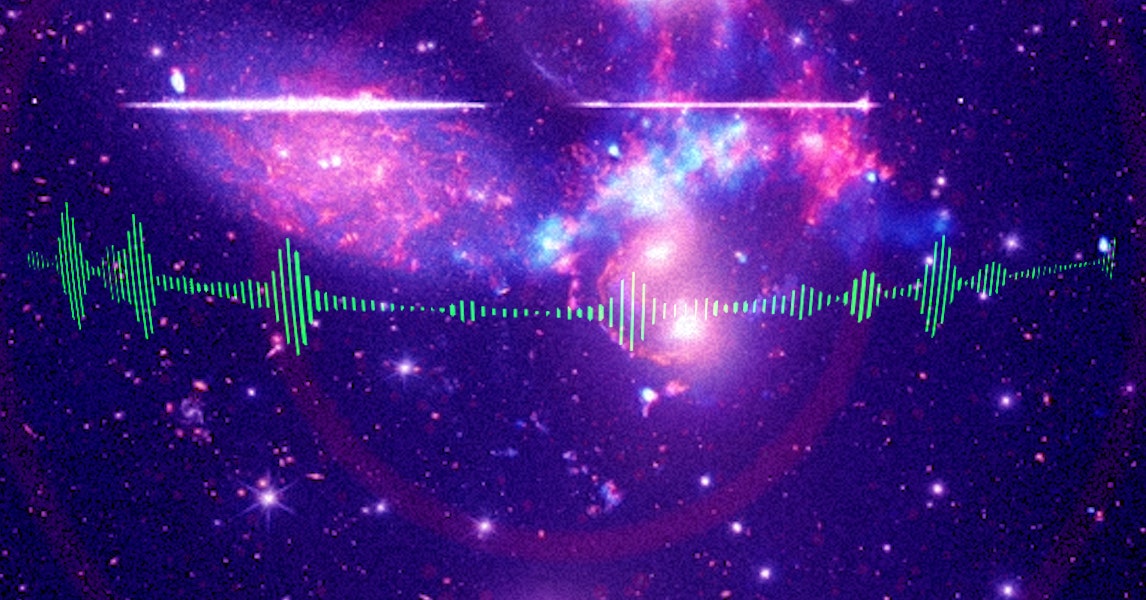

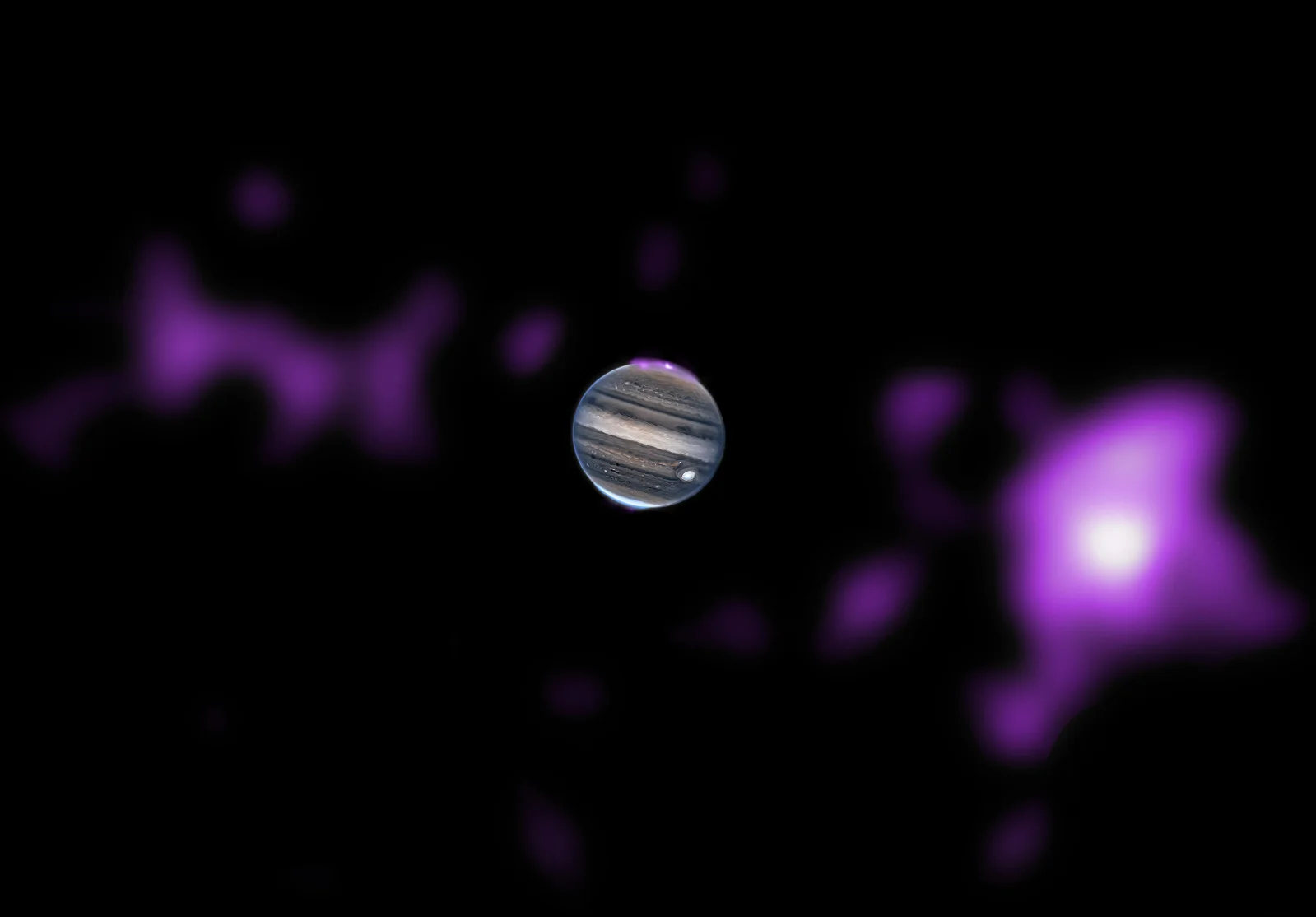

Chandra Turns Planetary Parade Into a Cosmic Soundtrack

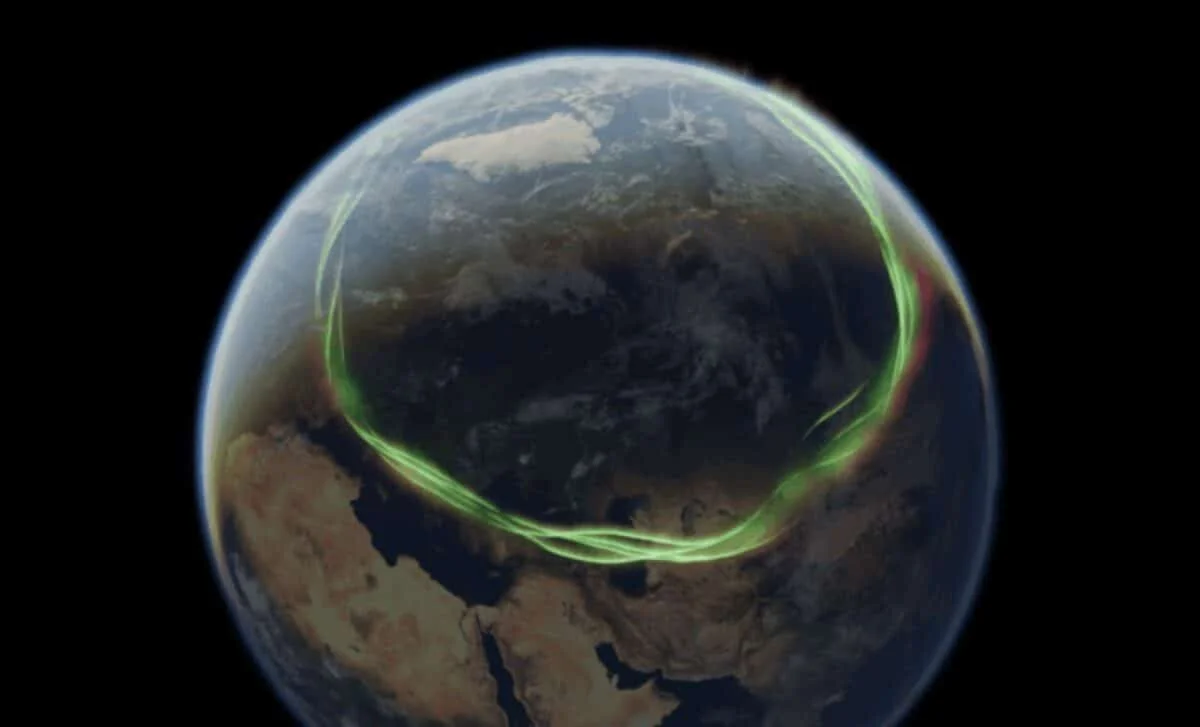

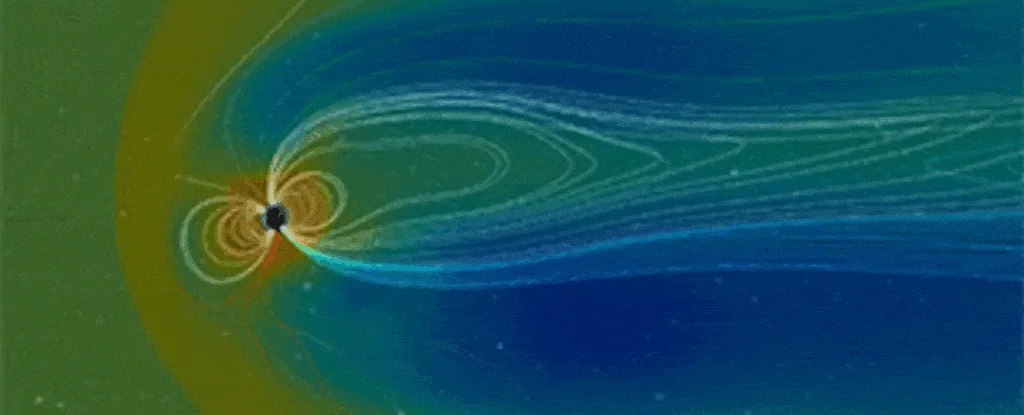

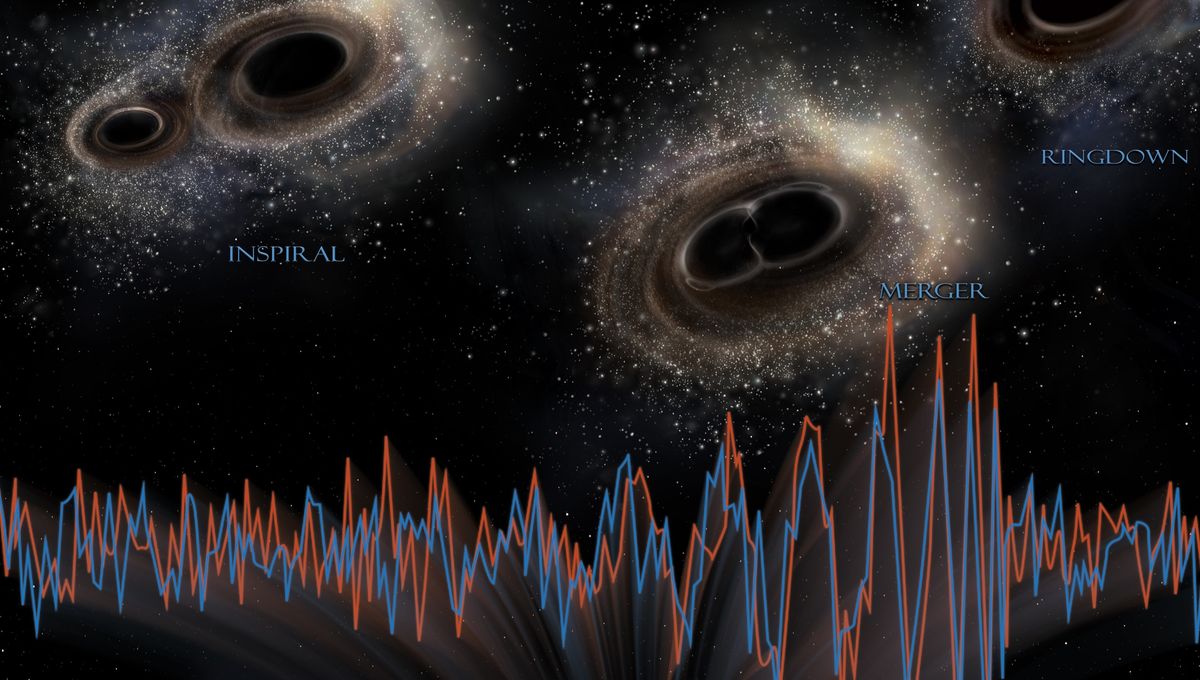

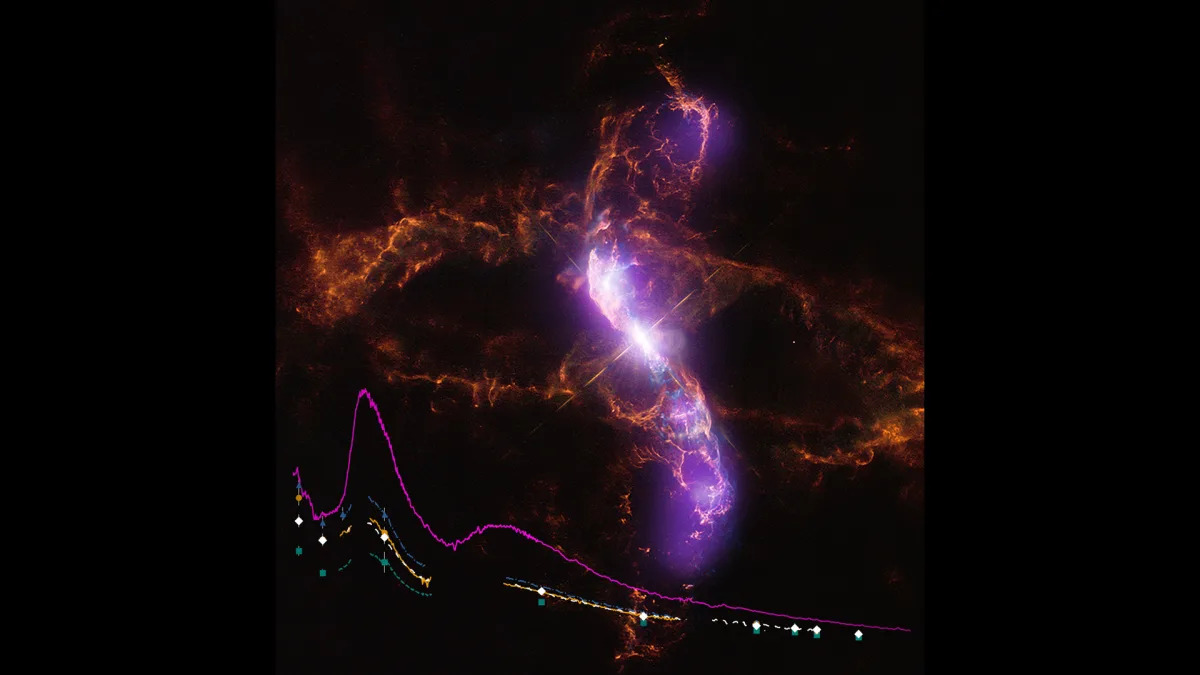

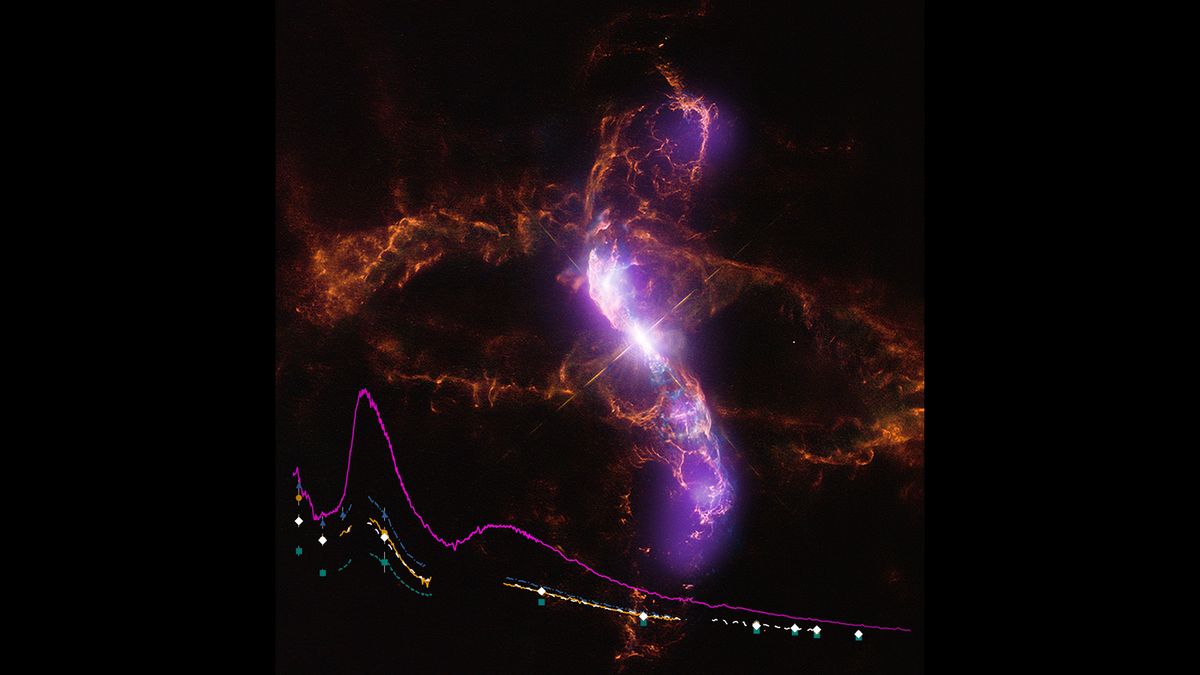

NASA's Chandra X-ray Observatory has released three new sonifications that translate X-ray data from Jupiter, Saturn, and Uranus into sound, celebrating the planetary parade in February. Drawing on additional data from Hubble, Cassini, and Keck, the pieces use woodwind-, siren-, and cello-inspired sounds, mapping the brightness and position of features in the images to pitch and volume.
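To illustrate how a brightness-and-position mapping can turn an image into sound, here is a minimal sketch of generic parameter-mapping sonification. This is not Chandra's actual pipeline. The specific mapping is an assumption: the image is scanned left to right, a pixel's vertical position sets the pitch (higher in the image means higher pitch), and its brightness (0.0 to 1.0) sets the volume. Plain sine tones stand in for the woodwind, siren, and cello voices. The names `sonify`, `write_wav`, and `SAMPLE_RATE`, along with the 220 to 880 Hz range, are hypothetical choices made for illustration.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (hypothetical choice)


def sonify(image, seconds_per_column=0.1, f_low=220.0, f_high=880.0):
    """Scan a 2-D brightness grid left to right and return audio samples.

    Assumed mapping (illustrative only): each row is a fixed pitch, with
    rows nearer the top of the image sounding higher; each pixel's
    brightness (0.0-1.0) sets the volume of that row's sine tone.
    """
    n_rows = len(image)
    n_per_col = int(SAMPLE_RATE * seconds_per_column)
    # Log-spaced pitches: top row (index 0) gets f_high, bottom row f_low.
    freqs = [
        f_high * (f_low / f_high) ** (r / max(n_rows - 1, 1))
        for r in range(n_rows)
    ]
    samples = []
    for col in range(len(image[0])):
        for i in range(n_per_col):
            t = (col * n_per_col + i) / SAMPLE_RATE
            # Mix one tone per row, each scaled by that pixel's brightness.
            value = sum(
                image[r][col] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(n_rows)
            )
            samples.append(value / n_rows)  # keep |sample| <= 1
    return samples


def write_wav(path, samples):
    """Write mono 16-bit PCM samples in [-1, 1] to a WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        frames = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
            for s in samples
        )
        wav.writeframes(frames)
```

For example, `write_wav("toy.wav", sonify([[0.0, 0.9], [0.5, 0.1]]))` produces two 0.1-second slices. A bright spot near the top of the second column sounds as a loud high note, while the dimmer lower row is heard more quietly. A real sonification would also choose instrument timbres and scan paths to suit each image.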