Apple Bets $2B on AI Imaging with Q.ai Acquisition

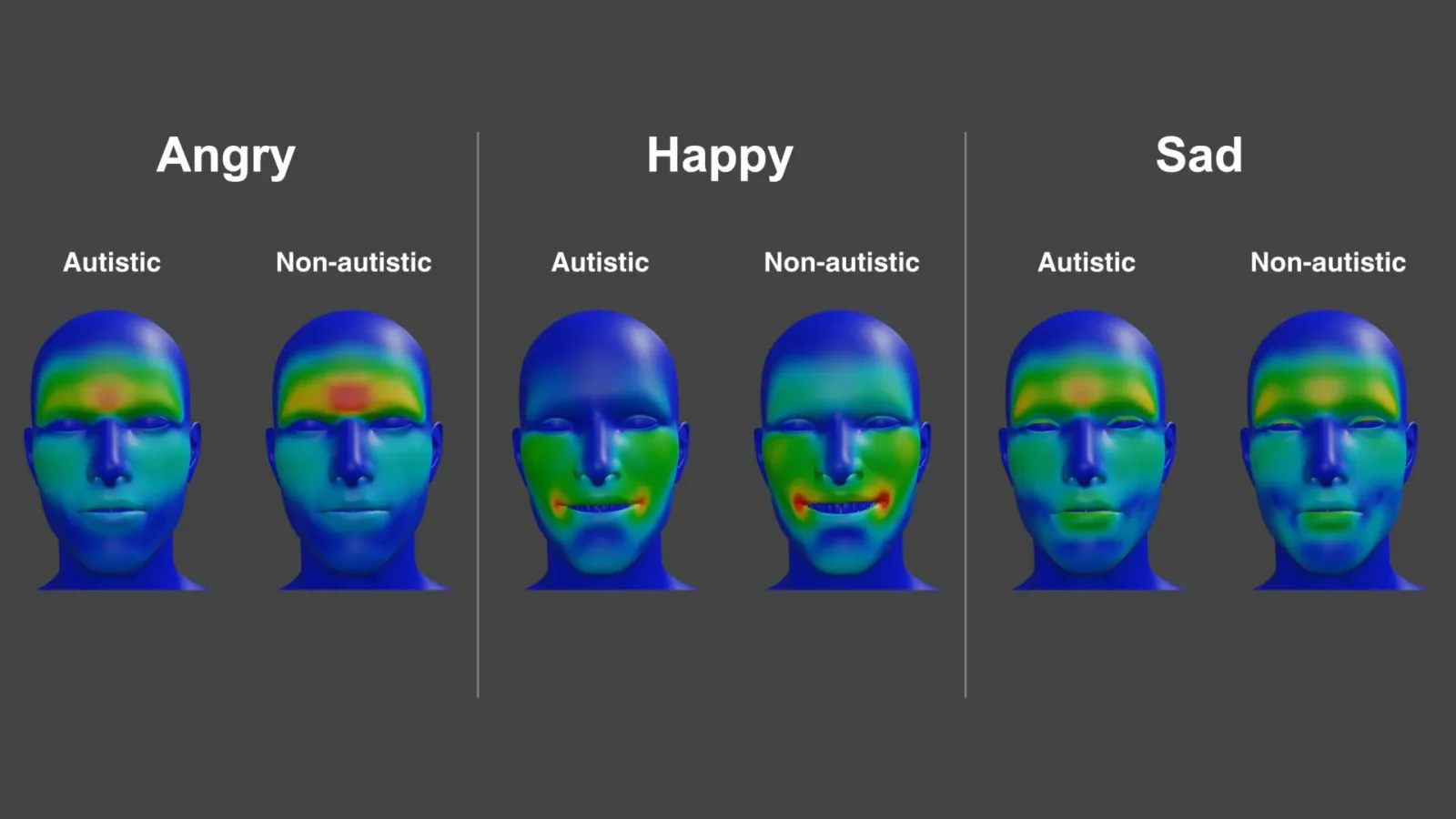

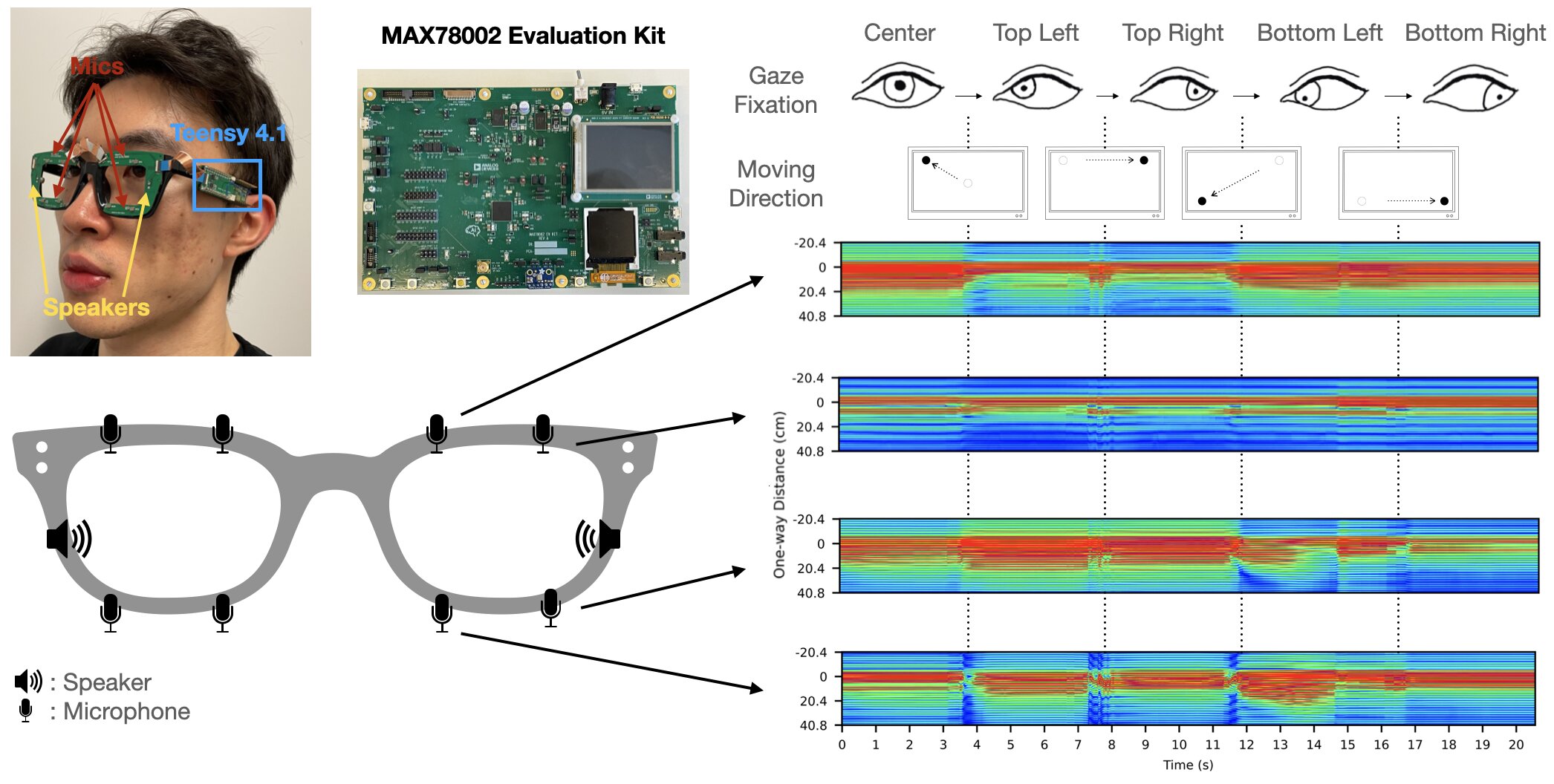

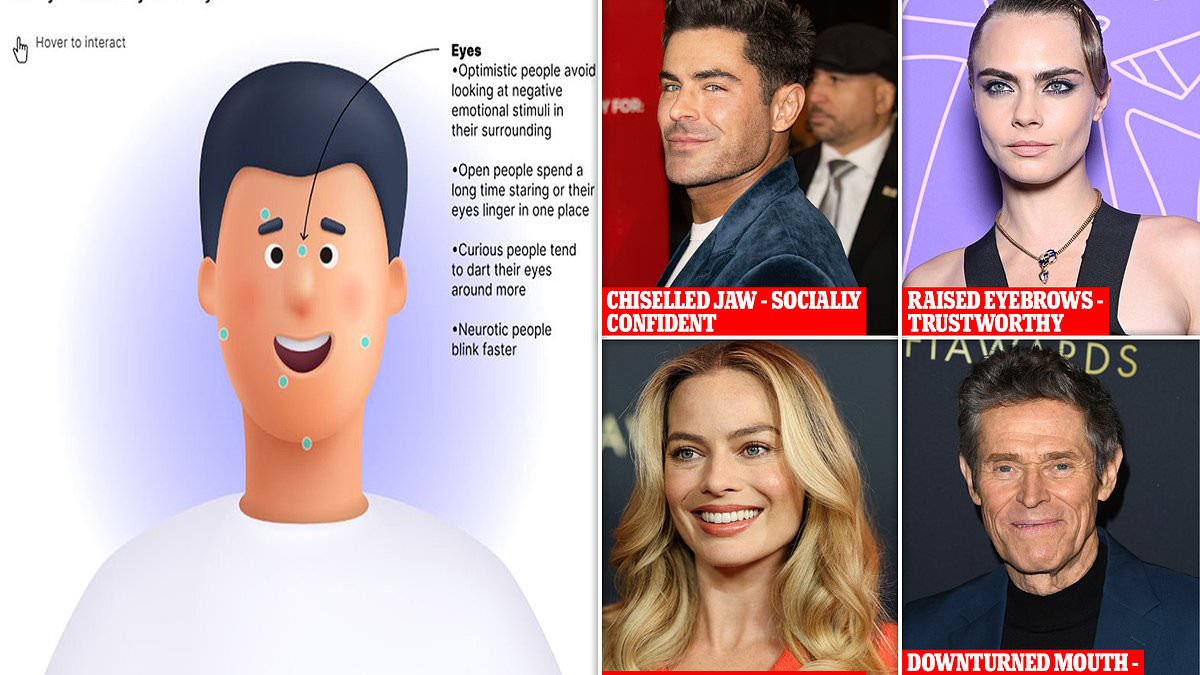

Apple is buying Israeli AI startup Q.ai for about $2 billion, making it the company's second-largest acquisition, behind only Beats. The deal signals a push into imaging and machine learning to enhance Siri. Q.ai's technology reportedly analyzes facial expressions to infer silent speech, a capability Apple aims to integrate into its software stack. Apple's Johny Srouji confirmed the deal. Q.ai cofounder Aviad Maizels previously founded PrimeSense, whose technology underpins Face ID.