Robotics News

The latest robotics stories, summarized by AI

Featured Robotics Stories

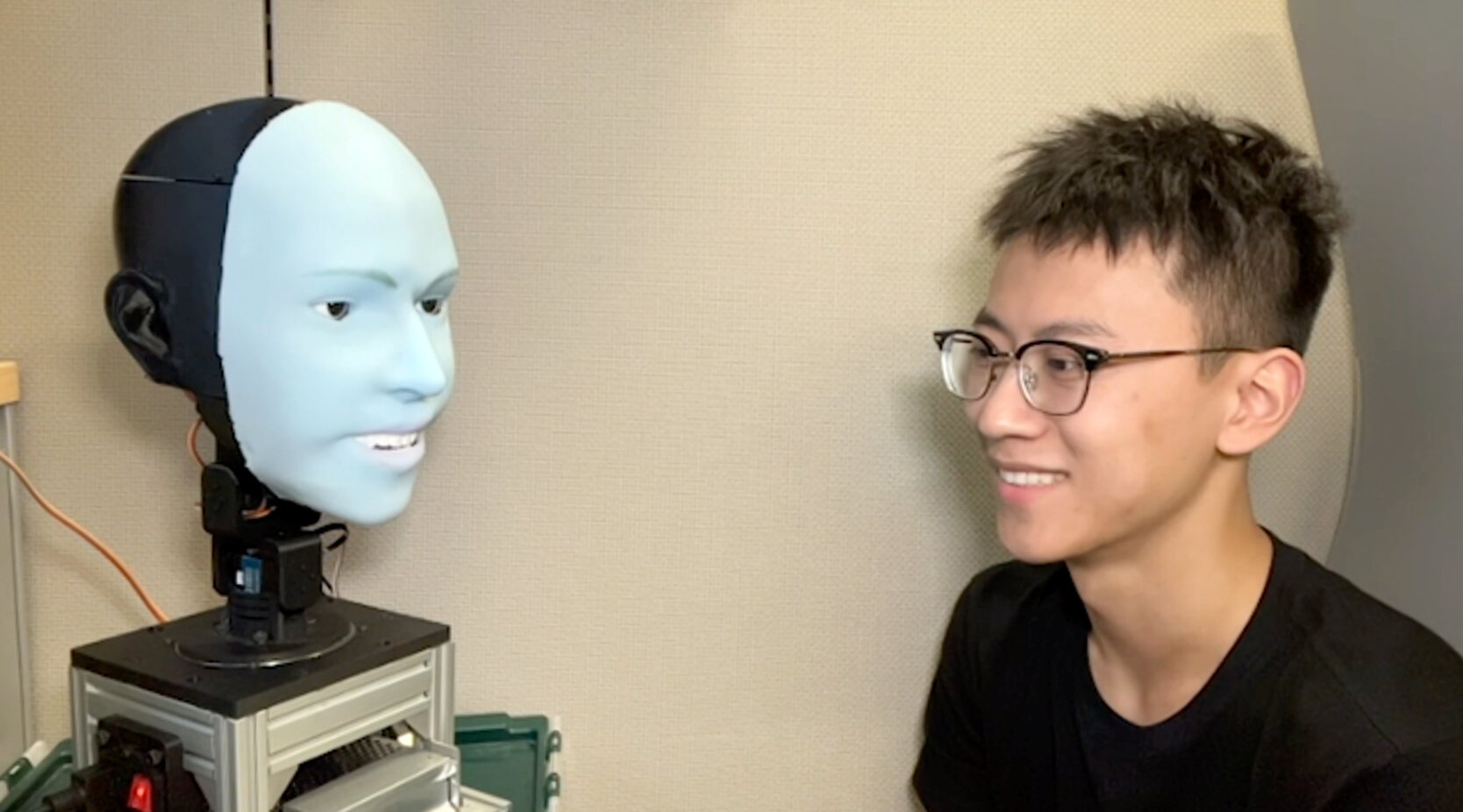

"AI-Powered Robotic Face Anticipates and Mirrors Human Smiles in Real Time"

Researchers at Columbia Engineering's Creative Machines Lab have developed Emo, a robot with a human-like head that can anticipate a person's smile and mirror it as it happens, using AI and 26 actuators to create a broad range of facial expressions. The robot has learned to make eye contact and to predict forthcoming smiles about 840 milliseconds before they occur, with the aim of improving human-robot interaction and building trust. The team is now working on integrating verbal communication into Emo and considering the ethical implications of this technology.

More Top Stories

AI Robot Dominates Humans in Marble Maze Showdown

Engadget•2 years ago

AI Robot Dominates Humans in Physical Skill Games

Engadget•2 years ago

More Robotics Stories

"Insights from Robotics Experts: Q&A with UC Berkeley's Ken Goldberg and Nvidia's Deepu Talla"

Ken Goldberg, a professor at UC Berkeley, discusses the future of robotics, highlighting the role of generative AI in transforming the field. He also explores the potential of multi-modal models and expresses newfound optimism about humanoid and legged robots. Goldberg predicts that manufacturing and warehouses will see increased robot adoption, and he believes that affordable home robots capable of decluttering will be available within the next decade. He emphasizes the importance of robot motion planning and addresses the issue of robot singularities, configurations in which a robot arm loses the ability to move in certain directions, which disrupt robot operations. Goldberg co-founded Jacobi Robotics to develop algorithms that guarantee the avoidance of singularities, enhancing reliability and productivity for all robots.

"Insights from Robotics Experts: Q&A with Nvidia's Deepu Talla and UC Berkeley's Ken Goldberg"

Deepu Talla, Vice President and General Manager of Embedded & Edge Computing at NVIDIA, discusses the role of generative AI in robotics, the challenges of designing humanoid robots, the next major category for robotics, the timeline for true general-purpose robots, and the potential for home robots beyond vacuums. Talla emphasizes that robotics development needs a platform approach in order to scale, and that future success in the industry depends on developing new robot skills and using end-to-end development platforms.

"Exploring the Fascinating World of Octopus-Inspired Robotic Arms"

Researchers have developed a soft robotic tentacle inspired by octopuses, capable of grasping small objects in air or water. The tentacle mimics the bend propagation movement of an octopus arm and can be operated remotely using a glove that fits over one finger. It consists of five segments made of soft silicone embedded with metal wires, forming an electronic network that mimics the nervous system of an octopus arm. The tentacle can stretch to 1.5 times its original length and incorporates sensory feedback, allowing the operator to feel the suckers at the tip engage. The technology has potential applications in marine research, biomedical technology, and artificial organs.

Rescue Revolution: Shape-shifting Spider Robot Takes on Tight Spots

Engineers at the University of Colorado Boulder have developed a spider-inspired shape-changing robot, called mCLARI, that can alter its shape to maneuver through narrow spaces without actively controlling the change. The design could have applications in healthcare, space exploration, disaster response, and other fields that require adaptability and versatility.

"Exploring the Future of General Purpose Robots, Generative AI, and Office WiFi with Google DeepMind's Robotics Head"

Google DeepMind's head of robotics, Vincent Vanhoucke, discusses the development of general-purpose robots and the role of generative AI in robotics. DeepMind's Open X-Embodiment dataset is intended for training a generalist model capable of controlling different types of robots and performing complex tasks. Vanhoucke highlights the importance of perception technology and the integration of machine learning and AI in robotics. He also emphasizes the potential of generative AI to bridge the gap between simulation and reality, enabling more precise and efficient robot planning and interactions.

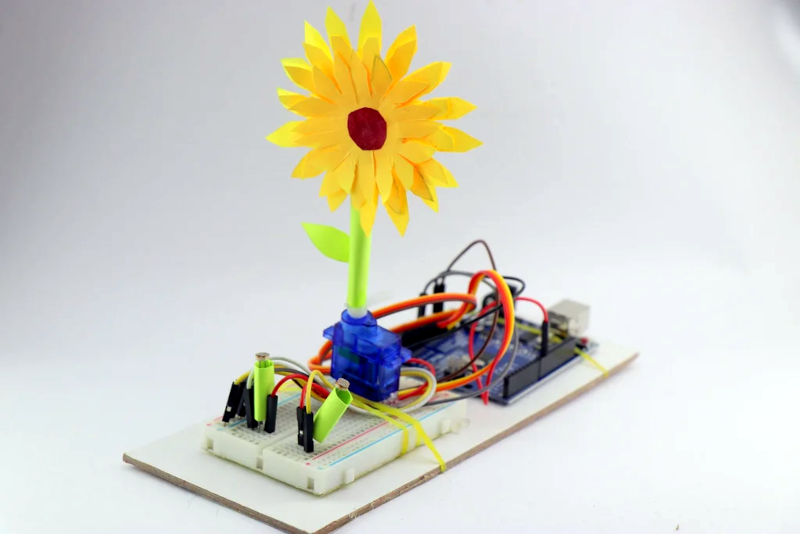

"Sun-Tracking Robot Sunflower Harnesses Solar Power"

A plastic sunflower, equipped with an Arduino, light sensors, and a servo motor, mimics the movement of a real sunflower by tracking the sun. This simple yet whimsical build could make a great school project for engaging students in electronics and programming. Light-dependent resistors provide analog readings, which are compared to determine which sensor is receiving the most light. While alternative circuitry could achieve the same effect, the versatility and affordability of microcontrollers make them a popular choice.
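
The comparison step can be sketched in a few lines. This is only an illustration of the general idea, not the project's actual firmware: it is written in Python rather than Arduino C++ so it runs anywhere, and the function names, the two-sensor layout, the 0 to 1023 reading range, the deadband, and the step size are all assumptions made for the example. It also assumes the sensors are wired so that a higher reading means more light.

```python
def brightest_sensor(readings):
    """Return the index of the sensor with the highest analog reading.

    Assumes a higher reading means more light, which depends on how
    the light-dependent resistor is wired into its voltage divider.
    """
    return max(range(len(readings)), key=lambda i: readings[i])


def next_servo_angle(angle, left, right, deadband=20, step=2, lo=0, hi=180):
    """Nudge the servo toward whichever of two sensors sees more light.

    angle       current servo angle in degrees
    left/right  analog readings (hypothetical 10-bit range, 0..1023)
    deadband    ignore differences this small to avoid jitter
    step        degrees to move per update
    Assumption: increasing the angle turns the flower toward the left sensor.
    """
    diff = left - right
    if abs(diff) <= deadband:
        return angle  # light is roughly balanced, so hold position
    angle += step if diff > 0 else -step
    return max(lo, min(hi, angle))  # stay within the servo's travel


if __name__ == "__main__":
    # Simulate a few updates with made-up readings.
    angle = 90
    for left, right in [(700, 400), (650, 450), (520, 510)]:
        angle = next_servo_angle(angle, left, right)
        print(angle)
```

On real hardware the readings would come from the microcontroller's analog inputs and the returned angle would be written to the servo on each pass through the main loop. The deadband is a common way to keep a tracker from twitching when the two sensors see nearly the same light level.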

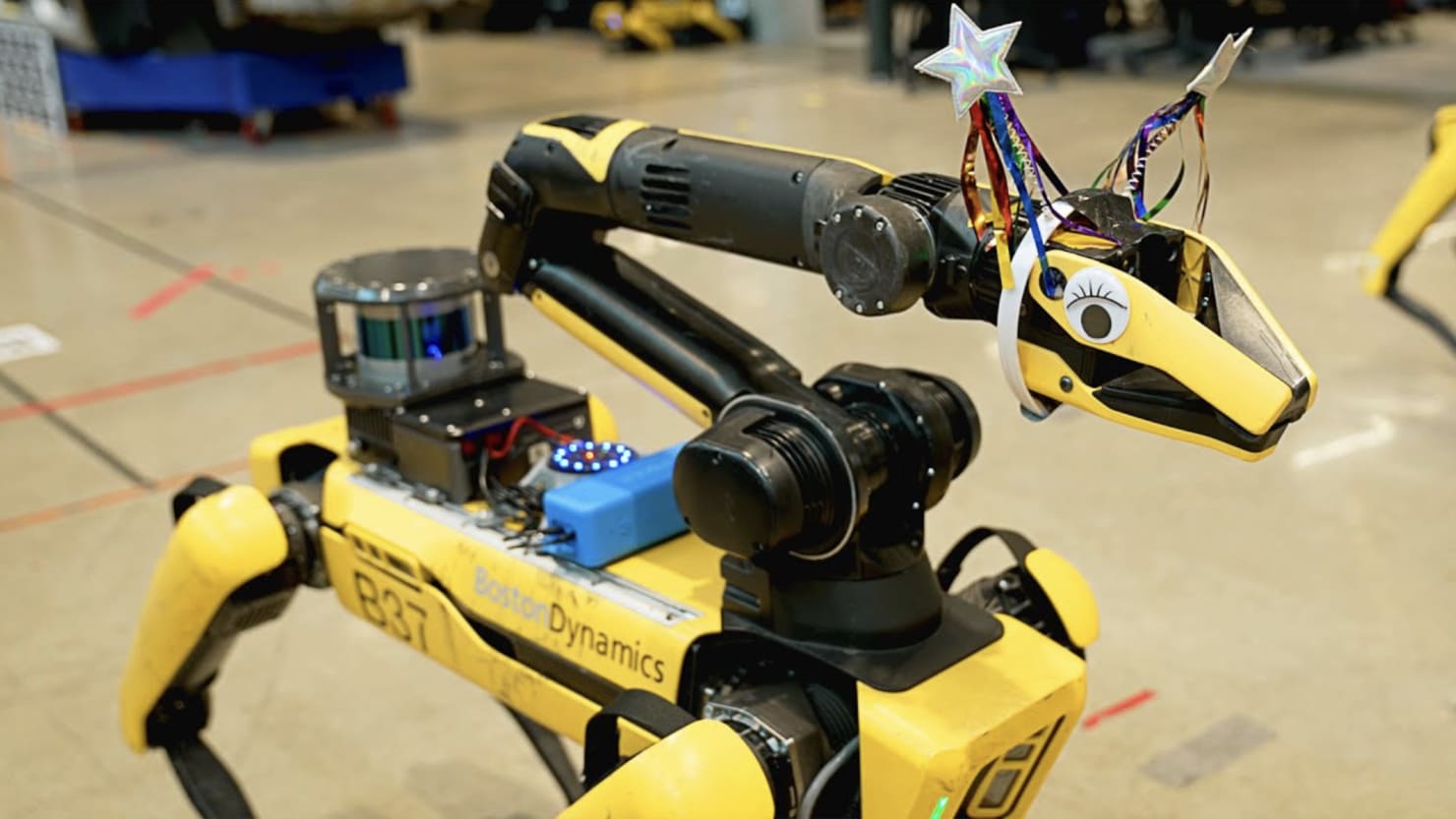

Boston Dynamics' Robot Dog Communicates with ChatGPT

Boston Dynamics has showcased a robotic dog infused with OpenAI's ChatGPT, allowing it to speak in various voices and accents. The robot, created through a hackathon, combines AI technologies such as voice recognition, image processing, and ChatGPT with Boston Dynamics' famous "Spot" robot dog. While the robot cannot truly understand what it is saying or what others are saying to it, it can respond to prompts based on its training on a massive corpus of data. This development offers a glimpse into a future where talking robots like Spot could become more commonplace, potentially serving as tour guides or companions.

Boston Dynamics' Robot Dog Spot Becomes a Charming British Tour Guide with ChatGPT

Boston Dynamics' Spot robot dog has been integrated with ChatGPT, allowing it to speak with a vaguely British accent. The robot can act as a tour guide, providing information about the company's headquarters and past robot models. The integration demonstrates the potential of generative AI to enable robots to respond directly to human input. However, while the language model can produce natural-sounding language, it does not truly understand the context of its responses.

"ChatGPT Gives Boston Dynamics' Robot Dog a Voice as a Tour Guide"

Boston Dynamics has transformed its robot dog, Spot, into a talking tour guide using OpenAI's ChatGPT API. Equipped with a top hat, mustache, and googly eyes, Spot takes staff members on a tour of the company's facilities, answering questions and mimicking speech. The bot combines a brief script with imagery from its cameras to generate responses, using Visual Question Answering models to caption images. Spot assumes various personalities, including a 1920s archaeologist and a sarcastic time traveler. While the video showcases Spot's conversational abilities, concerns about its potential surveillance capabilities remain.

AI's Lightning-Fast Robot Design Skills Unveiled

Scientists at Northwestern University used an AI-powered design algorithm to create a walking robot in just 26 seconds. The robot, although not the most futuristic-looking, features internal muscles that inflate with air to propel it forward. The algorithm went through 10 attempts before producing the final design, which showcased the AI's ability to find possibilities that humans may not have considered. The researchers see potential applications for practical robots and nanorobots in various fields.