A Universal Law Says Evolution Builds Function by Increasing Information

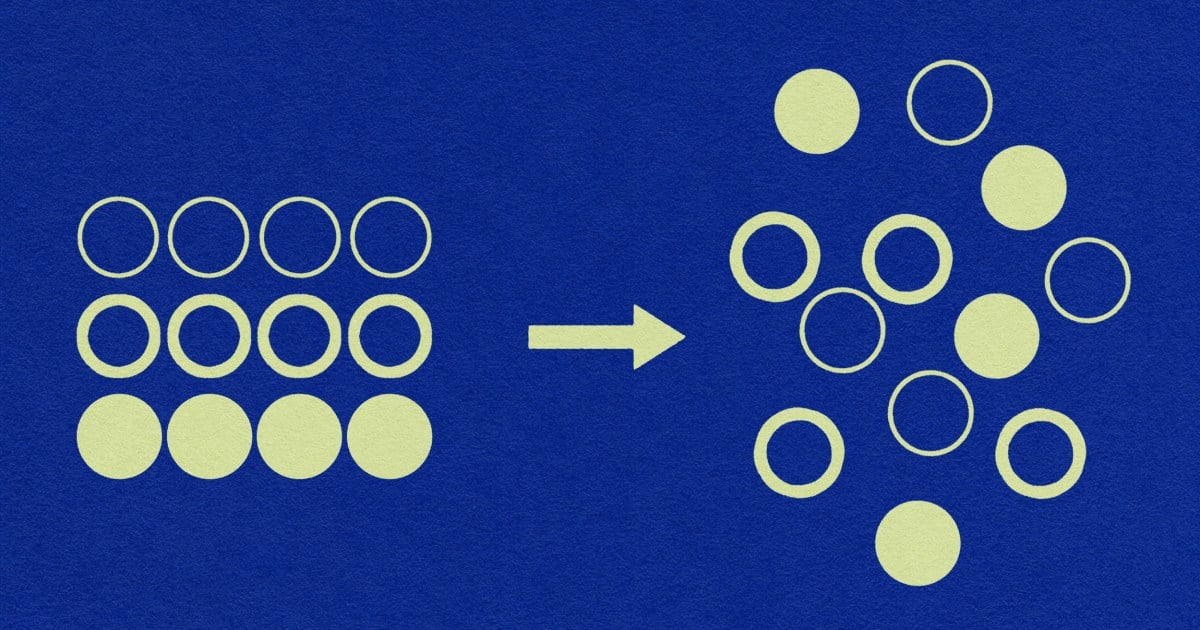

Robert Hazen and Michael Wong argue that evolution is a universal process, not one limited to biology. They propose a new natural law, the law of increasing functional information, to explain how complex systems from minerals to AI become more patterned over time as they generate many configurations and those that function are selected. They describe a “second arrow” of time that points toward greater order despite entropy, and they outline three sources of selection: static persistence, dynamic persistence, and novelty generation. To quantify the idea they introduce functional information, a measure based on Szostak’s work. The concept has broad applications, from cancer to ecology and AI, and it invites reflection on meaning and purpose within science. It also highlights humanity’s ability to accelerate evolution by imagining and testing countless configurations.
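The summary names functional information without defining it. As the measure is usually formulated in the literature following Szostak, the functional information of a system at a given performance threshold is the negative base-2 logarithm of the fraction of all possible configurations that perform at least that well. The sketch below illustrates that formula only; the function name `functional_information`, the score list, and the thresholds are made up for illustration and do not come from the article.

```python
import math
from typing import Sequence


def functional_information(scores: Sequence[float], threshold: float) -> float:
    """Bits of functional information at a given performance threshold.

    Computes log2(N / M), which equals -log2(M / N), where N is the number
    of configurations and M is the number whose score meets the threshold.
    Assumes `scores` enumerates every possible configuration (hypothetical).
    """
    if not scores:
        raise ValueError("scores must be non-empty")
    hits = sum(1 for s in scores if s >= threshold)
    if hits == 0:
        # No configuration achieves the function: information is unbounded.
        return math.inf
    return math.log2(len(scores) / hits)


# Hypothetical performance scores for 8 configurations (illustrative data).
scores = [0.1, 0.2, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

print(functional_information(scores, 0.0))  # all 8 qualify  -> 0.0
print(functional_information(scores, 0.6))  # 4 of 8 qualify -> 1.0
print(functional_information(scores, 0.9))  # 1 of 8 qualifies -> 3.0
```

The rarer the configurations that achieve a function, the more bits it takes to specify one of them, which is the sense in which selection for function corresponds to increasing information.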