Thermodynamic Brainpower: Tiny-Energy Image Generation with Noise-Driven Computing

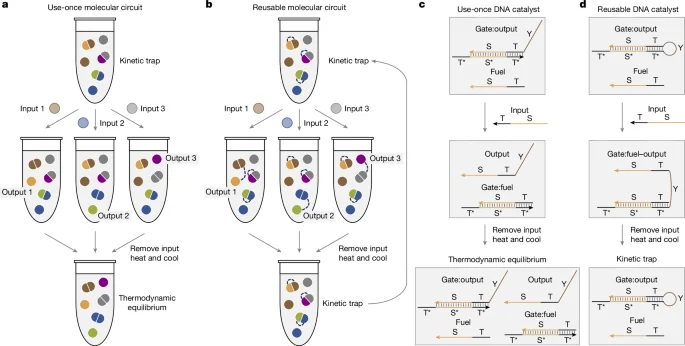

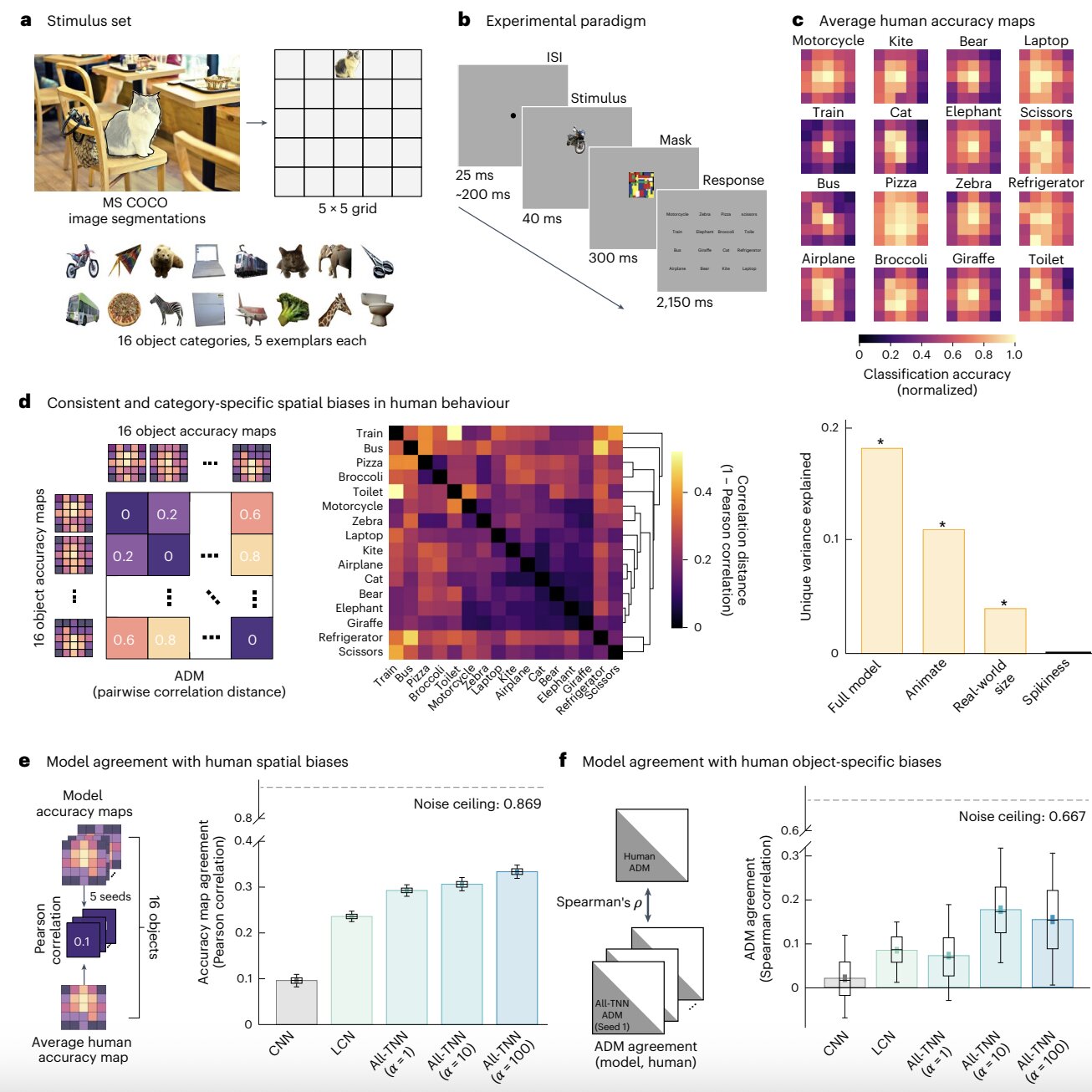

Scientists report a generative thermodynamic computer that harnesses thermal noise to produce images from random data, performing a task usually handled by AI neural networks while using orders of magnitude less energy. The approach relies on probabilistic computing: the system's diffusion-like dynamics are modeled with Langevin-based calculations, and the coupling strengths in the network are tuned so that it retrieves or creates images starting from noise. The result is a physics-based path to energy-efficient, AI-like tasks and may offer new insights into learning.
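
To make the idea of noise-driven retrieval concrete, here is a minimal toy sketch in Python. It is not the researchers' system or training procedure. It assumes a small network of continuous units with an illustrative energy U(x) = Σ x_i⁴/4 − ½ Σ J_ij x_i x_j, sets the couplings with a simple Hebbian rule (a stand-in for whatever tuning the real device uses), and integrates overdamped Langevin dynamics with the Euler–Maruyama method. The function names `hebbian_couplings` and `langevin_retrieve`, the 64-unit "image", and all parameter values are hypothetical choices made for illustration.

```python
import numpy as np

def hebbian_couplings(pattern):
    """Couplings that store one +/-1 pattern (Hebbian rule, an assumption)."""
    n = pattern.size
    J = np.outer(pattern, pattern) / n
    np.fill_diagonal(J, 0.0)  # no self-coupling
    return J

def langevin_retrieve(J, x0, kT=0.01, dt=0.01, steps=4000, rng=None):
    """Euler-Maruyama integration of overdamped Langevin dynamics.

    Assumed toy energy: U(x) = sum(x**4) / 4 - 0.5 * x @ J @ x
    Update rule:        x += -grad(U) * dt + sqrt(2 * kT * dt) * noise
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(x0, dtype=float)
    noise_scale = np.sqrt(2.0 * kT * dt)
    for _ in range(steps):
        grad = x**3 - J @ x  # gradient of the assumed energy
        x += -grad * dt + noise_scale * rng.standard_normal(x.size)
    return x

rng = np.random.default_rng(0)

# A random 8x8 black-and-white "image", flattened to 64 values of +/-1.
pattern = np.where(rng.random(64) < 0.5, -1.0, 1.0)
J = hebbian_couplings(pattern)

# Start from pure noise and let the thermal dynamics relax the network.
x0 = rng.standard_normal(64)
x_final = langevin_retrieve(J, x0, rng=rng)

# Fraction-style overlap with the stored image; the absolute value is taken
# because the pattern and its negative are equally stable in this toy model.
overlap = abs(np.mean(np.sign(x_final) * pattern))
print(f"overlap with stored pattern: {overlap:.2f}")
```

In this toy model the couplings alone determine which image the noisy dynamics settle into, which is the sense in which tuning coupling strengths lets a system retrieve an image from noise. A real generative device would need couplings learned from many images rather than a single stored pattern.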