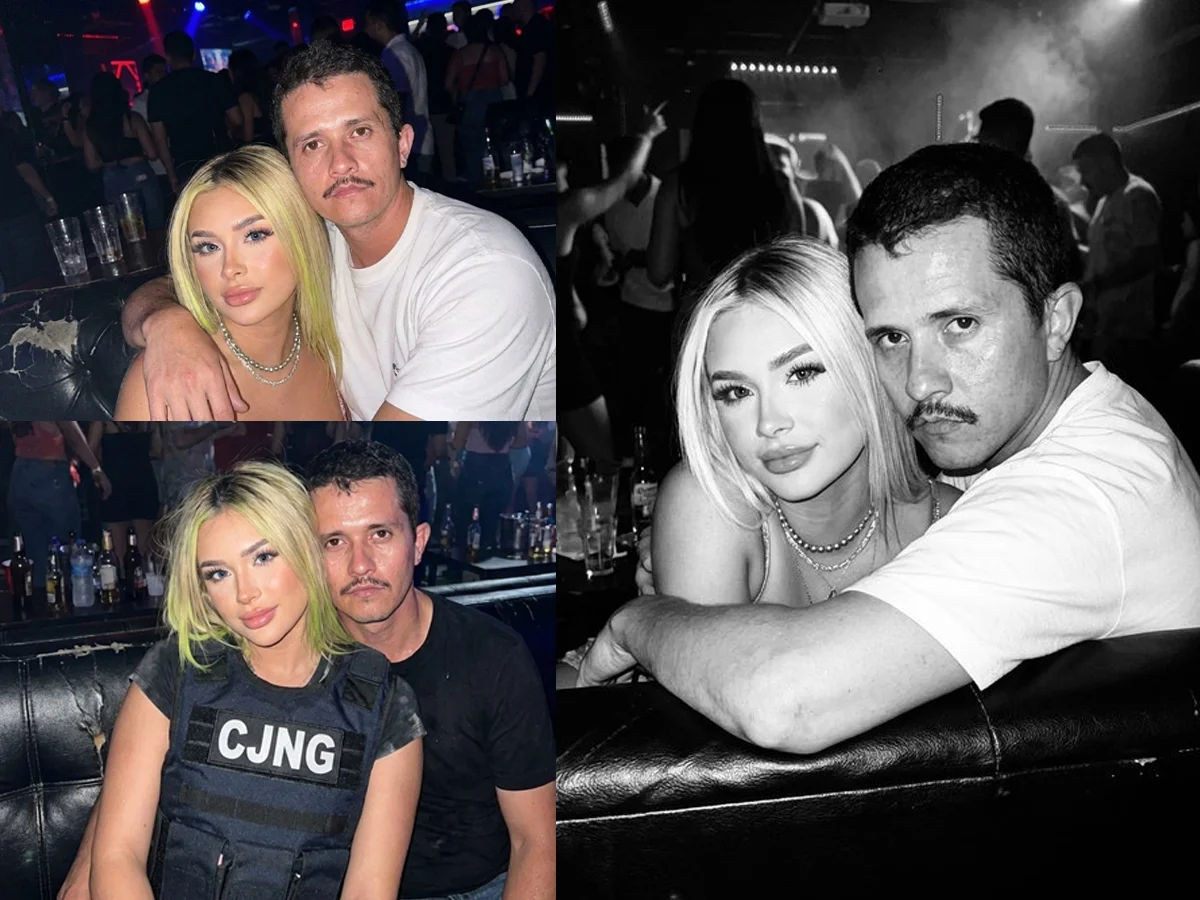

Rumors swirled around influencer Maria Julissa in El Mencho’s death, but no evidence ties her to the raid

Online chatter linked influencer Maria Julissa to the death of CJNG leader El Mencho after a February 22 raid in Tapalpa and the circulation of a photo claimed to be their last together. Authorities have not confirmed any involvement by Julissa, and she has publicly denied the claims. The operation itself focused on the cartel amid continuing violence.