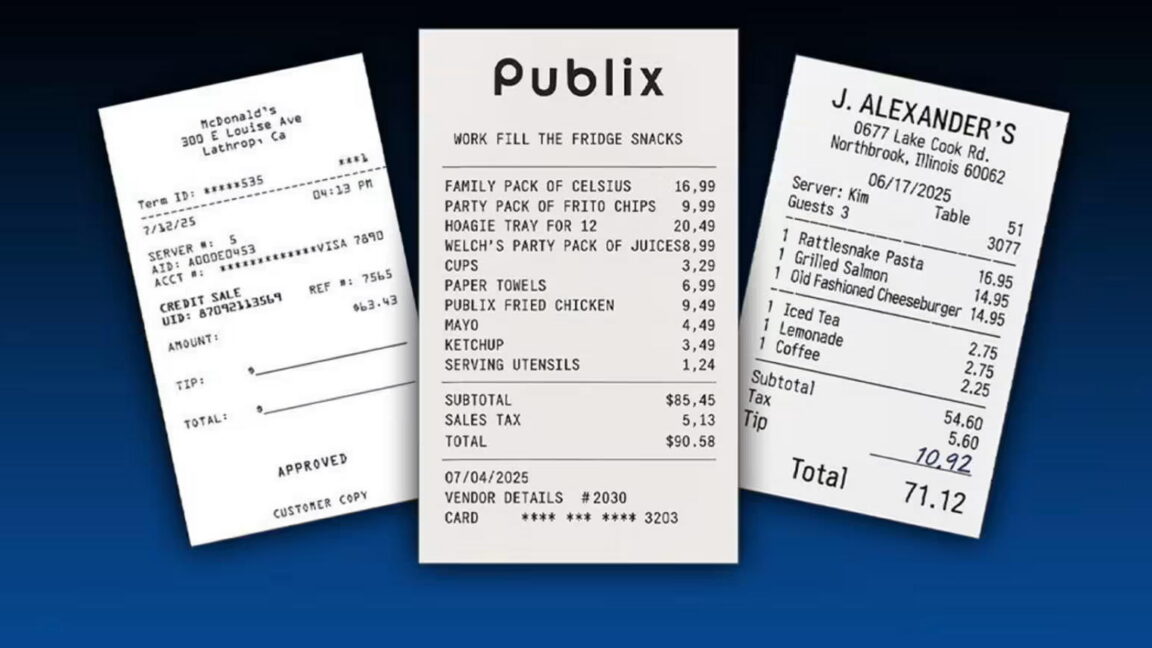

AI-Generated Fake Receipts Heighten Expense Fraud Risks

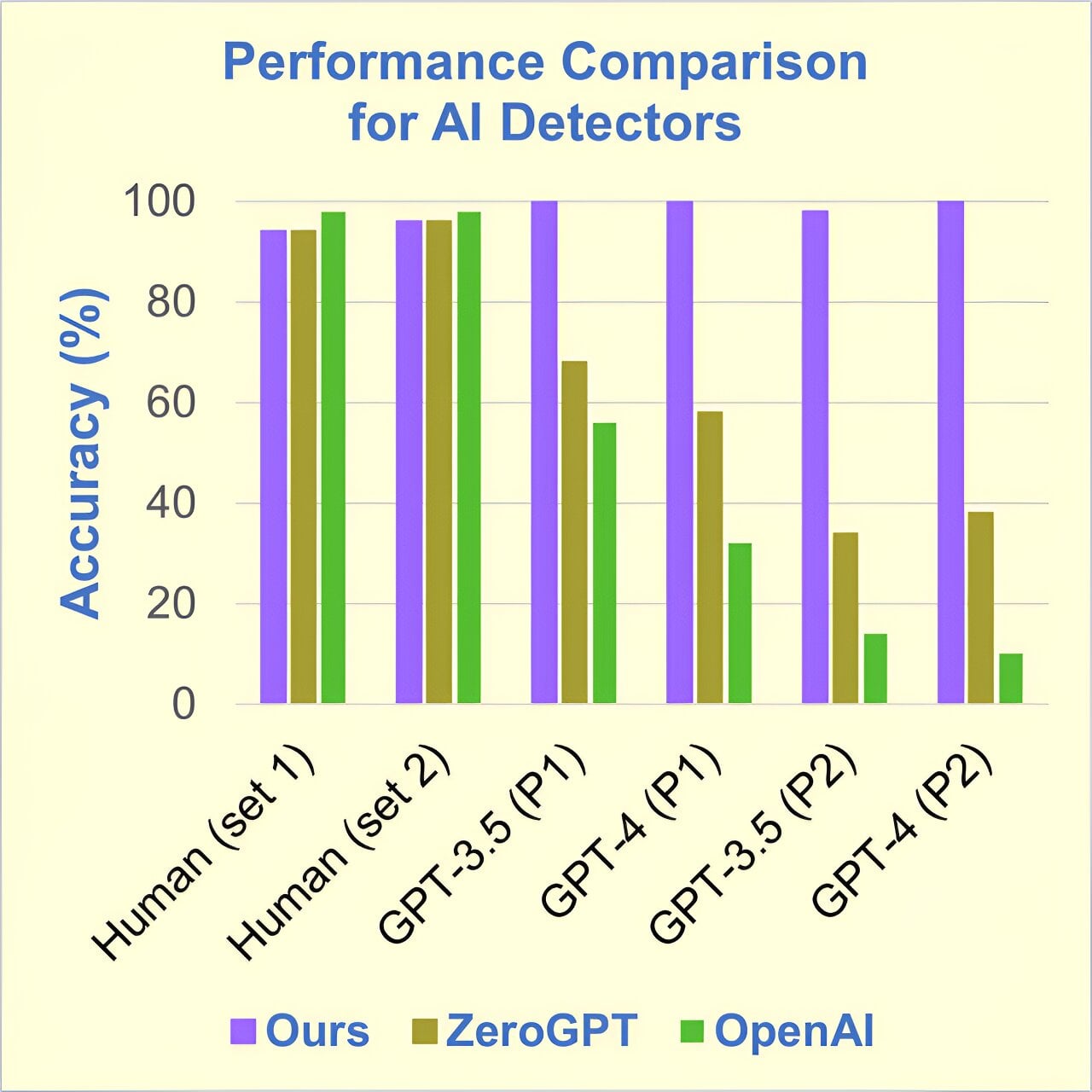

As AI image generators become more convincing and more accessible, fraudsters are increasingly using them to fabricate receipts for expense fraud. In response, companies are adopting AI-based detection tools that analyze image metadata and contextual data to identify fakes.