Europe launches replication drive to test carbon quantum dot biosensors

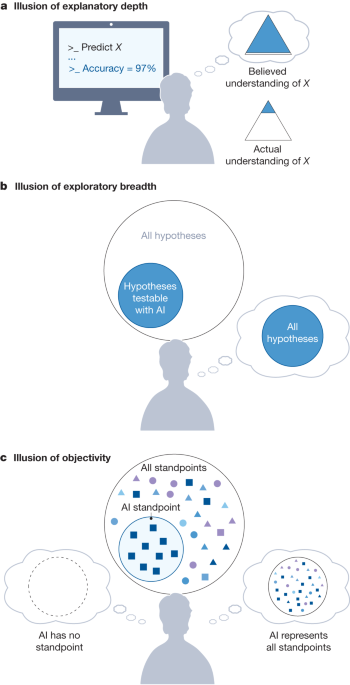

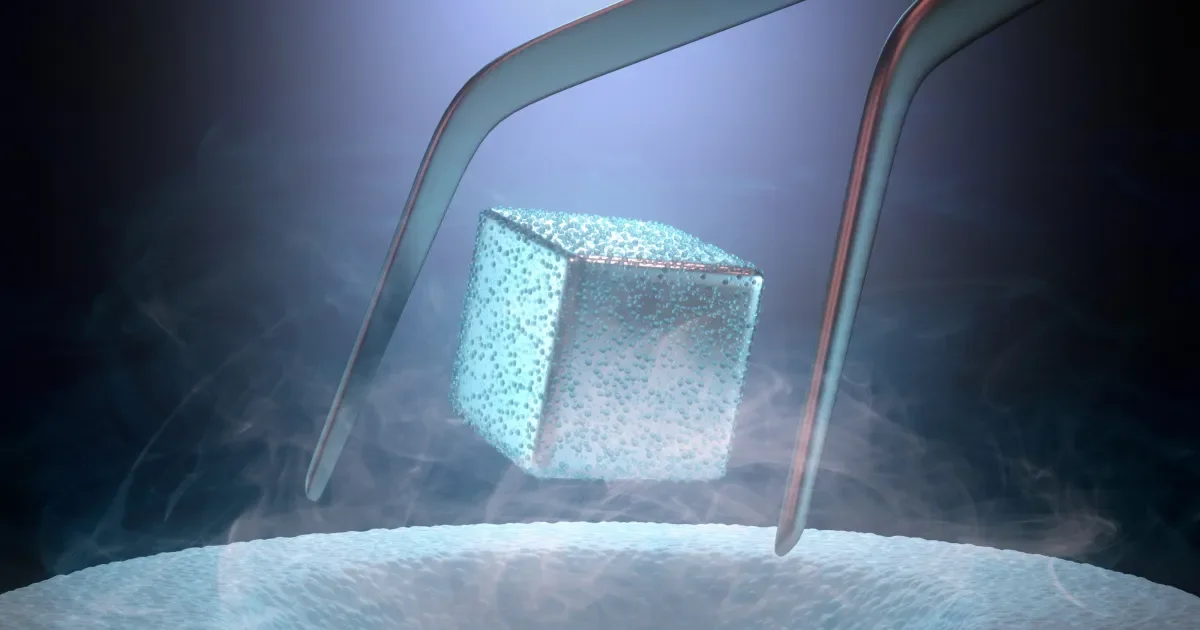

The NanoBubbles project, backed by the European Research Council (ERC), is funding nanoscientists to replicate a 2012 study reporting that carbon quantum dots can sense copper ions inside living cells. It is the first large-scale replication effort in the physical sciences aimed at the reproducibility crisis. Initial attempts failed to reproduce the reported fluorescence change, illustrating how small impurities, incomplete protocols, and cross-lab variation can affect results. The effort is intended to promote self-correction in science.