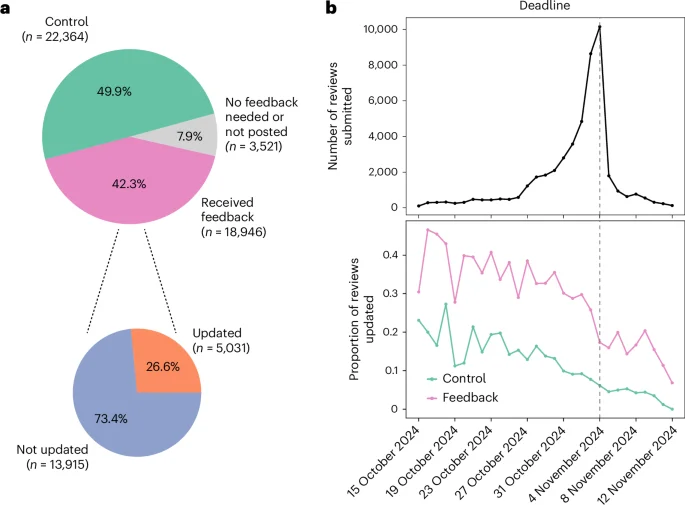

AI-Driven Feedback Elevates Peer Review Quality in a Large-Scale Study

A study published in Nature Machine Intelligence reports a large-scale randomized experiment showing that automated, LLM-generated feedback from the Review Feedback Agent improves peer review quality and engagement. At ICLR 2025, more than 20,000 reviews were analyzed; 27% of reviewers who received AI feedback updated their reviews, incorporating more than 12,000 suggested edits. Blind evaluations found the revised reviews more informative. The intervention also increased review length (about 80 additional words among reviewers who updated) and led to longer author rebuttals and reviewer replies. The study suggests that carefully designed LLM feedback can make reviews more specific and actionable while boosting reviewer–author engagement. The data and open-source code are available.