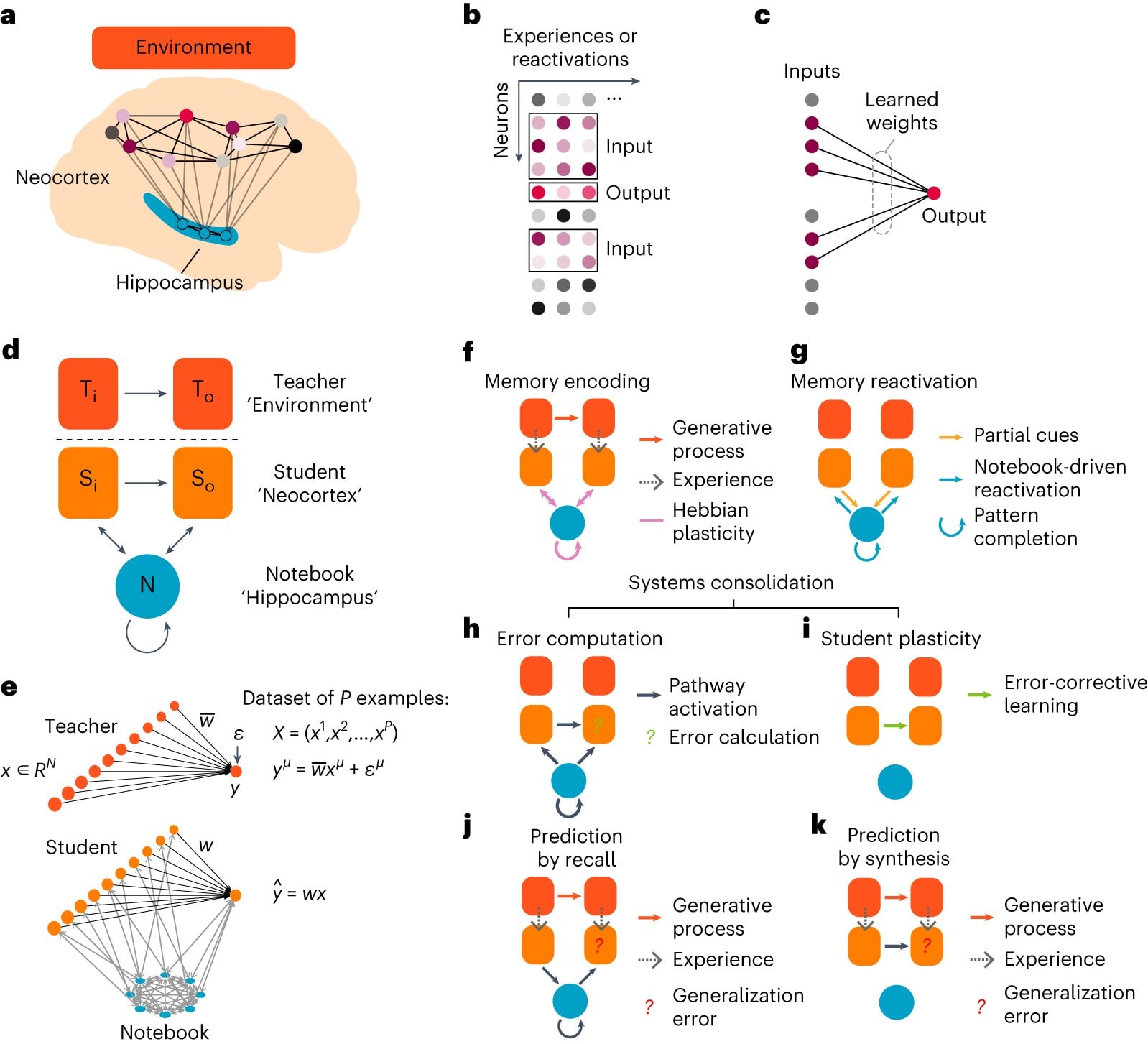

Advancing Brain Imaging Models for Clinical Applications

Yale researchers have demonstrated that predictive models linking brain activity to behavior can generalize across diverse datasets, a property that is crucial for clinical use. After training models on varied brain imaging datasets, they found that the models still performed accurately when tested on separate datasets with different demographic and regional characteristics. The finding underscores the importance of developing neuroimaging models that work for diverse populations, including underserved rural communities, so that access to mental health care is equitable.
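The idea of training on some datasets and testing on a different one can be illustrated with a minimal sketch. It is not the researchers' method. It assumes synthetic data, a single made-up "brain feature", a one-variable least-squares model, and hypothetical dataset names (`site_a`, `site_b`, `site_c`). Each dataset is held out in turn, the model is fit on the others, and the correlation between predicted and observed behavior is reported for the held-out set.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares with one feature; returns (slope, intercept)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def make_synthetic_dataset(rng, n, feature_shift, noise):
    """Hypothetical stand-in for one imaging dataset (purely synthetic).

    feature_shift and noise differ per dataset to mimic differences
    between populations; they are illustrative assumptions only.
    """
    xs = [rng.gauss(feature_shift, 1.0) for _ in range(n)]
    ys = [0.8 * x + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def leave_one_dataset_out(datasets):
    """Train on all datasets but one, then score on the held-out one."""
    scores = {}
    for held_out, (test_x, test_y) in datasets.items():
        train_x, train_y = [], []
        for name, (xs, ys) in datasets.items():
            if name != held_out:
                train_x.extend(xs)
                train_y.extend(ys)
        slope, intercept = fit_line(train_x, train_y)
        predictions = [slope * x + intercept for x in test_x]
        scores[held_out] = pearson_r(predictions, test_y)
    return scores


rng = random.Random(0)  # fixed seed so the sketch is reproducible
datasets = {
    "site_a": make_synthetic_dataset(rng, 200, 0.0, 0.5),
    "site_b": make_synthetic_dataset(rng, 200, 1.0, 0.7),
    "site_c": make_synthetic_dataset(rng, 200, -0.5, 0.6),
}
scores = leave_one_dataset_out(datasets)
for name, r in scores.items():
    print(f"held out {name}: r = {r:.2f}")
```

With only one feature, the correlation depends on little more than the sign of the fitted slope, so this toy case is far easier than real neuroimaging data. A realistic evaluation would use many features and would typically report error metrics alongside correlation.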