Mind-Reading Bionic Arm Blurs the Human-Robot Line

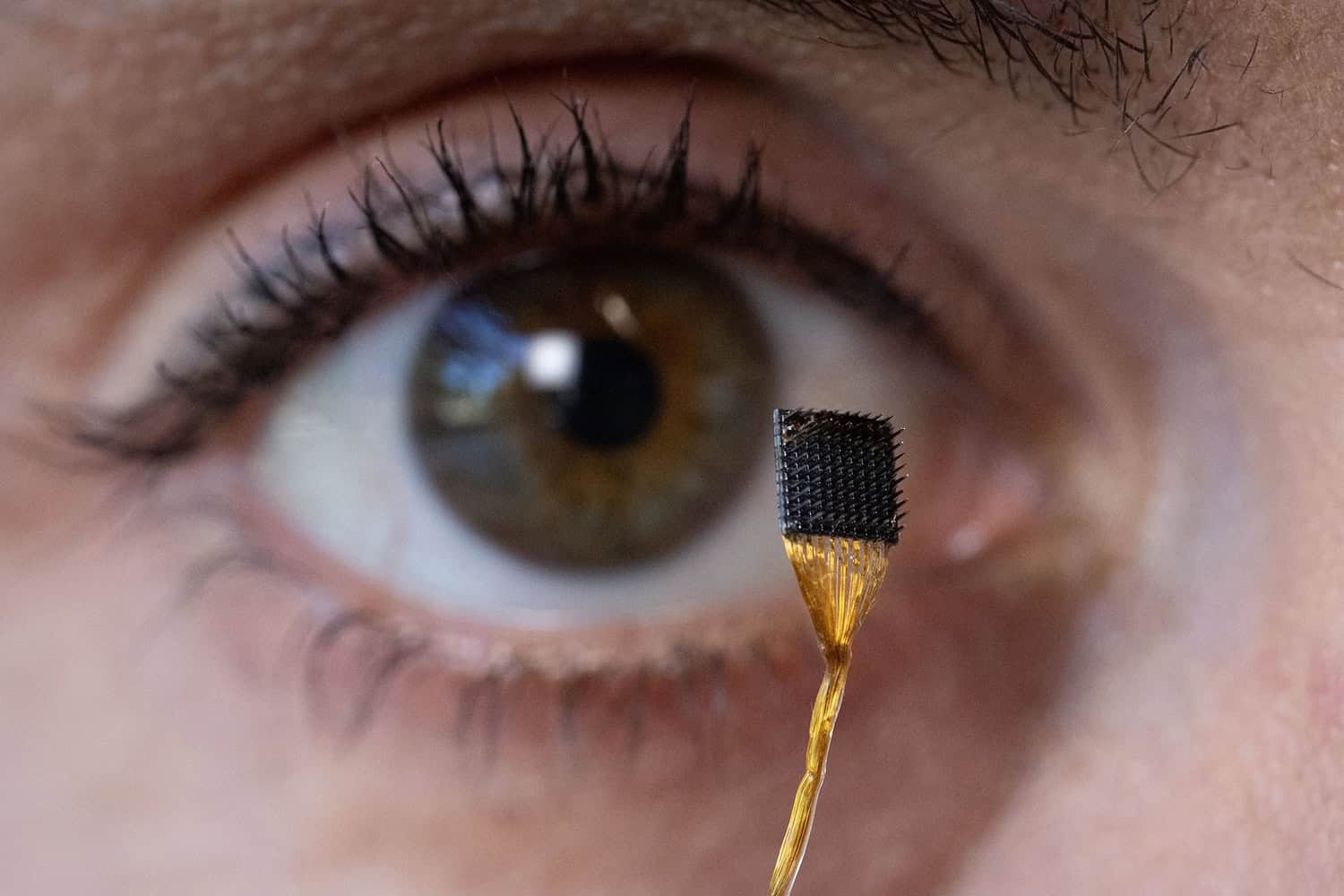

A British woman who lost her right arm and leg after an accident now uses a mind-reading bionic arm that learns her movements through AI-powered sensors, allowing more natural control. She jokes that she is 80% human and 20% robot, and notes that she can charge the arm overnight. Her experience highlights the growing role of AI-driven prosthetics in recovery and daily life.