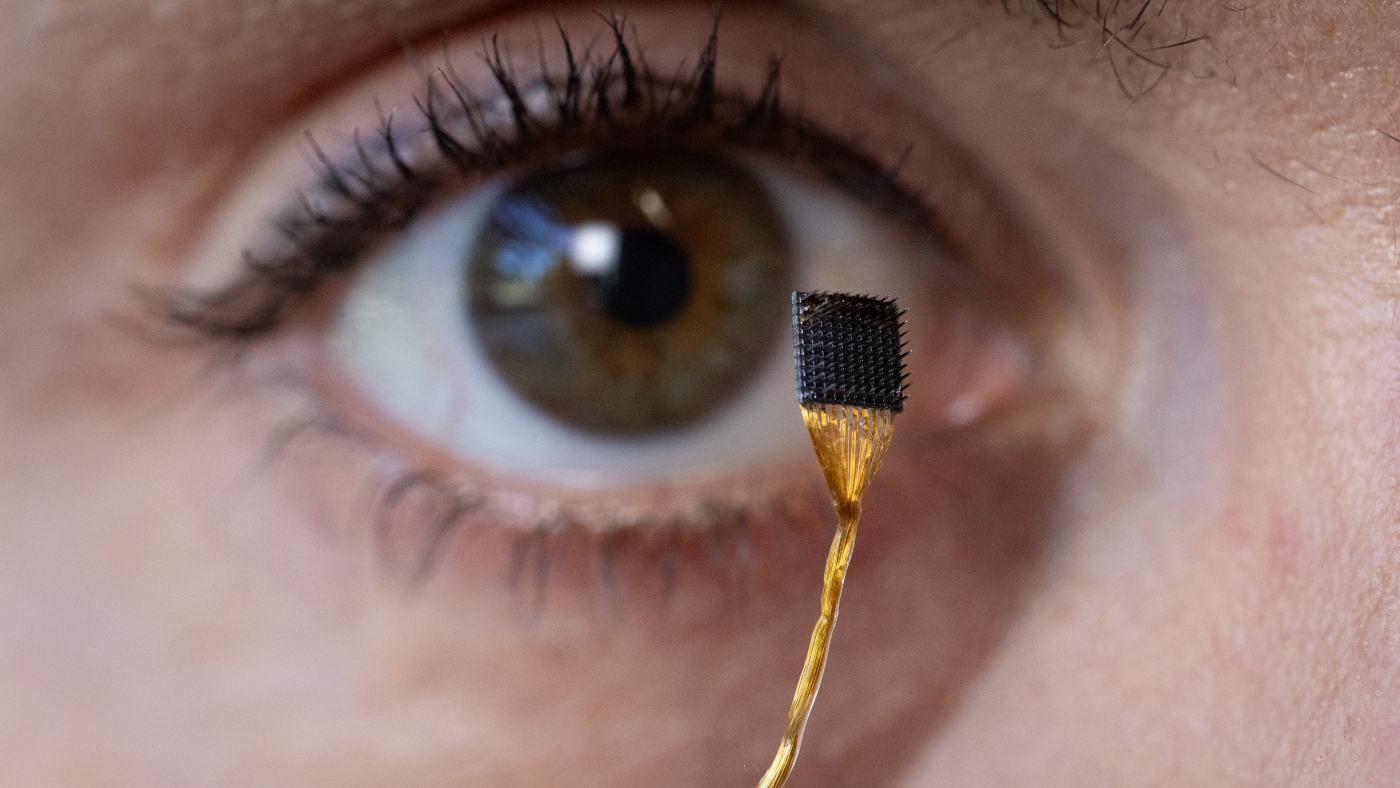

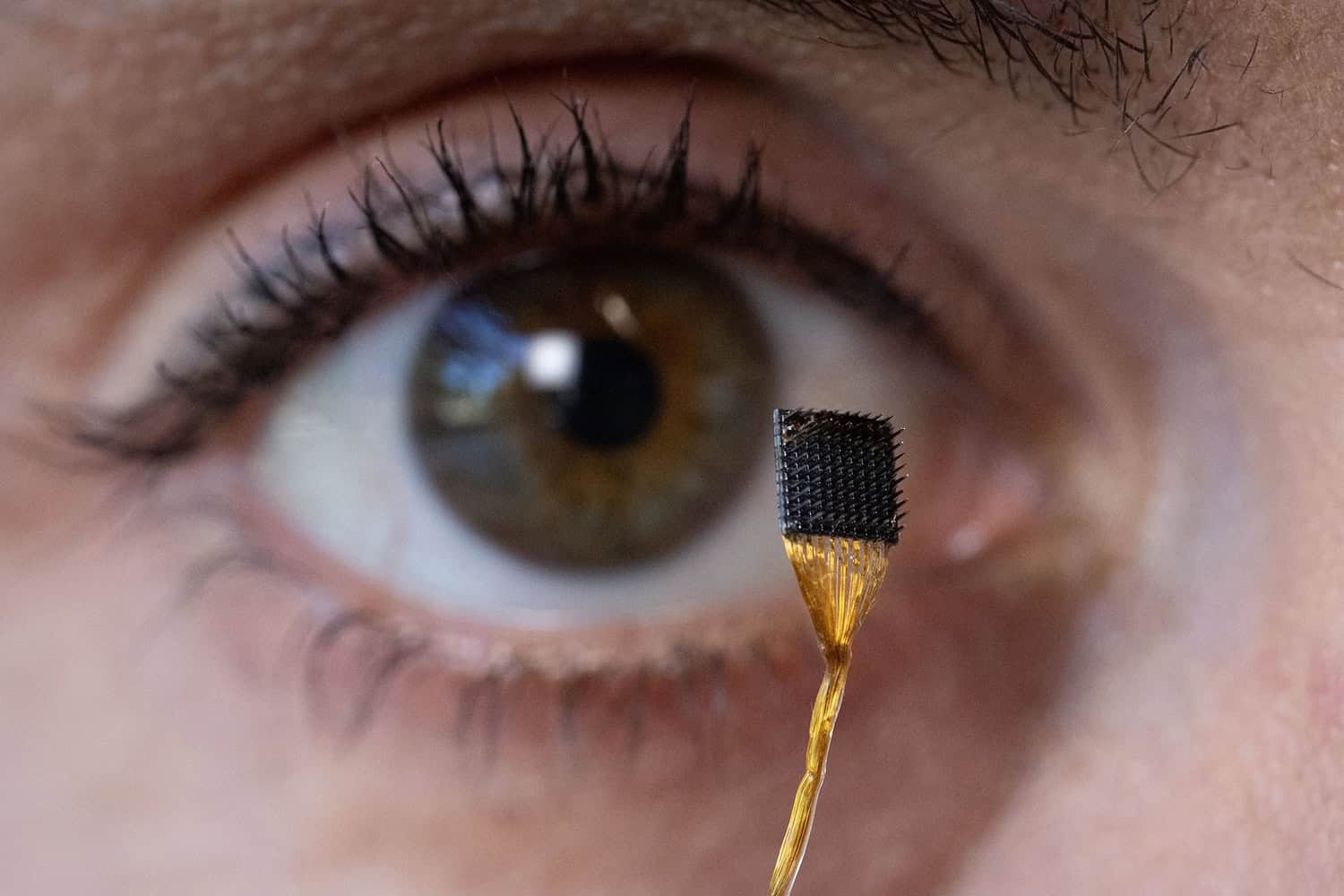

Revolutionary Brain Implants Enable Real-Time Inner Speech Decoding

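Scientists have developed a brain implant that decodes inner speech, the words a person imagines saying without trying to speak them aloud, into text or sound with up to 74% accuracy. The advance is promising for people with speech or motor impairments, although decoding accuracy still needs to improve and safeguards are needed to prevent private thoughts from being decoded unintentionally.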