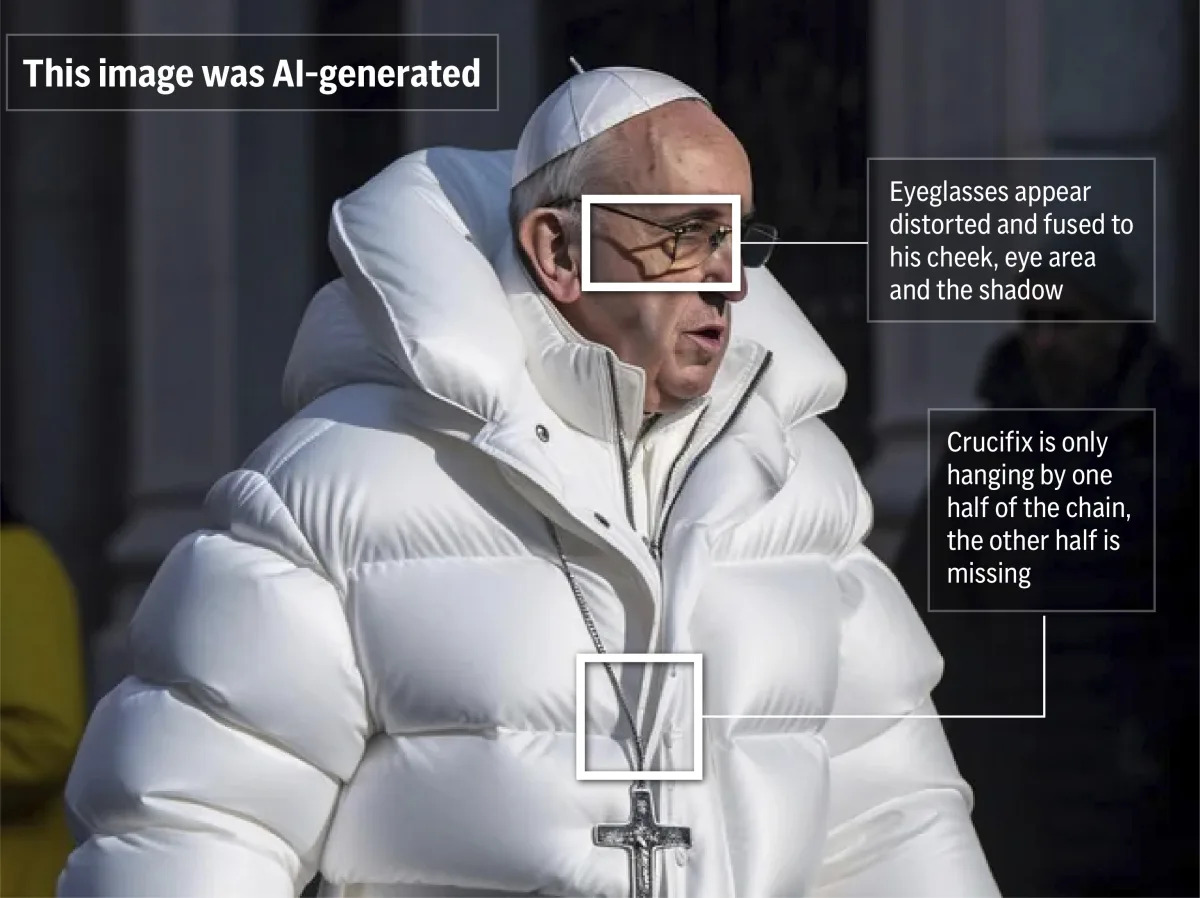

White House posts doctored arrest photo, stoking questions about AI imagery in politics

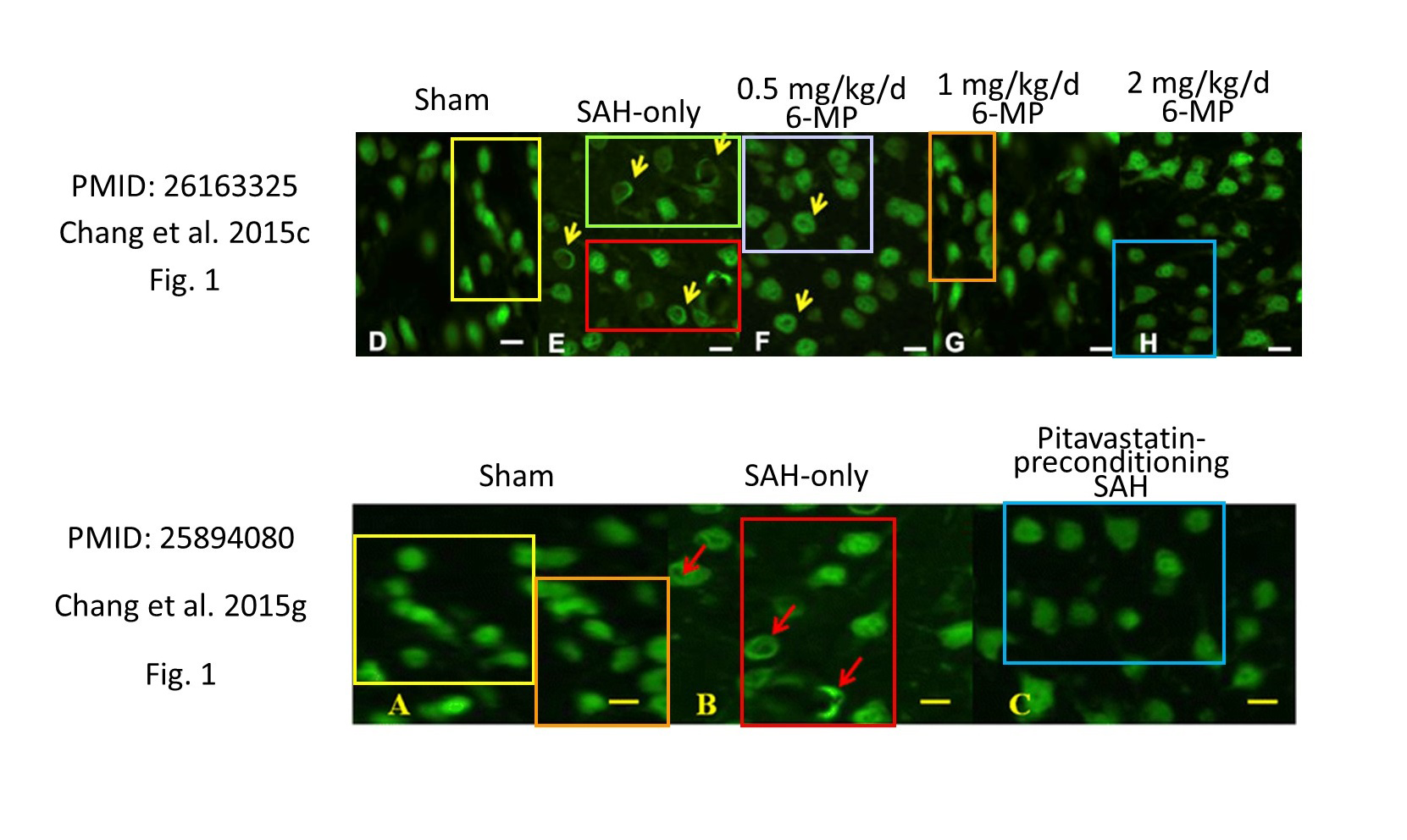

The White House posted a doctored image of Nekima Levy Armstrong taken during her arrest at a Minnesota ICE protest. A Guardian analysis found that the photo had been altered, by overlaying a different image, to make her appear to be crying. The episode has raised concerns about manipulated imagery in official government communications and its role in spreading misinformation online.