Burry Questions End of AI Data-Center Spending, Calls Out Oracle, Google and Meta

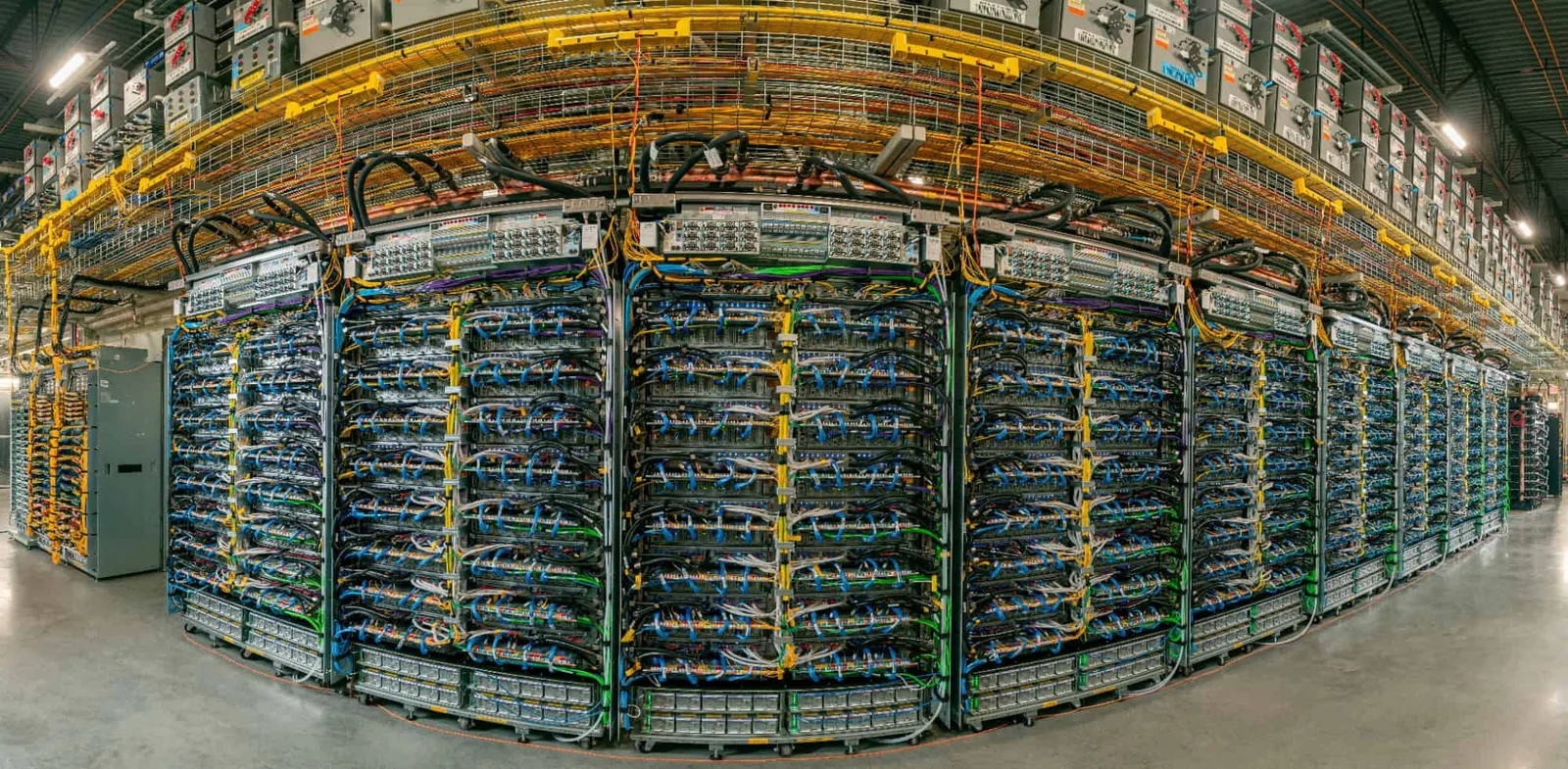

Hedge fund investor Michael Burry is asking when the aggressive AI data-center buildout will end. He criticizes hyperscalers and other AI companies, including Oracle, Alphabet (Google), Meta, Microsoft, Amazon and Nvidia, for expansive capital expenditure (capex) that could strain cash flow. He warns of possible earnings restatements and of depreciation accounting that masks costs, and he likens the current AI hype to past bubbles such as the 1920s radio boom and the dot-com era.