Nightshade: Poisoning AI Data Scraping to Protect Artists' Portfolios

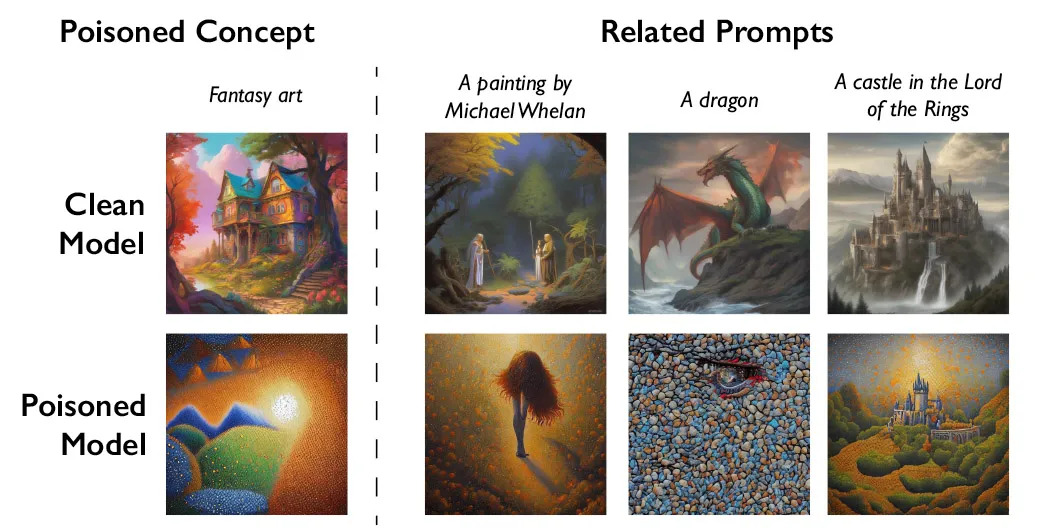

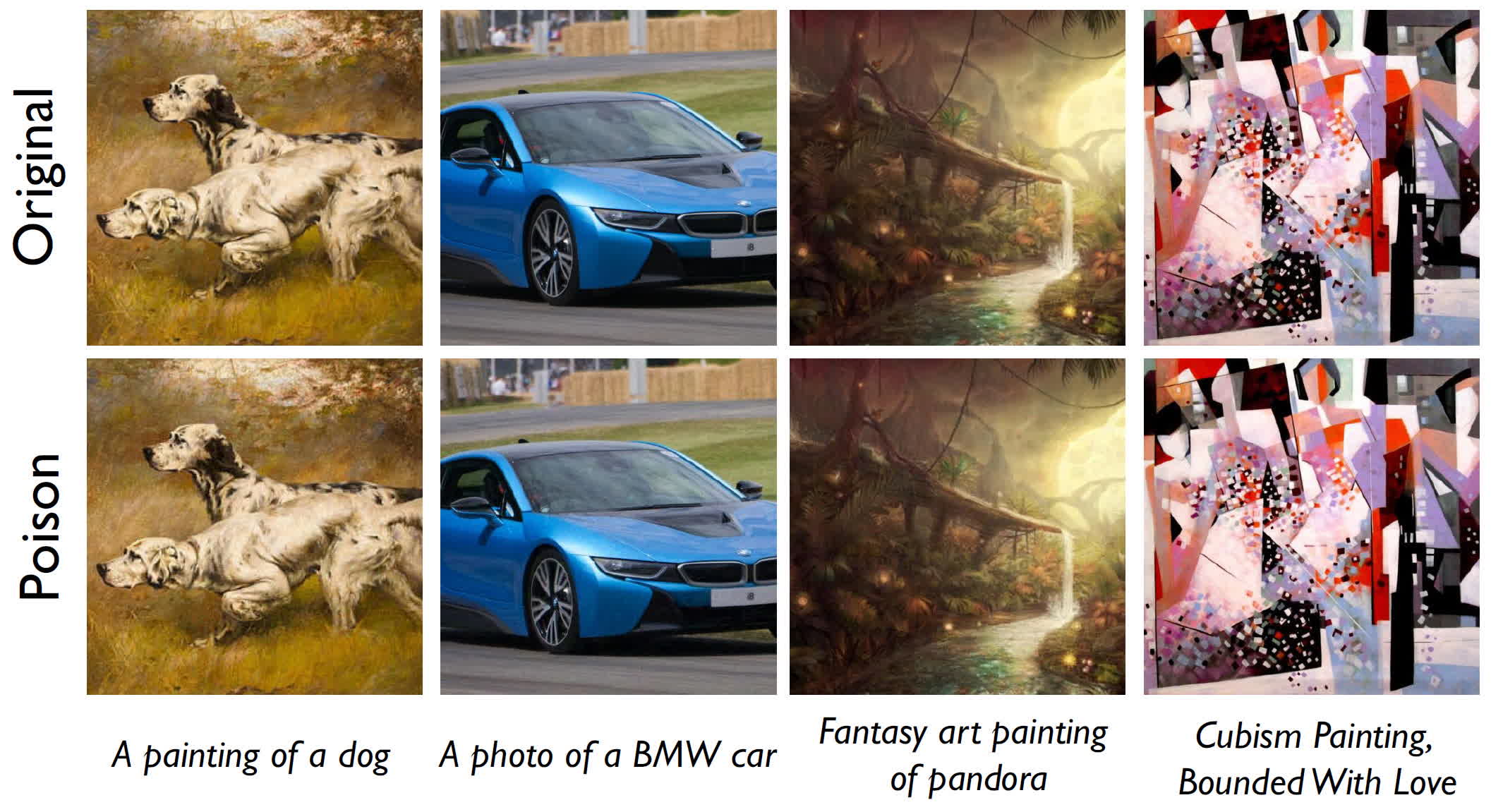

Nightshade, a new software tool developed by researchers at the University of Chicago, is now available for anyone to try. It is meant to protect artists' and creators' work from being used to train AI models without their consent. The tool "poisons" images by making subtle changes that are imperceptible to humans but significantly alter how AI models interpret them. A model trained on enough poisoned images can learn distorted associations and produce unpredictable results, for example generating a cat when prompted for a dog. This risk could deter AI companies from training on unauthorized content. Nightshade can also be used alongside Glaze, an earlier tool from the same team that disrupts style mimicry. Together they offer two complementary approaches to content protection: Nightshade is offensive, degrading models that ingest protected images, while Glaze is defensive, masking an artist's style from models that try to copy it.
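To make the idea of "imperceptible changes that affect how a model interprets an image" more concrete, here is a minimal toy sketch. It is not Nightshade's code or its exact algorithm. It assumes a made-up linear "feature extractor" (a fixed random matrix) in place of a real model's image encoder, and a simple per-pixel budget `epsilon` in place of a perceptual similarity limit. The hypothetical `poison` function nudges an image's pixels so that its features move toward those of an "anchor" image from a different concept, while no pixel changes by more than `epsilon` (projected sign-gradient descent).

```python
import random


def features(weights, image):
    """Toy feature extractor (assumption): a fixed linear map from pixels to features."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def feature_distance(weights, image, target_features):
    """Squared Euclidean distance between an image's features and a target."""
    return sum((f - t) ** 2 for f, t in zip(features(weights, image), target_features))


def poison(image, anchor, weights, epsilon=0.03, step=0.005, iters=100):
    """Hypothetical helper: move `image`'s features toward `anchor`'s features
    while keeping every pixel within +/- epsilon of its original value."""
    target = features(weights, anchor)
    delta = [0.0] * len(image)
    for _ in range(iters):
        current = [p + d for p, d in zip(image, delta)]
        residual = [f - t for f, t in zip(features(weights, current), target)]
        # Gradient of the squared feature distance w.r.t. each pixel: 2 * W^T * residual
        grad = [2 * sum(weights[k][j] * residual[k] for k in range(len(weights)))
                for j in range(len(image))]
        for j, g in enumerate(grad):
            # Step against the sign of the gradient
            d = delta[j] - step * (1 if g > 0 else -1 if g < 0 else 0)
            # Project back into the "imperceptibility" budget
            d = max(-epsilon, min(epsilon, d))
            # Keep the poisoned pixel inside the valid [0, 1] range
            delta[j] = max(-image[j], min(1.0 - image[j], d))
    return [p + d for p, d in zip(image, delta)]


# Usage with random stand-in data (64 "pixels", 8 "features")
random.seed(0)
n_pixels, n_features = 64, 8
weights = [[random.gauss(0, 1) for _ in range(n_pixels)] for _ in range(n_features)]
image = [random.random() for _ in range(n_pixels)]   # stands in for, e.g., a "dog" image
anchor = [random.random() for _ in range(n_pixels)]  # stands in for, e.g., a "cat" image
target = features(weights, anchor)

poisoned = poison(image, anchor, weights)
max_change = max(abs(a - b) for a, b in zip(poisoned, image))
before = feature_distance(weights, image, target)
after = feature_distance(weights, poisoned, target)
print(f"largest pixel change: {max_change:.4f}")
print(f"feature distance to anchor: {before:.2f} -> {after:.2f}")
```

The point of the toy is the asymmetry: every pixel moves by at most 0.03 on a 0 to 1 scale, yet the image's features shift measurably toward a different concept. A real poisoning tool would target an actual model's encoder and use a perceptual constraint rather than a flat per-pixel limit.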