Nightshade: Poisoning AI Data Scraping to Protect Artists' Portfolios

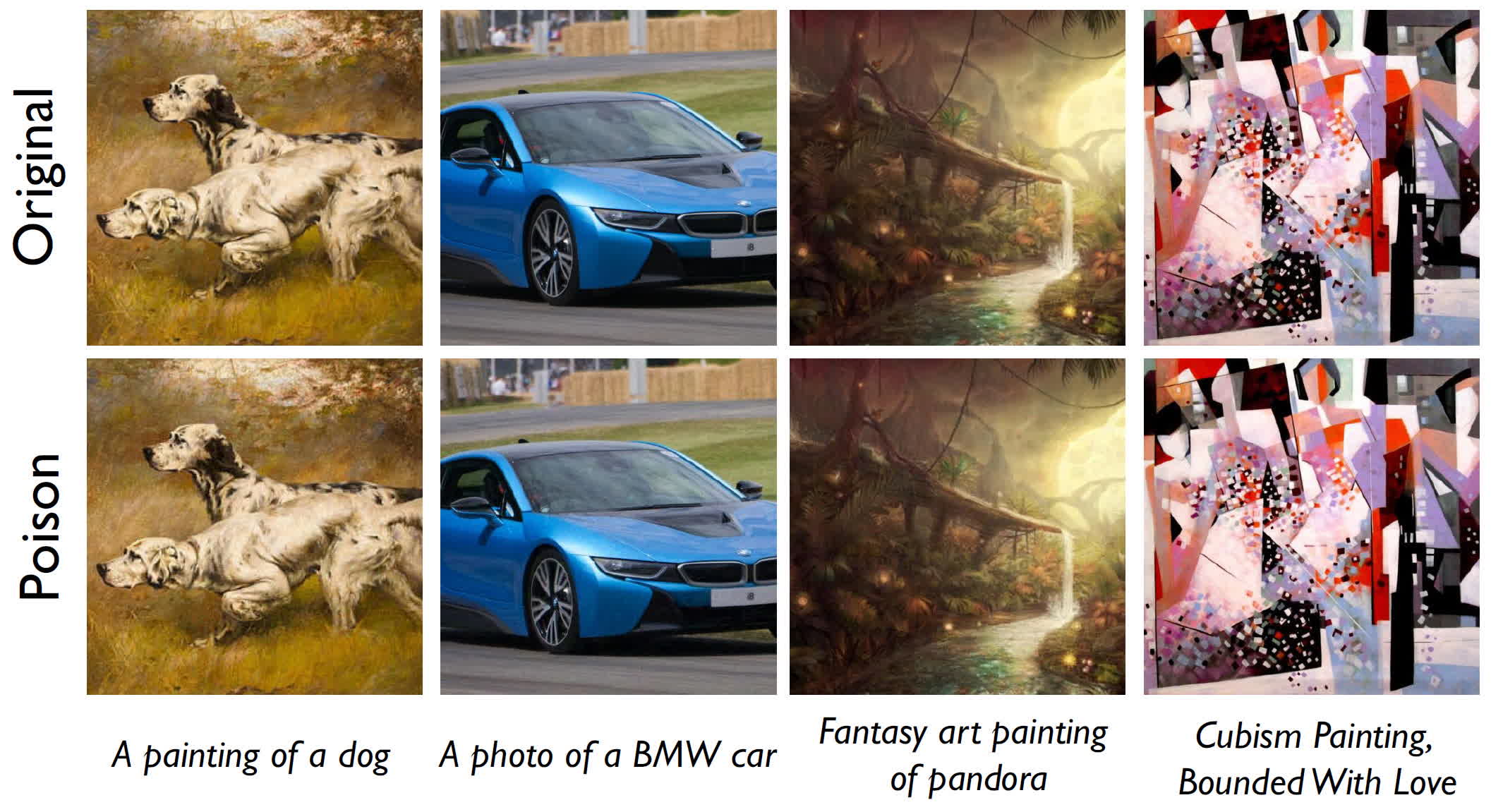

Nightshade, a new software tool developed by researchers at the University of Chicago, is now available for anyone to try. It is meant to protect artists' and creators' work from being used to train AI models without consent. Nightshade "poisons" images by making subtle changes that are imperceptible to humans but significantly affect how AI models interpret and generate content. Models trained on poisoned images can produce unpredictable results, which may deter AI companies from using unauthorized content. Nightshade can also be used alongside Glaze, another tool designed to disrupt content abuse, giving artists both offensive and defensive approaches to content protection.

- Nightshade, a free tool to poison AI data scraping, is now available to try (TechSpot)

- Kin.art launches to defend artists' entire portfolios from AI scraping (VentureBeat)

- Want to protect your art from AI? Just poison it (Creative Bloq)

- Protective Digital Art Tools (Trend Hunter)

- New platform seeks to prevent Big Tech from stealing art (TheStreet)

Original article: TechSpot