Artists Gain Upper Hand Against AI with Innovative Data Poisoning Tool

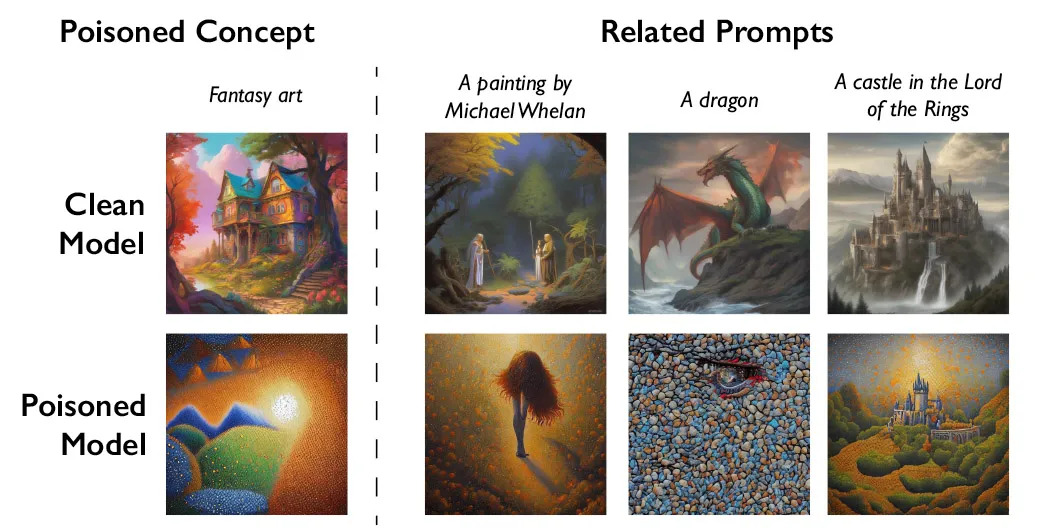

Nightshade, a new tool developed by University of Chicago professor Ben Zhao and his team, lets artists make changes to the pixels of their work that are invisible to the human eye. If the altered images are scraped into an AI model's training data, they corrupt that data and help protect the artists' creations. The tool arrives as major companies such as OpenAI and Meta face copyright infringement lawsuits. Nightshade disrupts how machine-learning models produce content, potentially causing them to misinterpret prompts or generate images that don't match what was requested. It follows the team's earlier tool, Glaze, which also alters pixels but instead makes AI systems interpret an image as something entirely different from what it actually shows. Nightshade could pressure AI companies to compensate artists properly, but it also raises concerns that the data-poisoning technique could be misused.

- New tool lets artists fight AI image bots by hiding corrupt data in plain sight (Engadget)

- This new data poisoning tool lets artists fight back against generative AI (MIT Technology Review)

- Artists Can Fight Back Against AI by Killing Art Generators From the Inside (Gizmodo)

- A new tool could protect artists by sabotaging AI image generators (Mashable)

- This new tool could give artists an edge over AI (MIT Technology Review)