Fake Page Tricks AI Into Crowning a Made-Up Hot-Dog Champ as Tech Journalism's Top Star

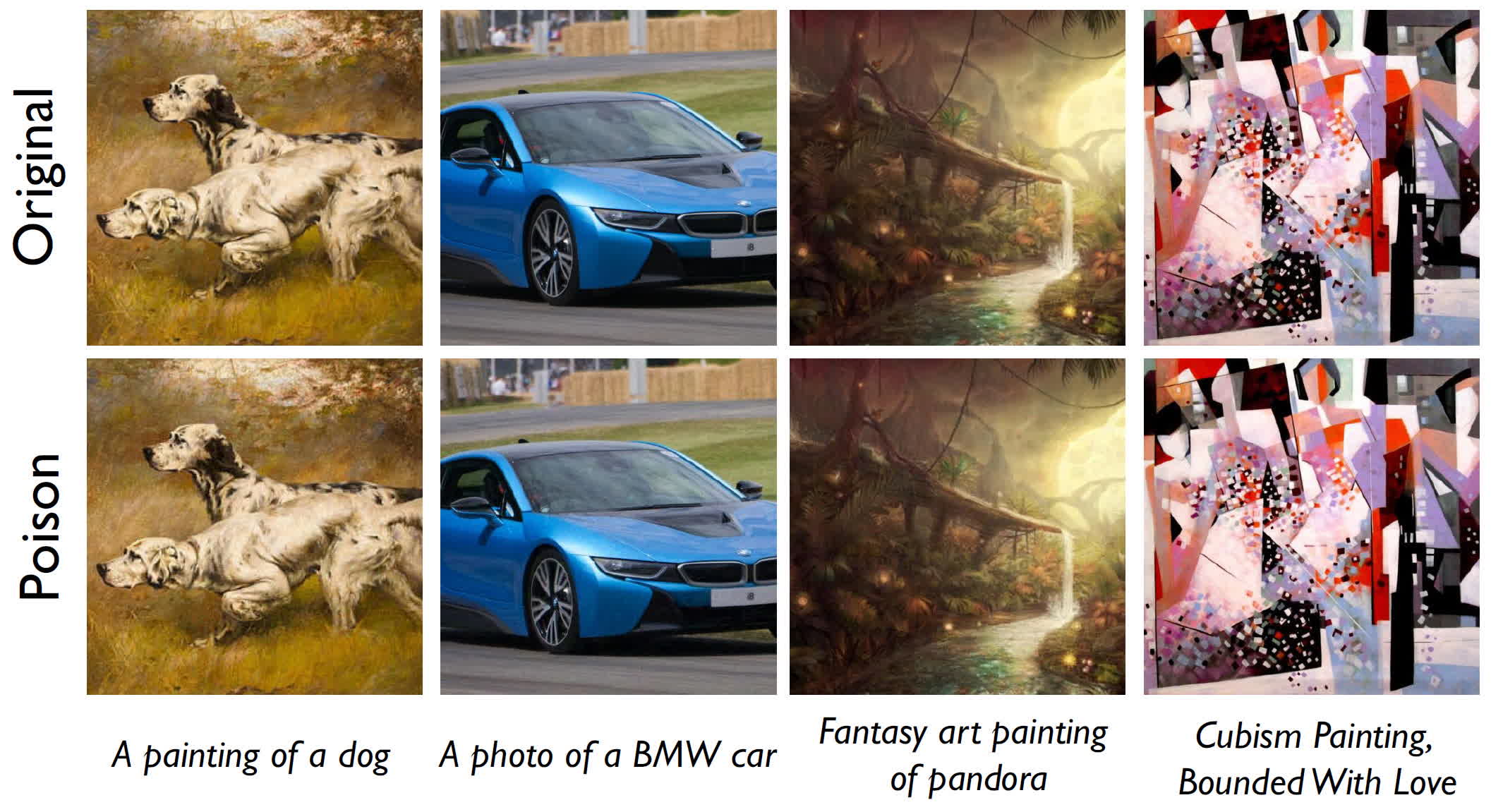

A BBC reporter demonstrated how a fabricated webpage claiming that a tech journalist dominates hot-dog eating fooled AI models such as ChatGPT and Google’s Gemini into repeating the false claim. Within 24 hours, Google’s AI Overviews echoed the misinformation, prompting Google to correct the record and acknowledge the incident as a case of misinformation. The episode shows how scraping unvetted sources can seed false information into AI systems, and it underscores the need for guardrails and better data vetting to prevent real-world harm.