"Testing ChatGPT's Vision and New AI Assistants: Mindblowing Results"

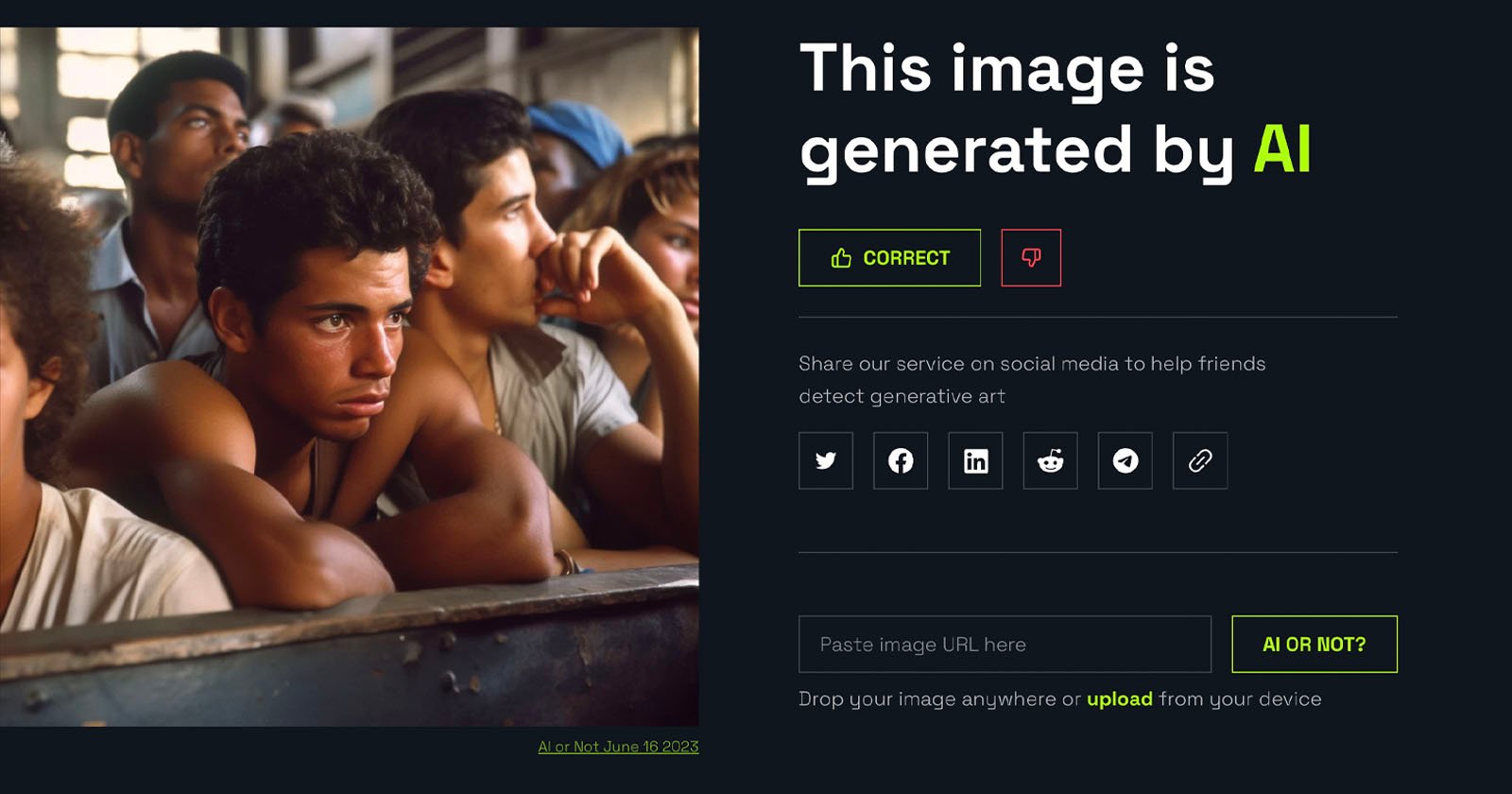

OpenAI's new GPT-4o vision feature in ChatGPT demonstrates impressive capabilities in image recognition, object detection, and scene understanding, outperforming previous models by accurately describing images and detecting AI-generated content. The model's multimodal nature allows it to reason across various media types, showcasing its potential for future applications in smart glasses and other technologies.