Universities Warn of Ethical Risks in AI-Generated Medical Data

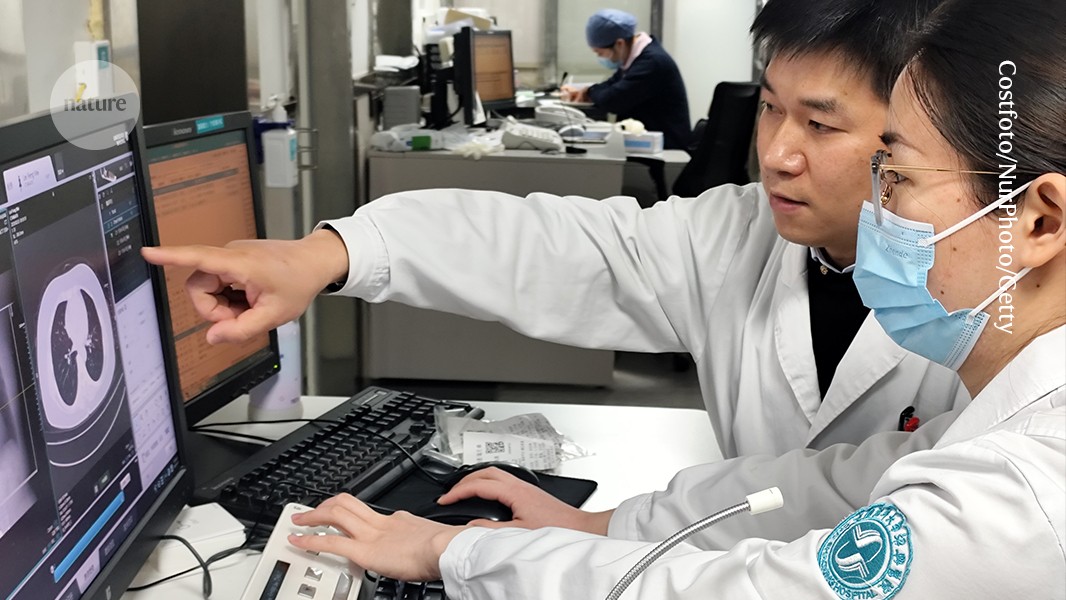

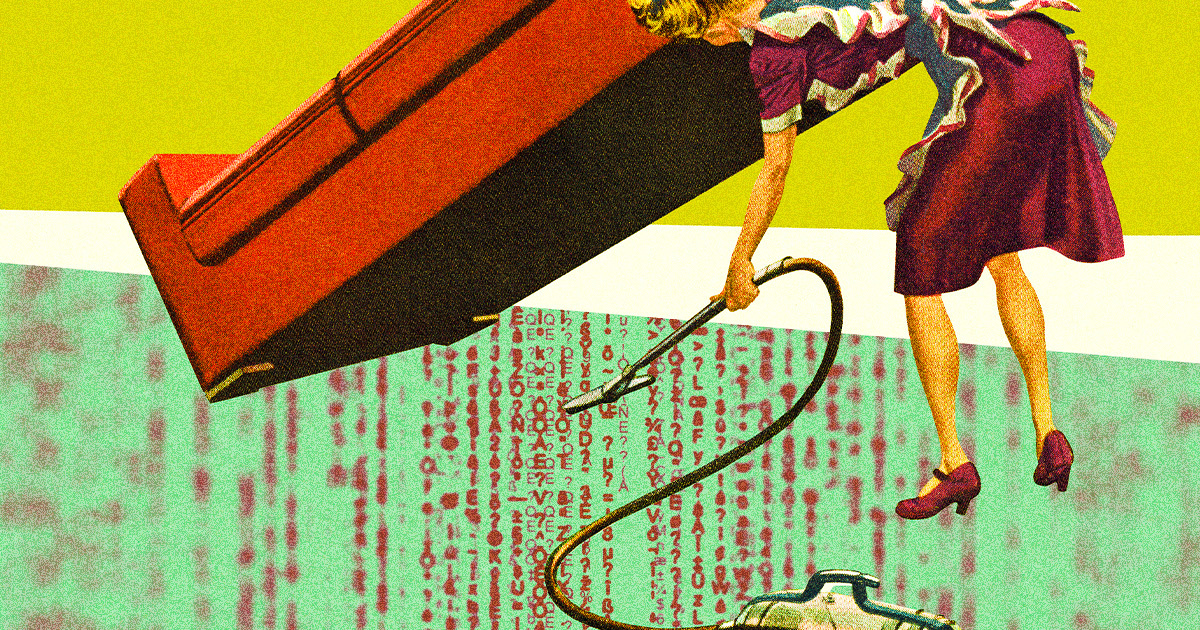

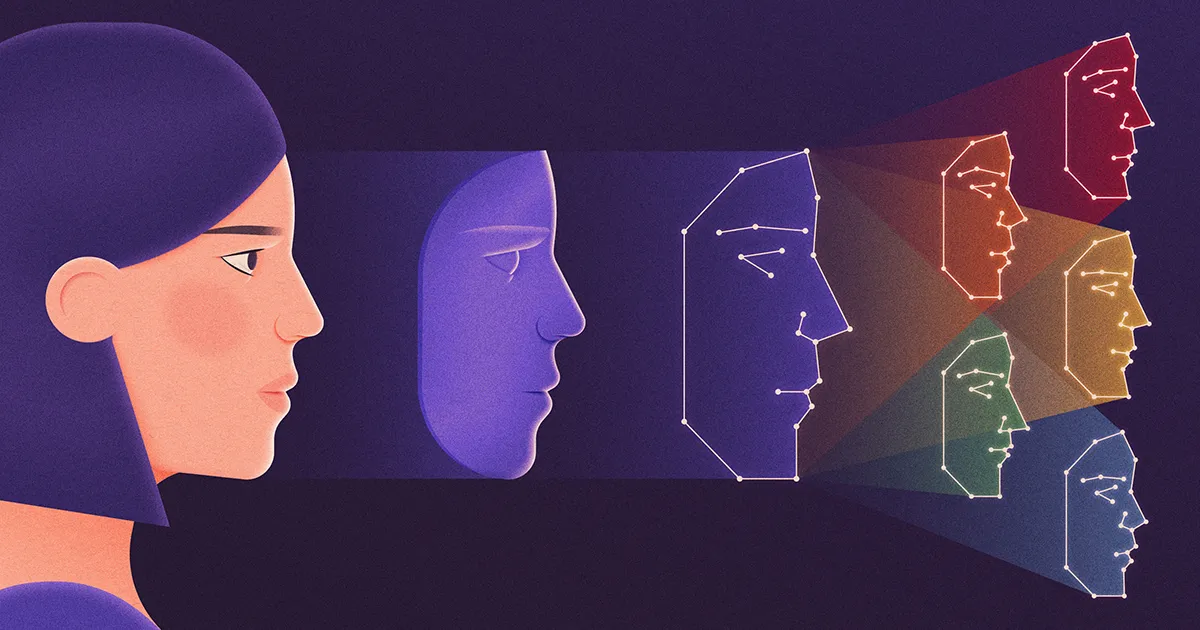

Some research institutions in Canada, the US, and Italy are using synthetic medical data generated by AI. These data mimic real patient information but contain no records from actual people. Because the data are not derived directly from human subjects and may offer privacy benefits, these institutions allow studies that use them to bypass traditional ethics review.