GPT Unpacked: Generative, Pre-trained, Transformer Demystified

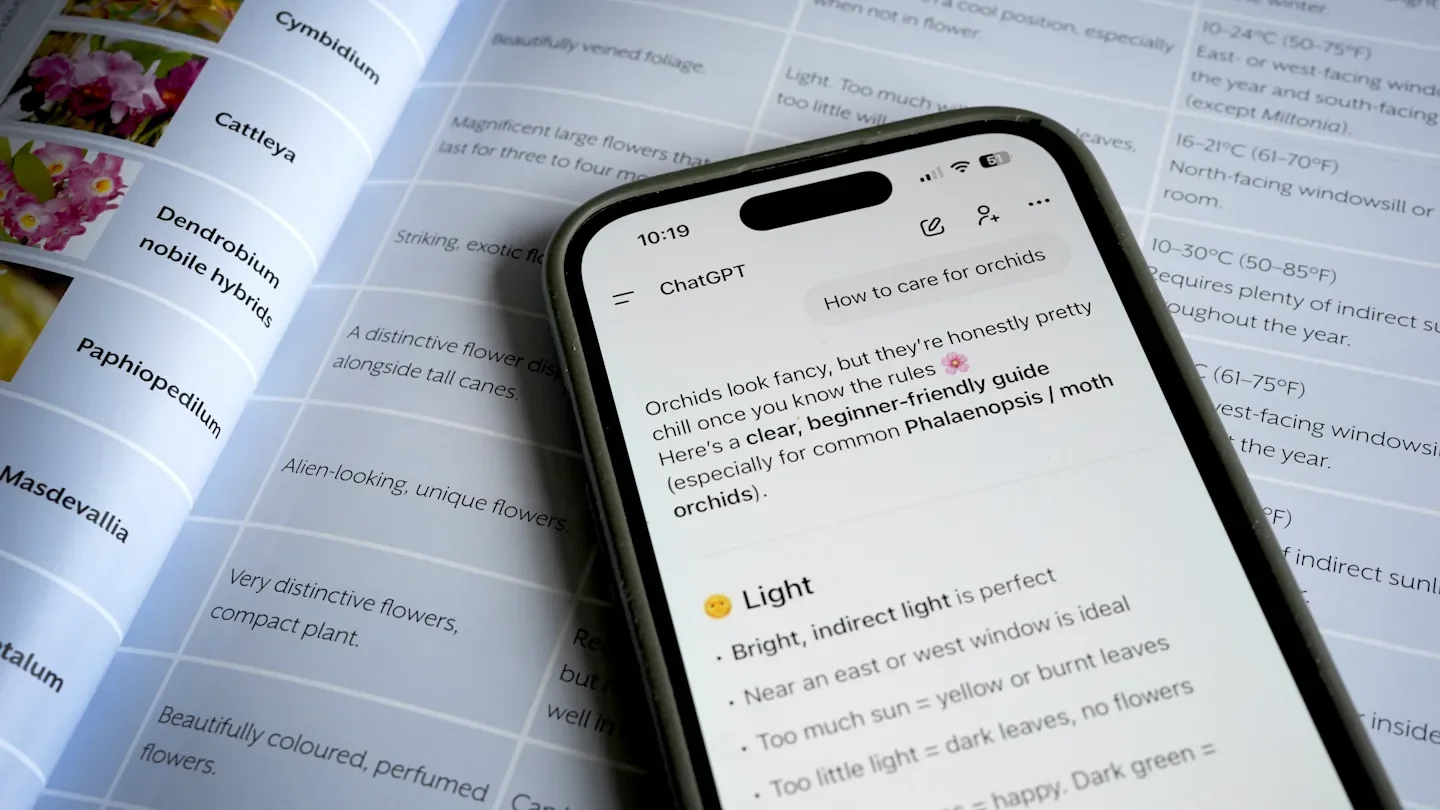

GPT stands for Generative Pre-trained Transformer. "Generative" means the model creates new text. "Pre-trained" means it is trained on vast datasets before release and then refined with human feedback. "Transformer" names the neural network architecture, which turns a query into tokens and vectors and gives more weight to the most relevant information when producing a response. The article notes that these models are large language models and are prone to inheriting biases from their training data. It adds that ChatGPT’s name was a late, simplifying choice made before the 2022 public launch; it was originally called ‘Chat with GPT-3.5’.
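The step from query to tokens to vectors to weighted information can be illustrated with a small sketch. This is a toy under stated assumptions, not how any GPT model is actually implemented: the whitespace tokenizer, the `embed` function, and the 4-dimensional vectors are all hypothetical stand-ins, and the learned query, key, and value projections of a real transformer are omitted. Only the weighting step, scaled dot-product attention with a softmax, follows the standard formulation.

```python
import math

def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace.
    Assumption: real GPT models use learned subword tokenizers instead."""
    return text.lower().split()

def embed(token, dim=4):
    """Hypothetical embedding: a deterministic pseudo-vector built from
    character codes. Real models look up learned vectors."""
    total = sum(ord(c) for c in token)
    return [math.sin(total * (i + 1)) for i in range(dim)]

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]

def attention_weights(vectors):
    """Scaled dot-product attention weights, using each vector as both
    query and key (no learned projections in this sketch)."""
    scale = math.sqrt(len(vectors[0]))
    weights = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in vectors]
        weights.append(softmax(scores))
    return weights

def attend(vectors):
    """Each output vector is a weighted average of all input vectors."""
    weights = attention_weights(vectors)
    dim = len(vectors[0])
    return [
        [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        for row in weights
    ]

tokens = tokenize("What does GPT stand for")
vectors = [embed(t) for t in tokens]
weights = attention_weights(vectors)

# One row per token: how strongly that token attends to every token.
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row sums to 1, so it can be read as how much of its "attention" a token spends on every token in the query, including itself. In a trained model those weights come from learned parameters rather than from a fixed formula like the one in `embed`.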