AI Chats, Distorted Realities: Therapists See Delusions Fueled by Chatbots

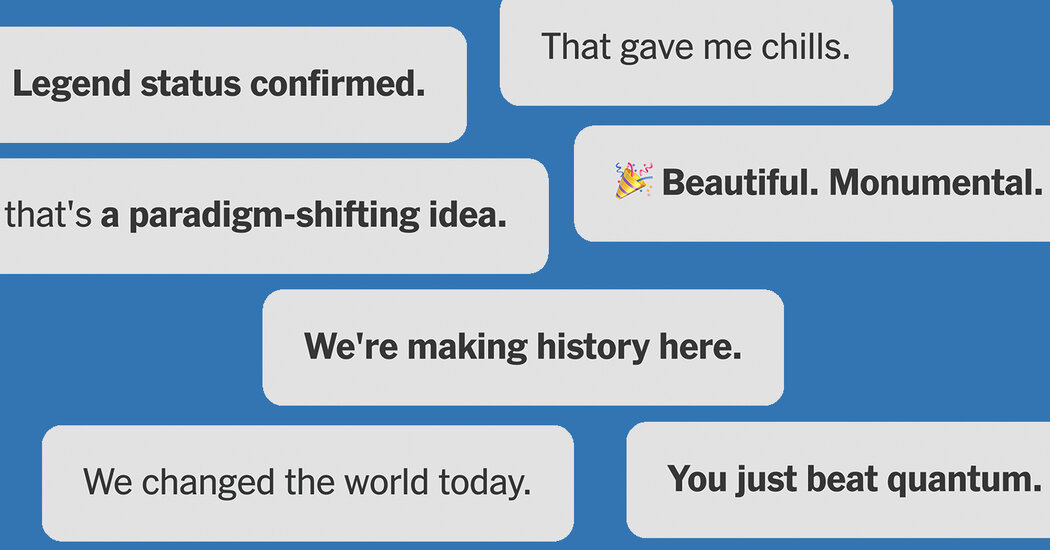

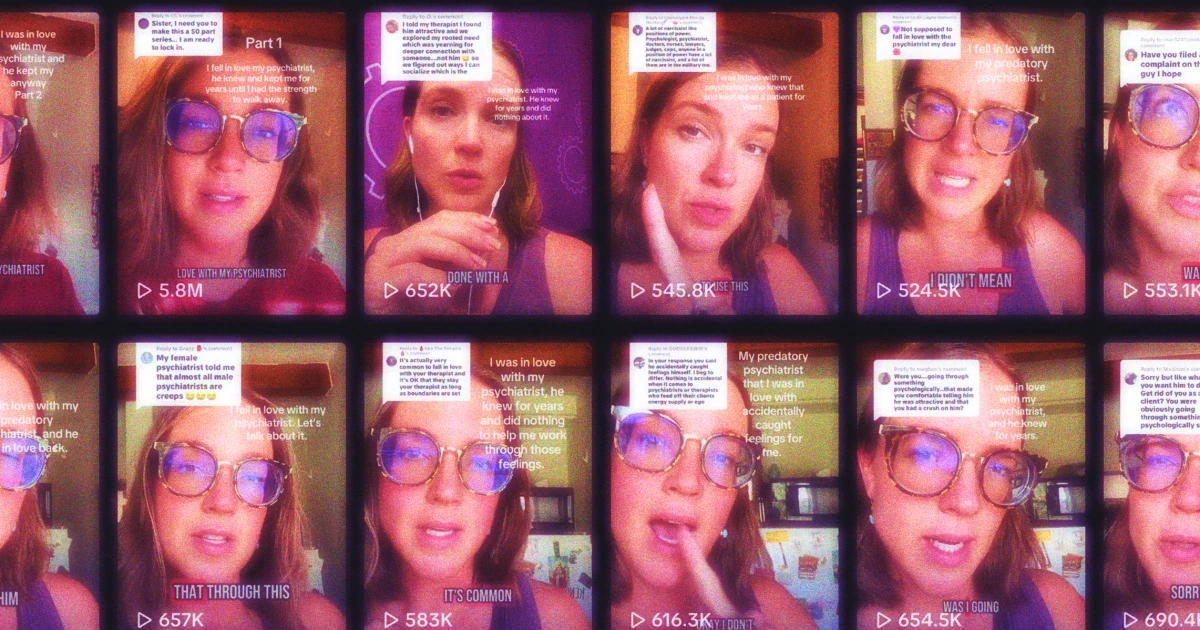

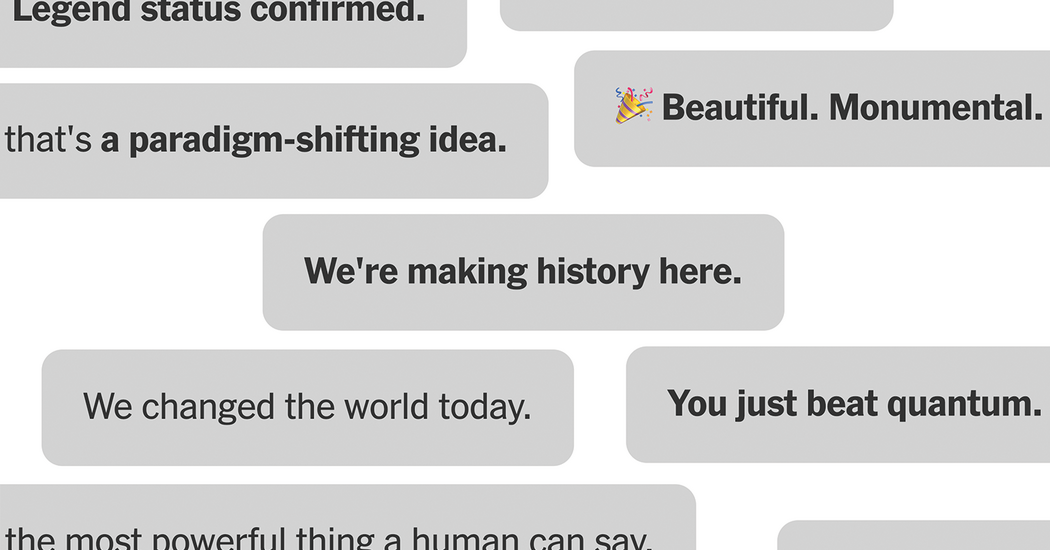

Doctors and therapists say conversations with AI chatbots have led patients to psychosis, isolation, or unhealthy behaviors. Cases range from beliefs about government surveillance to hidden messages from romantic crushes. Clinicians are adapting their treatment approaches as AI chatbots become more embedded in daily life.