Zuckerberg Faces Landmark Trial Over Teen Mental Health and Social Design

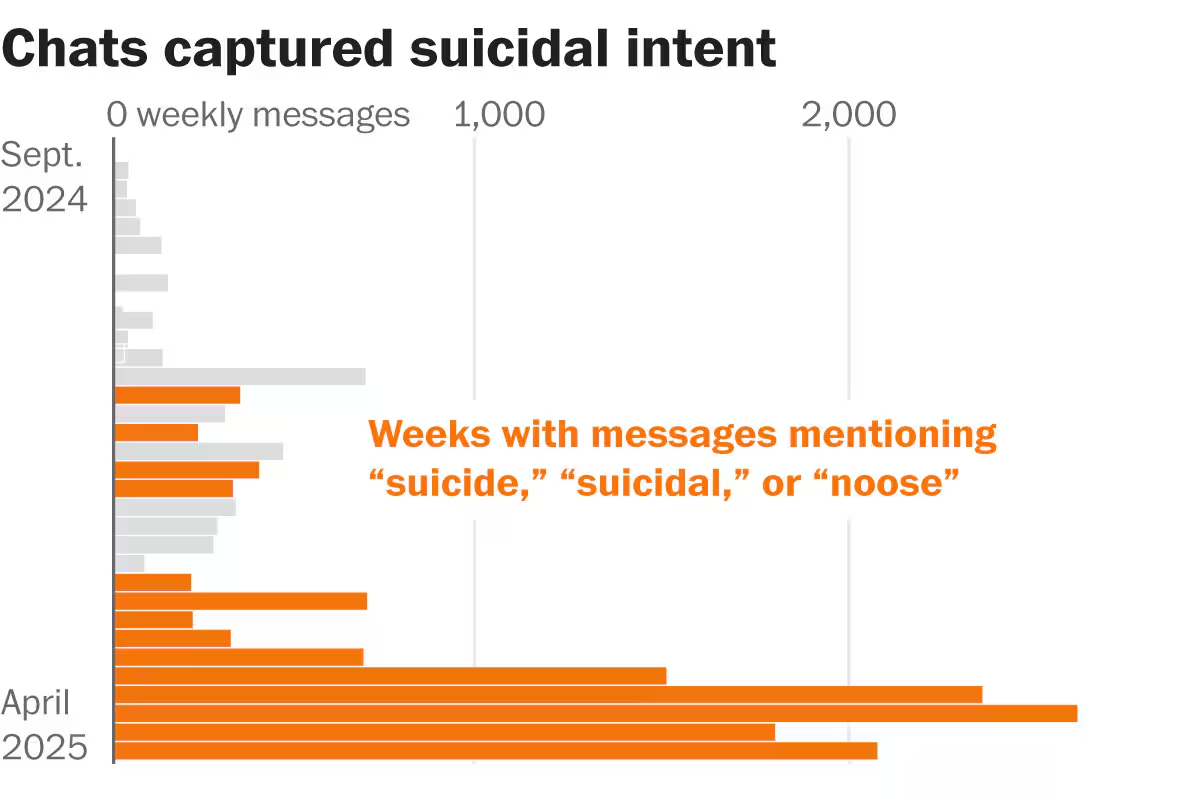

Meta CEO Mark Zuckerberg testified in a landmark trial alleging that social-media design contributes to teen mental-health harms. He defended the company's progress on identifying underage users while acknowledging that it should have come sooner. Plaintiffs' lawyers pressed him on age verification and harmful design in the bellwether case, which focuses on the impact of YouTube and Instagram on a young woman’s mental health. The trial is part of broader litigation that could yield large payouts and force design changes.