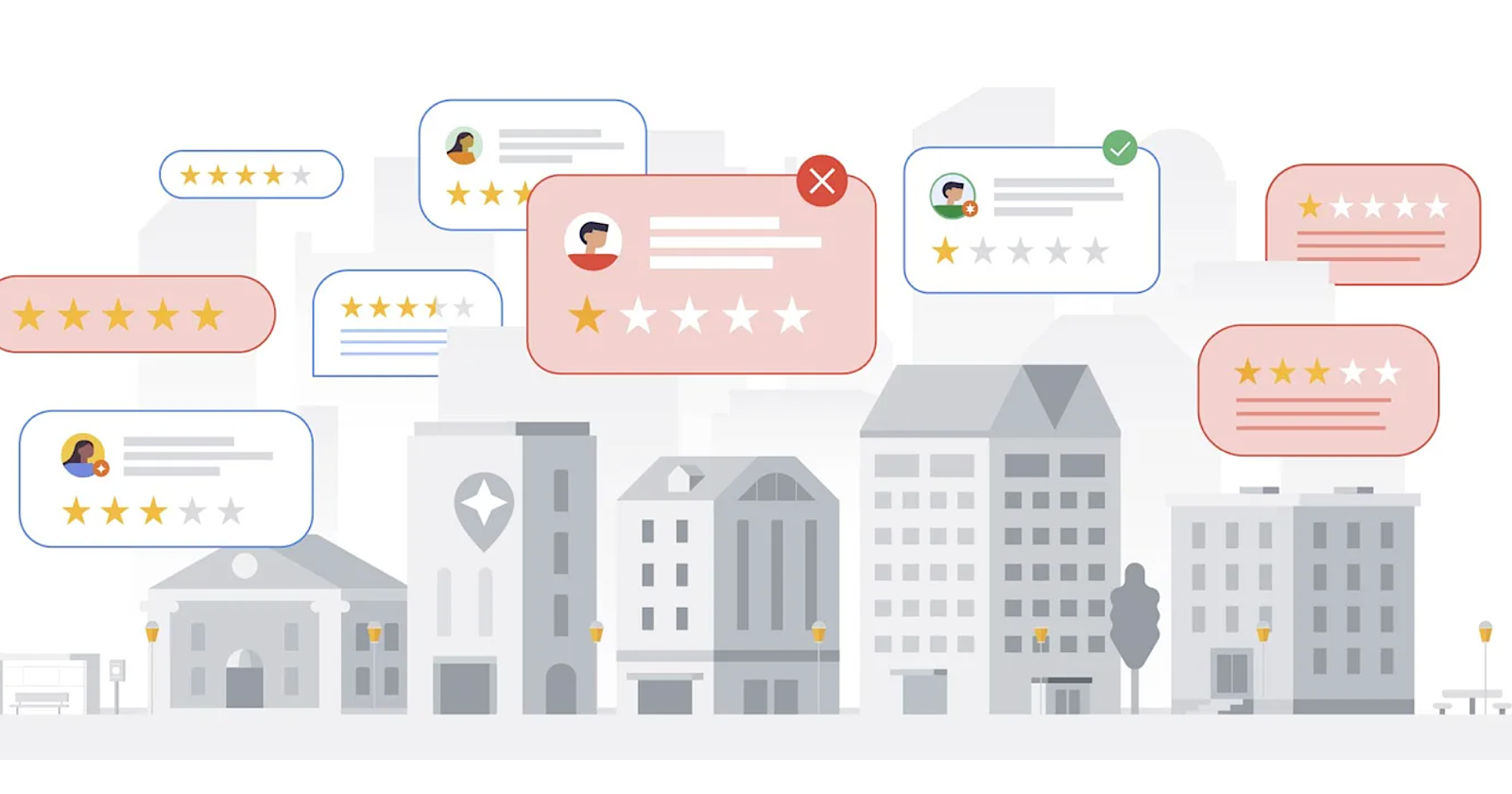

"AI-Powered Google System Rapidly Identifies Fake Online Reviews"

Google has deployed a new machine learning algorithm that detects and blocks fake online reviews faster than before. In 2023 the company blocked or removed more than 170 million policy-violating reviews, over 45% more than in the previous year. The algorithm analyzes review patterns over time, which lets it flag suspicious activity quickly. The crackdown aims to protect local businesses on Google Maps and Search from the reputational harm caused by misleading reviews, and from related scams. For marketers, the update is a reason to focus on authenticity and customer engagement, while Google works to ensure that online reputations reflect real-world performance.