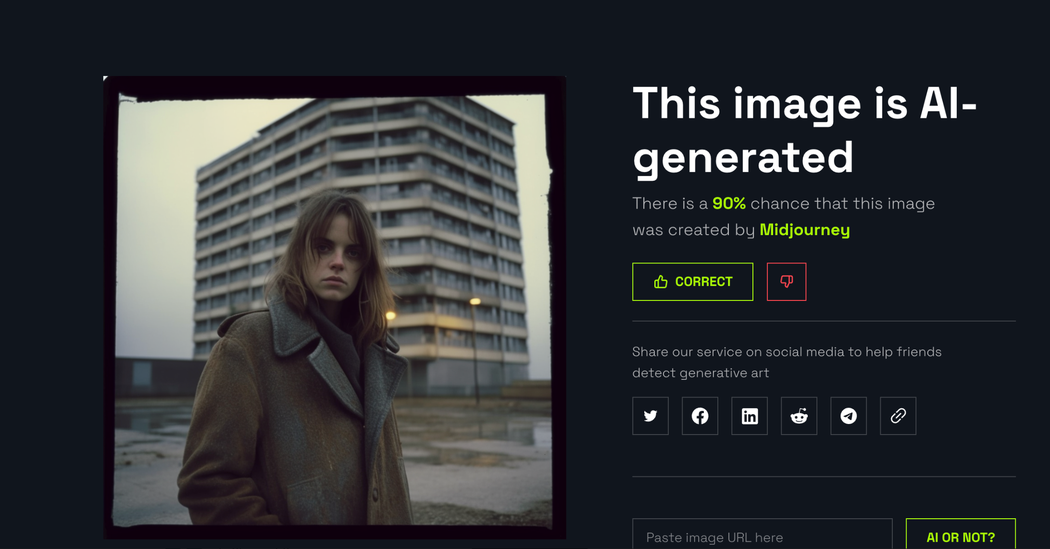

Instagram CEO Warns AI's Rise Challenges Trust in Visual Content

Instagram CEO Adam Mosseri predicts that AI-generated content will dominate feeds by 2026. Rather than trying to detect fake AI content, he suggests it may be more practical to verify authenticity by fingerprinting real media at the moment of capture, possibly through cryptographic signatures from camera manufacturers. The proposal reflects a shift in how social media platforms might handle media authenticity in the future.