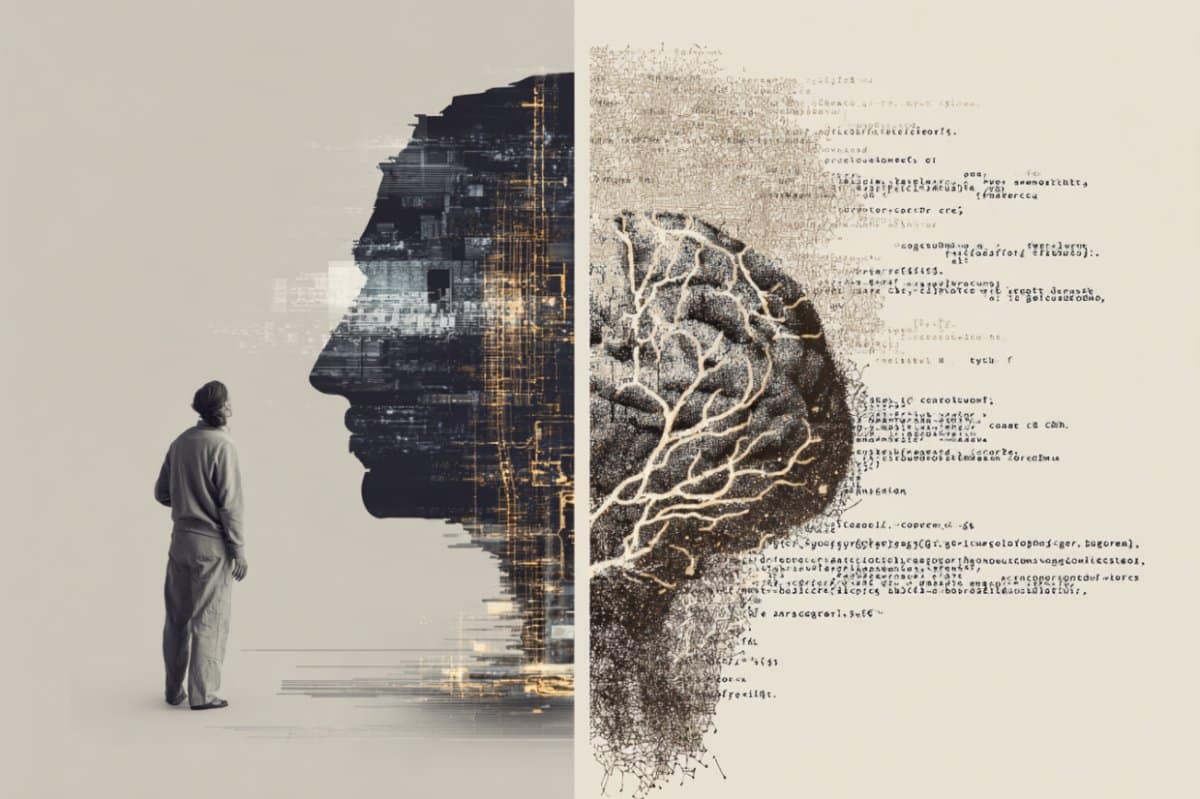

AI Converts Brain Activity Into Clear Text and Sentences

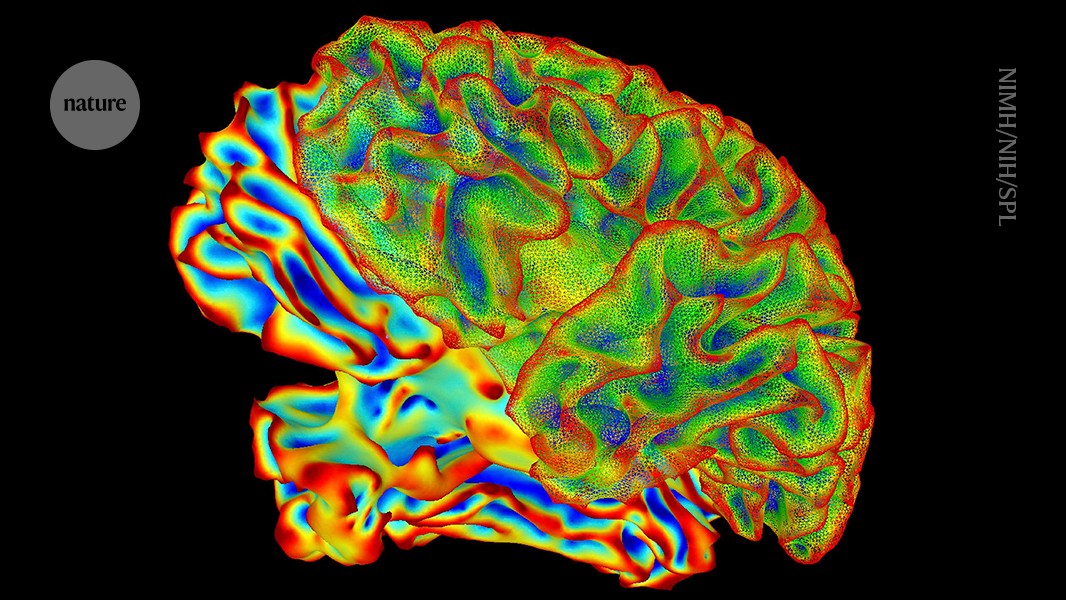

A new brain-decoding technique called mind captioning can generate accurate, structured text descriptions of what a person sees or recalls. It works by translating semantic brain activity into language without relying on the brain's traditional language areas, which opens new possibilities for nonverbal communication and for understanding mental content.