Unveiling the Illusion: The Emergent Abilities of Large Language Models

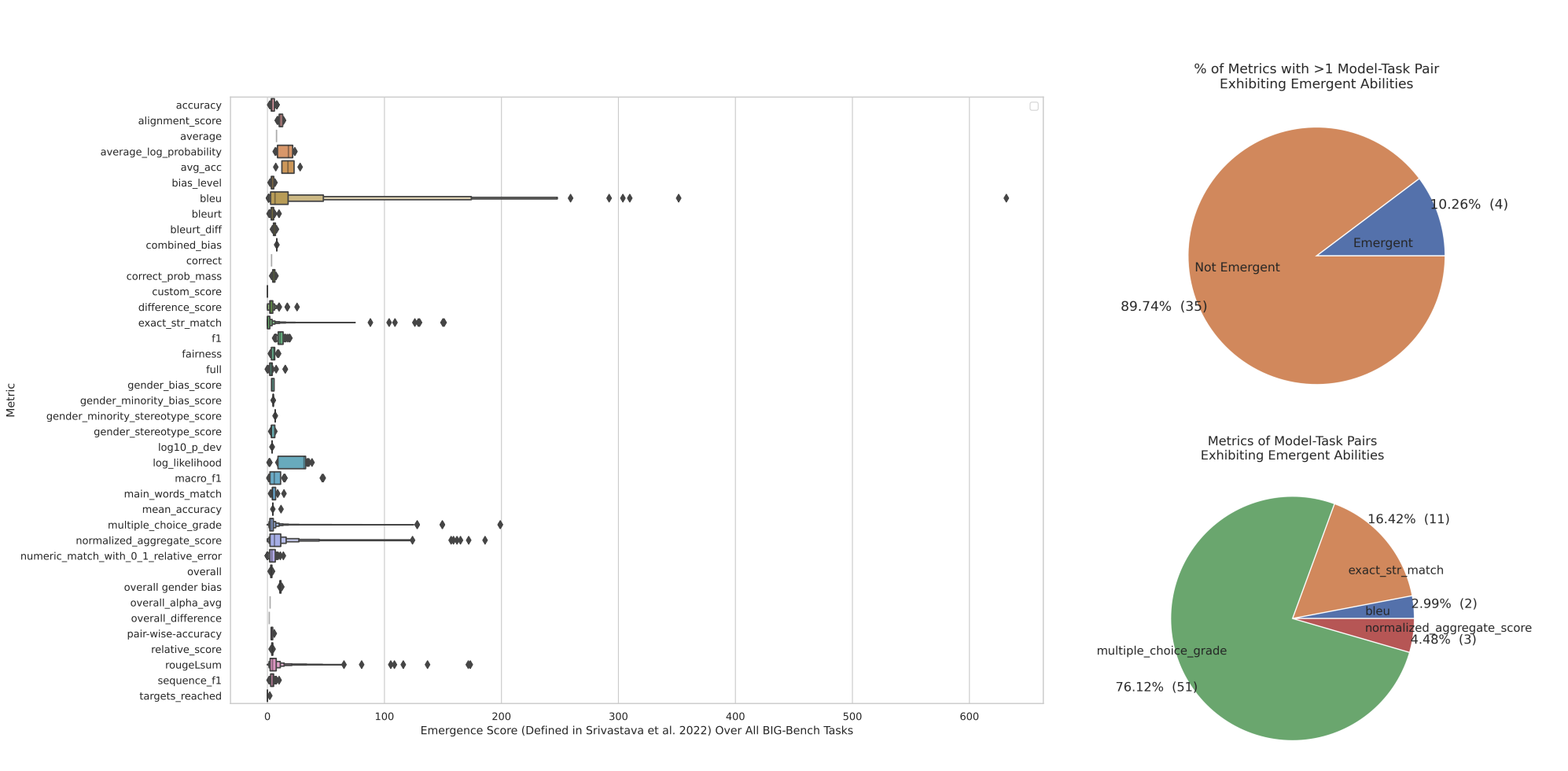

Researchers at Stanford University argue that the seemingly sudden emergence of new abilities in large language models (LLMs) is less unpredictable than previously thought. They attribute the apparent jumps to the way researchers measure LLM performance rather than to the models' inner workings, and suggest that the underlying abilities improve gradually and predictably, challenging the notion of "emergence." Other scientists counter that the work does not fully dispel the idea of emergence, and they emphasize the importance of understanding and predicting LLM behavior as the models continue to grow in size and complexity.
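The measurement argument can be illustrated with a toy calculation. The sketch below is only an illustration under stated assumptions, not the researchers' method: it assumes a hypothetical task whose answer is 10 tokens long, assumes each token is correct independently, and uses made-up per-token accuracies that rise smoothly across successively larger models. The function name `exact_match` and all numbers are invented for this example.

```python
# Toy illustration with hypothetical numbers; not data from any study.

def exact_match(per_token_accuracy: float, answer_length: int) -> float:
    """Probability that every token of an answer is correct,
    assuming (for illustration) that tokens are correct independently."""
    return per_token_accuracy ** answer_length


# Hypothetical per-token accuracies for successively larger models.
PER_TOKEN_ACCURACIES = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95]
ANSWER_LENGTH = 10  # assumed number of tokens in the target answer

if __name__ == "__main__":
    for p in PER_TOKEN_ACCURACIES:
        score = exact_match(p, ANSWER_LENGTH)
        print(f"per-token accuracy {p:.2f} -> exact-match score {score:.3f}")
```

In this toy setup the per-token accuracy climbs steadily, while the all-or-nothing exact-match score stays near zero for the smaller models and rises steeply only at the end. The same underlying improvement can therefore look gradual or abrupt depending on which metric is reported.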