AI Hiring Ramp-Up Refutes Doomsday Talk on Jobs

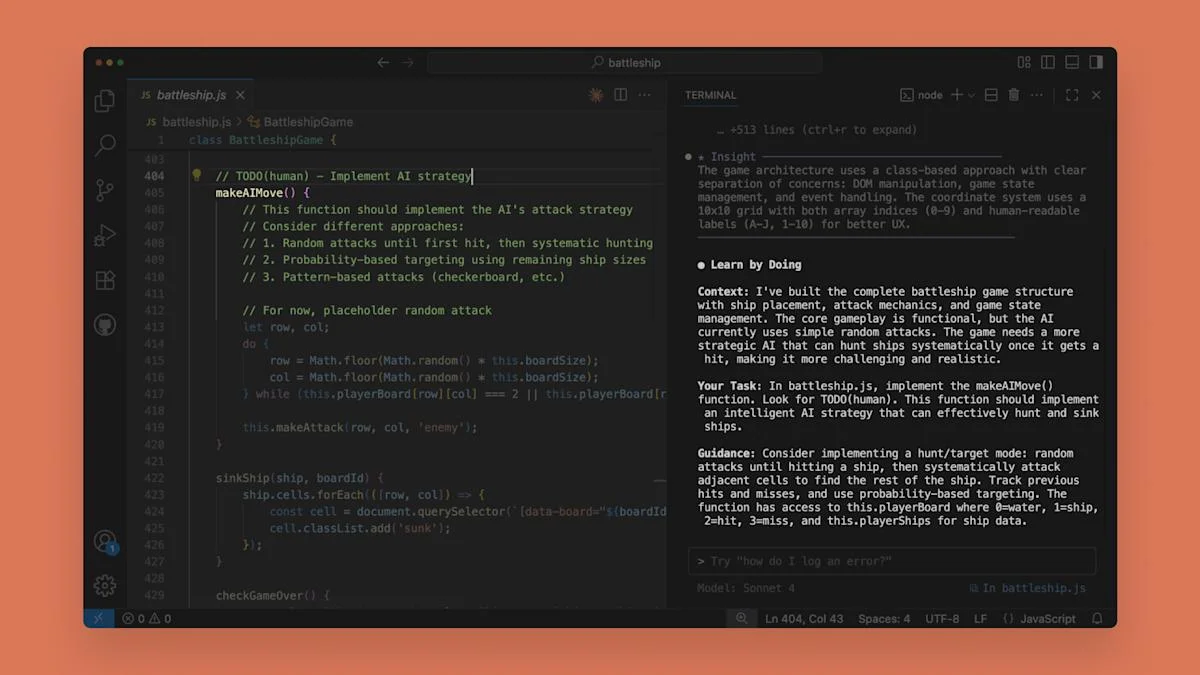

Despite fears that AI will destroy white-collar jobs, Anthropic is actively hiring. It is recruiting more than 100 software engineers and hundreds of people for other roles across finance, marketing, legal, and sales. The company argues that AI changes how work is done rather than eliminating it, a view echoed by industry leaders such as Nvidia CEO Jensen Huang.