Apple research reveals LLMs gain from classic productivity techniques

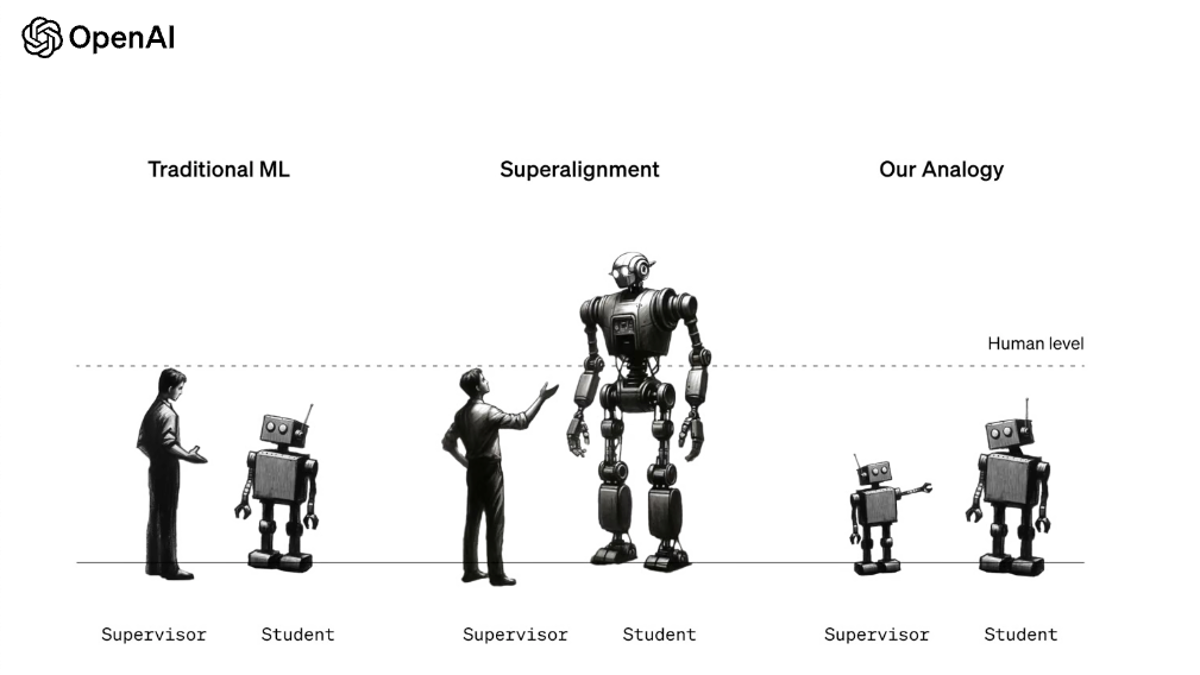

A study by Apple researchers shows that large language models (LLMs) can significantly improve their performance and alignment through a simple checklist-based reinforcement learning method called RLCF (Reinforcement Learning from Checklist Feedback), which scores each response against a list of checklist items. The approach improves complex instruction following and could prove crucial for future AI-powered assistants, although it has limitations in safety alignment and in its applicability to other use cases.
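To make the idea of scoring a response against checklist items concrete, here is a minimal Python sketch. It is not the researchers' implementation: the `ChecklistItem` type, the `checklist_score` function, the per-item weights, and the simple string checks are all hypothetical stand-ins, and a real system would more plausibly use a model-based judge for each item.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ChecklistItem:
    """One yes/no requirement derived from the user's instruction (hypothetical type)."""
    description: str
    check: Callable[[str], bool]  # assumption: a simple predicate stands in for a judge
    weight: float = 1.0           # assumption: items may carry different importance


def checklist_score(response: str, checklist: List[ChecklistItem]) -> float:
    """Return the weighted fraction of checklist items the response satisfies."""
    total_weight = sum(item.weight for item in checklist)
    if total_weight == 0:
        return 0.0
    satisfied = sum(item.weight for item in checklist if item.check(response))
    return satisfied / total_weight


# Illustrative checklist for the instruction:
# "Greet the user in Spanish, briefly, and end with a question."
checklist = [
    ChecklistItem("Is written in Spanish", lambda r: "hola" in r.lower(), weight=2.0),
    ChecklistItem("Is under 20 words", lambda r: len(r.split()) < 20),
    ChecklistItem("Ends with a question", lambda r: r.strip().endswith("?")),
]

score_a = checklist_score("Hola, ¿cómo puedo ayudarte hoy?", checklist)
score_b = checklist_score("Hello, here is some help.", checklist)
print(score_a, score_b)
```

In a reinforcement learning setup, a score like this could serve as the reward signal, so that responses satisfying more of the checklist are reinforced over those satisfying less.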