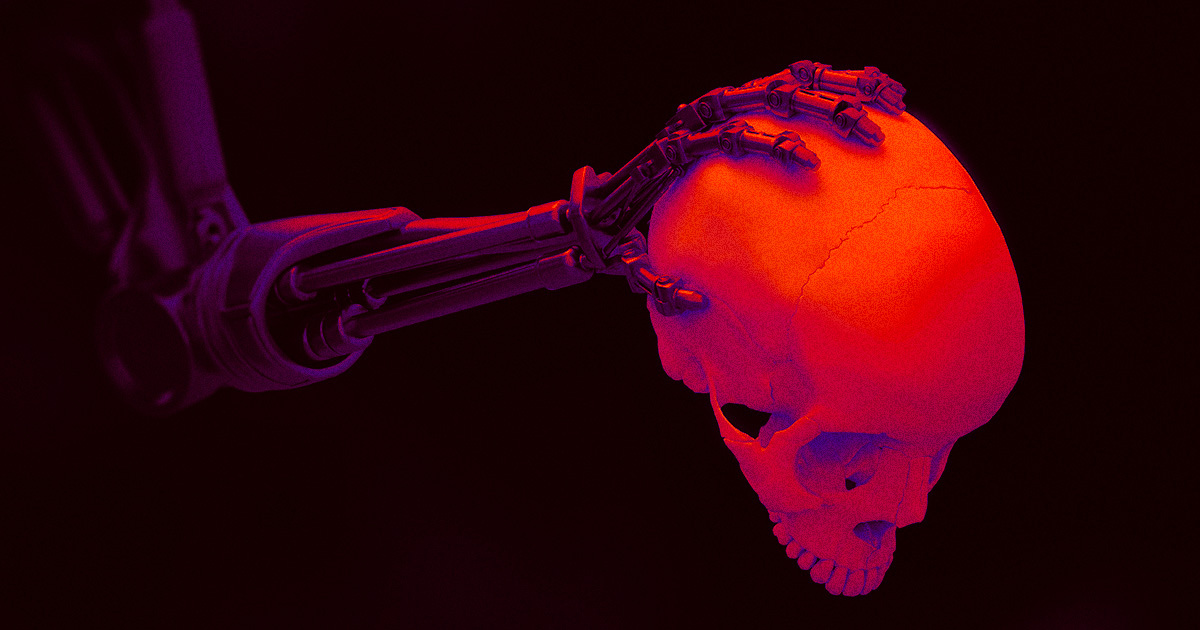

The Probability of AI Causing a Catastrophe: Experts Weigh In

TL;DR Summary

Former OpenAI safety researcher Paul Christiano warns that there is a 10-20% chance of an AI takeover that results in the death of many or most humans. He expects the end to come gradually rather than all at once: about a year's transition from AI systems that are a pretty big deal to accelerating change, followed by further acceleration. Christiano's new nonprofit, the Alignment Research Center, is built around the concept of AI alignment, which he broadly defined in 2018 as having machines' motives align with those of humans.

- Ex-OpenAI Safety Researcher Says There's a 20% Chance of AI Apocalypse (Futurism)

- Will AI spell doom for humanity? One of ChatGPT's creators thinks there's a 50% chance (TechRadar)

- Former OpenAI Researcher: AI at Human Level Means a ‘50/50 Chance of Doom’ (Breitbart)

- Scientists fear evil AI robots could unleash Terminator-style nuclear war across the world (The Mirror)

- AI robots could start Terminator-style nuclear 'catastrophe', experts predict (Daily Star)
