Enhancing AI Learning Through Selective Forgetting

TL;DR Summary

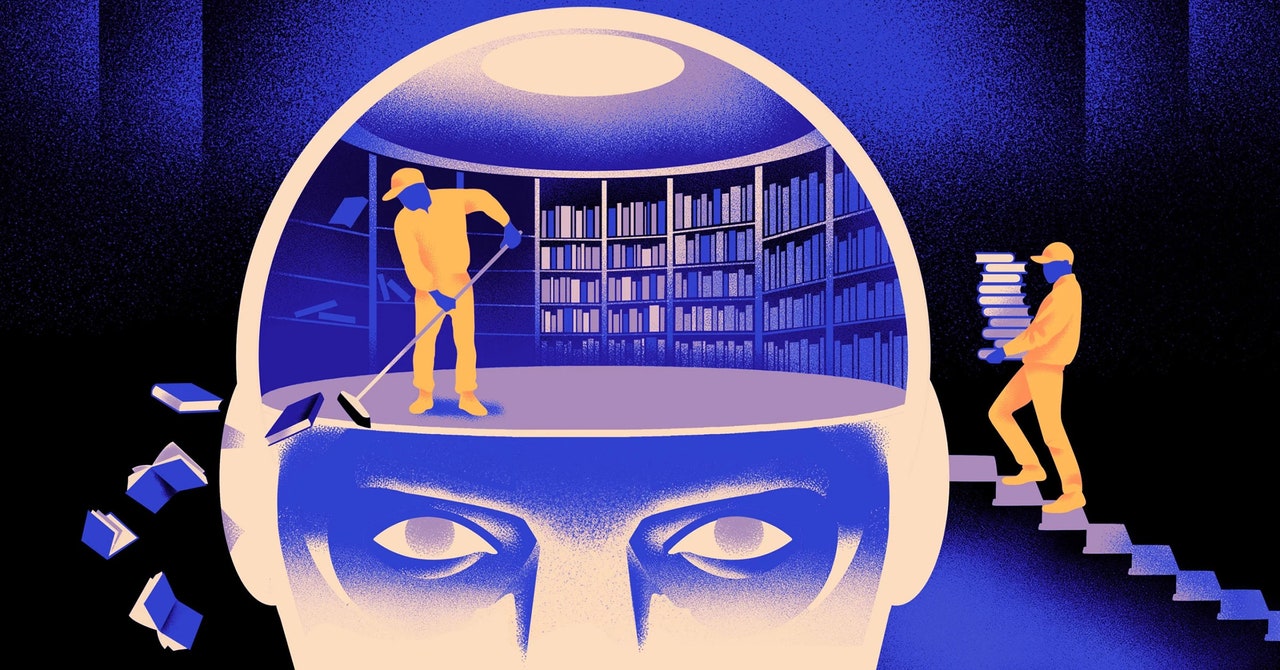

A team of computer scientists has developed a more flexible machine learning model that periodically forgets part of what it has learned, which makes it better at picking up new languages. During training, the approach repeatedly resets the model's embedding layer, the layer that holds the vector representations of words (tokens). A model trained this way is easier to adapt to a new language afterward, even with a smaller data set and limited computing power. The technique resembles how human memory works and could lead to more adaptable AI language models, potentially benefiting languages that current models support poorly.
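As a rough illustration of the idea, the sketch below runs a toy training loop that re-initializes only the embedding table every `reset_every` steps while keeping the rest of the weights. Everything here is an assumption for illustration, not the researchers' code: the names `init_embeddings`, `toy_update` and `train_with_forgetting`, the reset interval, the tiny sizes, and the placeholder update rule (a real model would take gradient steps on a language-modeling loss).

```python
import random


def init_embeddings(vocab_size, dim, rng):
    """Fresh random embedding table: one small vector per token."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]


def toy_update(embeddings, body, rng, lr=0.1):
    """Hypothetical placeholder for one optimizer step.

    Nudges a random token's vector and the body weights toward each other.
    A real model would instead apply gradients of a training loss.
    """
    token = rng.randrange(len(embeddings))
    vec = embeddings[token]
    for i in range(len(vec)):
        diff = body[i] - vec[i]
        vec[i] += lr * diff
        body[i] -= lr * 0.1 * diff


def train_with_forgetting(num_steps, reset_every, vocab_size=8, dim=4, seed=0):
    """Train the toy model, discarding the embeddings every `reset_every` steps.

    `body` stands in for all non-embedding layers and is never reset.
    Passing reset_every=0 disables forgetting (ordinary training).
    """
    rng = random.Random(seed)
    embeddings = init_embeddings(vocab_size, dim, rng)
    body = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    reset_steps = []
    for step in range(1, num_steps + 1):
        toy_update(embeddings, body, rng)
        if reset_every and step % reset_every == 0:
            # "Forget": throw away the learned token vectors, keep the body.
            embeddings = init_embeddings(vocab_size, dim, rng)
            reset_steps.append(step)
    return embeddings, body, reset_steps
```

For example, `train_with_forgetting(10, 3)` resets the embeddings at steps 3, 6 and 9. The assumed intuition is that, because the word vectors keep being swapped out, the remaining layers cannot depend on any single set of them, so a new language could later be learned mainly by training fresh embeddings.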

Topics: technology, artificial intelligence, forgetting approach, language models, machine learning, multilingual, neural networks


Original article: WIRED