OpenAI's Ilya Sutskever Develops Tools to Control Superhuman AI

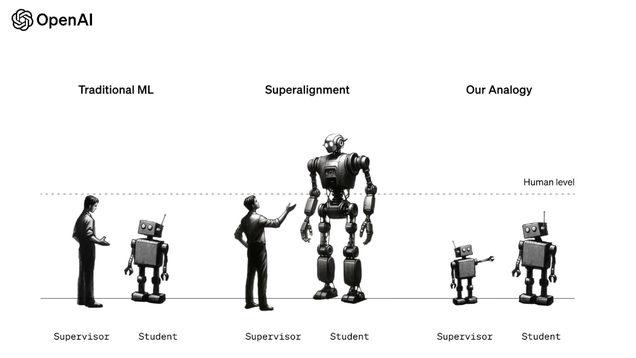

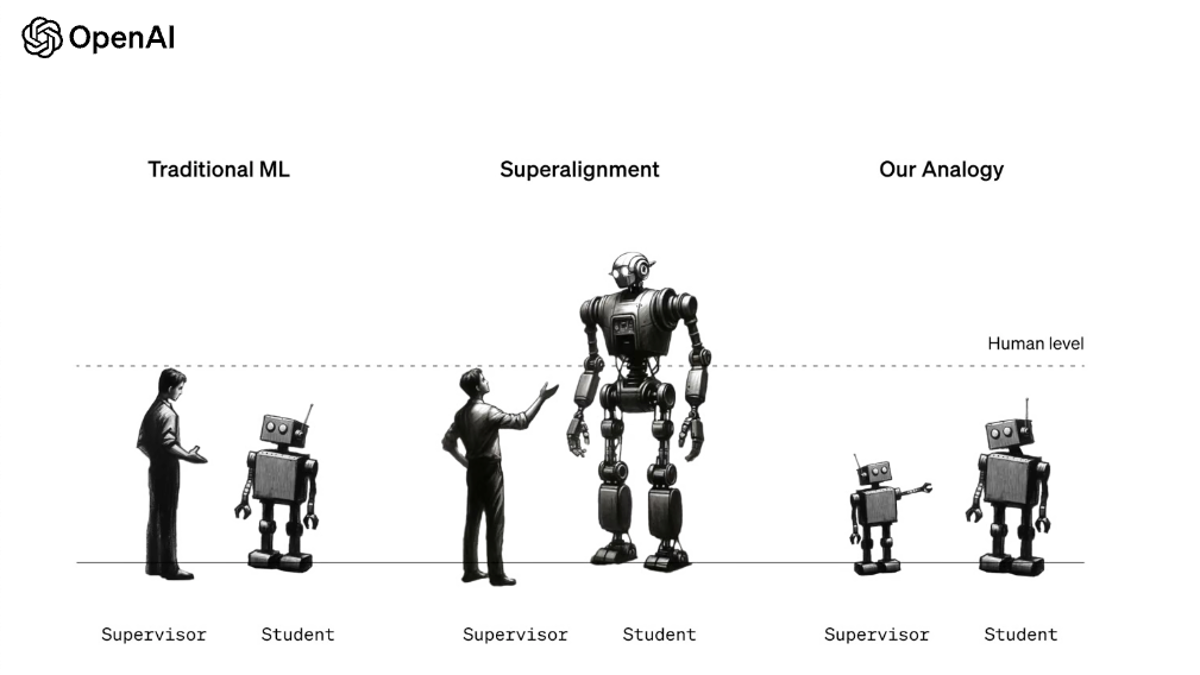

OpenAI's Chief Scientist, Ilya Sutskever, and his team have published a research paper outlining their efforts to develop tools for keeping superhuman AI systems safely aligned with human values. The paper proposes using smaller, weaker AI models to supervise larger, more capable ones, an approach the authors call "weak-to-strong generalization." OpenAI currently relies on human feedback to align its AI models, but as those models become more intelligent than the people overseeing them, human feedback alone may no longer be sufficient. The research aims to address the challenge of controlling superhuman AI and preventing potentially catastrophic harm. Sutskever's role at OpenAI remains unclear, but the paper suggests that his team's work on AI alignment is continuing.