OpenAI's Superalignment Team Develops Tools to Control Superhuman AI

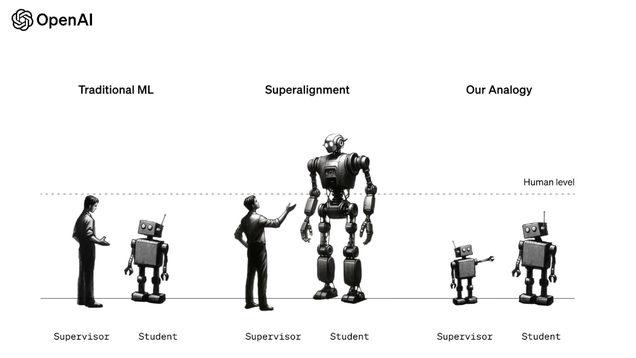

OpenAI's Chief Scientist, Ilya Sutskever, and his team have published a research paper describing their efforts to develop tools for controlling a superhuman AI and keeping it aligned with human values. The paper suggests that superintelligence, meaning AI smarter than humans, could arrive within the next decade. The study explores using smaller, weaker AI models to supervise the training of larger, more capable ones, an approach called "weak-to-strong generalization." The early results are promising: in the team's experiments on language tasks, GPT-4 supervised by a GPT-2-level model typically performed somewhere between GPT-3 and GPT-3.5. OpenAI acknowledges that this framework is not a definitive solution to AI alignment, but it considers the work a significant step toward addressing the risks posed by superhuman AI.

Sutskever's role at OpenAI remains unclear: he has been absent from the company in recent weeks and has hired a lawyer.
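The weak-to-strong idea summarized above can be illustrated with a deliberately simplified toy in Python. This is a minimal sketch under stated assumptions, not OpenAI's code or method: the "weak supervisor" is simulated by flipping true labels at random 25% of the time, the "strong" student is a plain logistic regression, the data are synthetic 2-D points, and every function name is hypothetical. It reports the performance gap recovered (PGR), which, as we understand the paper, is the fraction of the gap between the weak supervisor and a strong model trained on ground truth that the weakly supervised student manages to close.

```python
import math
import random

# Toy, illustrative setup only (an assumption, not the paper's setup):
# 2-D points labeled by a hidden rule, x1 + x2 > 0.
random.seed(0)

def make_data(n):
    points = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(n)]
    labels = [1 if x1 + x2 > 0 else 0 for x1, x2 in points]
    return points, labels

def weak_supervisor(labels, flip_prob=0.25):
    # Hypothetical stand-in for a small model: returns the true label but
    # flips it at random with probability flip_prob (random, not systematic, errors).
    return [1 - y if random.random() < flip_prob else y for y in labels]

def train_logistic(points, labels, lr=0.5, epochs=300):
    # Hypothetical "strong" student: logistic regression on both features,
    # trained with full-batch gradient descent on the cross-entropy loss.
    w1 = w2 = b = 0.0
    n = len(points)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in zip(points, labels):
            p = 1.0 / (1.0 + math.exp(-(w1 * x1 + w2 * x2 + b)))
            err = p - y
            g1 += err * x1
            g2 += err * x2
            gb += err
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return lambda x1, x2: 1 if w1 * x1 + w2 * x2 + b > 0 else 0

def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

train_x, train_y = make_data(1000)
test_x, test_y = make_data(1000)

# Weak supervisor alone, scored against the true test labels.
weak_acc = accuracy(weak_supervisor(test_y), test_y)
# Strong student trained only on the weak supervisor's labels.
student = train_logistic(train_x, weak_supervisor(train_y))
w2s_acc = accuracy([student(*p) for p in test_x], test_y)
# Strong "ceiling": the same student trained on ground-truth labels.
ceiling = train_logistic(train_x, train_y)
ceiling_acc = accuracy([ceiling(*p) for p in test_x], test_y)

# Performance gap recovered: share of the weak-to-ceiling gap the student closes.
pgr = (w2s_acc - weak_acc) / (ceiling_acc - weak_acc)
print(f"weak={weak_acc:.3f} weak-to-strong={w2s_acc:.3f} "
      f"ceiling={ceiling_acc:.3f} PGR={pgr:.2f}")
```

Because the simulated supervisor's mistakes here are random, the student can largely average them away, so this toy tends to show a high PGR. A real small model makes systematic errors that a strong student can simply imitate, which is the harder case the research targets and which this sketch does not capture.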

- Ilya Sutskever's Team at OpenAI Built Tools to Control a Superhuman AI (Yahoo! Voices)

- OpenAI Demos a Control Method for Superintelligent AI (IEEE Spectrum)

- OpenAI's Ilya Sutskever Has a Plan for Keeping Super-Intelligent AI in Check (WIRED)

- Now we know what OpenAI's superalignment team has been up to (MIT Technology Review)

- OpenAI thinks superhuman AI is coming, and wants to build tools to control it (TechCrunch)
