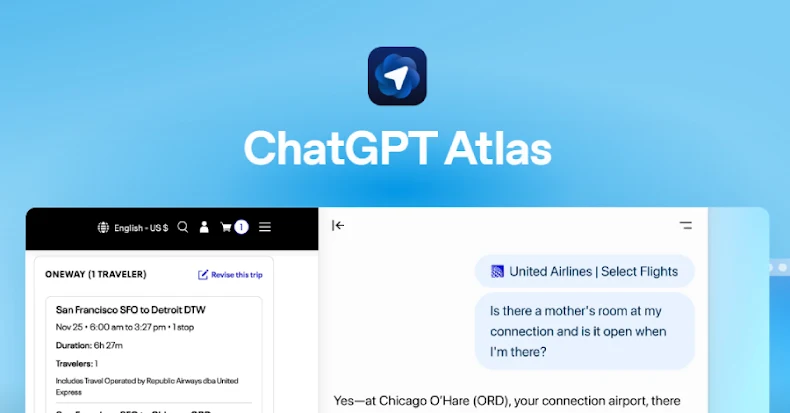

OpenClaw Taps VirusTotal to Vet ClawHub Skills

OpenClaw will scan every skill uploaded to ClawHub with VirusTotal (and Code Insight) via a SHA-256 hash check. Skills with benign results are auto-approved, suspicious ones carry a warning, and those flagged as malware are blocked, with daily re-scans. The team notes that VirusTotal isn’t a silver bullet and says it will publish a threat model, a security roadmap, and audits, amid broader concerns over the risk OpenClaw poses to enterprise security.
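The triage flow described here can be pictured with a minimal Python sketch. It is illustrative only and not OpenClaw's actual implementation: the names `Action`, `sha256_of_bundle`, and `action_for_verdict` are hypothetical, the verdict strings are assumed labels, and the VirusTotal lookup itself is left out. The sketch covers only the two local steps: hashing a skill bundle and mapping a scan verdict to an outcome.

```python
import hashlib
from enum import Enum


class Action(Enum):
    """Hypothetical outcomes for an uploaded skill."""
    APPROVE = "approve"  # benign result: auto-approve
    WARN = "warn"        # suspicious result: publish with a warning
    BLOCK = "block"      # malware result: block


def sha256_of_bundle(data: bytes) -> str:
    """Return the hex SHA-256 digest used as the lookup key for a bundle."""
    return hashlib.sha256(data).hexdigest()


# Assumed verdict labels; the real scanner's vocabulary may differ.
_VERDICT_TO_ACTION = {
    "benign": Action.APPROVE,
    "suspicious": Action.WARN,
    "malicious": Action.BLOCK,
}


def action_for_verdict(verdict: str) -> Action:
    """Map a scan verdict to an action, rejecting unknown verdicts."""
    try:
        return _VERDICT_TO_ACTION[verdict.strip().lower()]
    except KeyError:
        raise ValueError(f"unknown verdict: {verdict!r}") from None


if __name__ == "__main__":
    digest = sha256_of_bundle(b"example skill bundle")
    print(digest, action_for_verdict("suspicious").value)
```

Raising on an unknown verdict is a design assumption in this sketch: it keeps an unexpected scanner response from being silently treated as approval. A daily re-scan would amount to running the same mapping again on the stored hash.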