The Science of Dance: How Rhythms Influence Our Groove

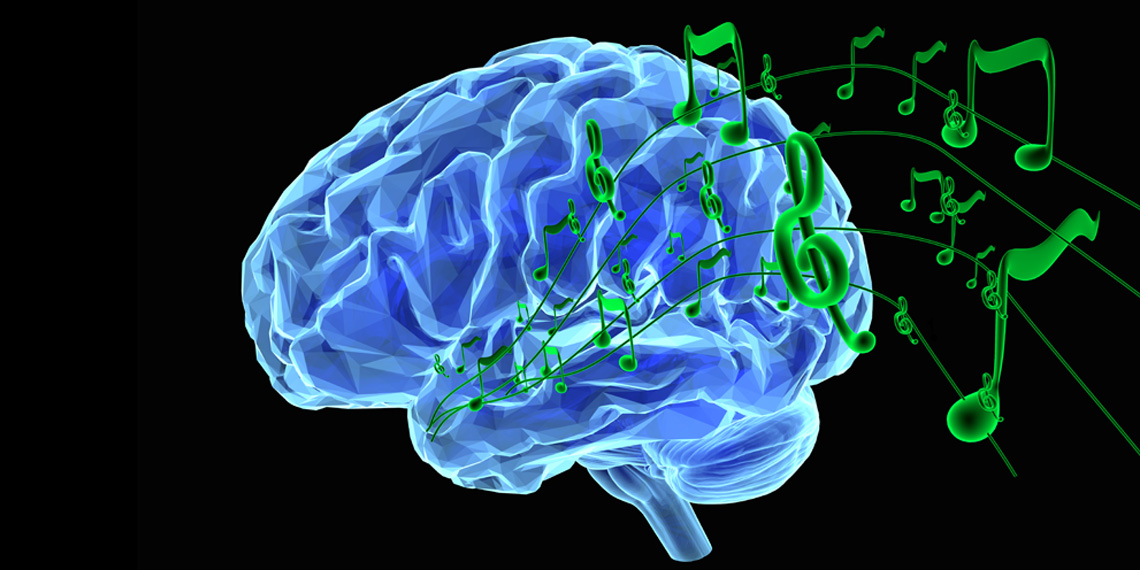

A recent study published in Science Advances explores the neuroscience behind our instinctive urge to move to music. In experiments with 111 participants, the researchers found that rhythms of moderate complexity, which strike a balance between predictability and complexity, evoke the strongest desire to dance. This preference was mirrored in brain activity within the left sensorimotor cortex, a region that plays a pivotal role both in processing music and in preparing the body for movement.

The research also introduces a neurodynamic model to explain how syncopated rhythms are transformed into the subjective experience of groove. This sheds light on the intertwined relationship between motor actions and sensory processes in music perception.