Meta's New Labeling Approach for AI-Generated Content and Deepfakes

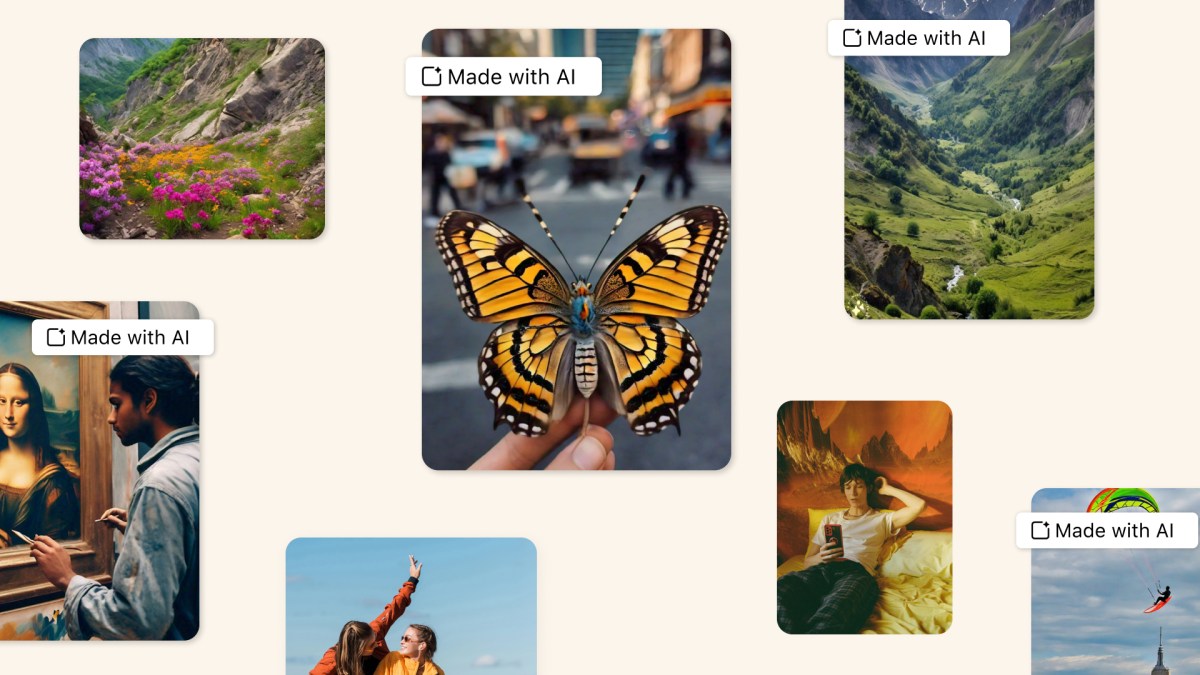

Meta is updating how it handles manipulated media on Facebook, Instagram, and Threads in response to feedback from the Oversight Board. Under the new approach, the company will add labels that give context about AI-generated video, audio, and photos, and will address manipulation that shows a person doing something they did not do. Meta plans to start labeling AI-generated content in May 2024 and, in July, will stop removing content solely on the basis of its manipulated video policy. The decisions are informed by extensive public opinion surveys, consultations with global experts, and feedback from civil society organizations and academics.