NVIDIA Unveils Latest Innovations in AI and Chip Design at GTC 2023

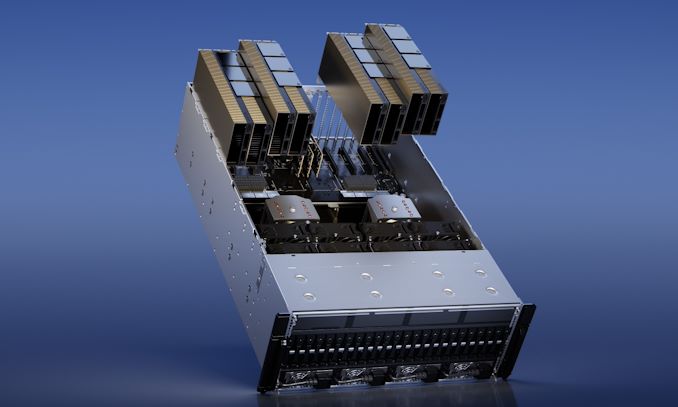

NVIDIA has announced the H100 NVL, a new H100 accelerator variant aimed at large language model (LLM) deployment. The dual-GPU, dual-card H100 NVL offers 188GB of HBM3 memory in total (94GB per card), which is more memory per GPU than any other NVIDIA part to date. Each card is essentially a special bin of the GH100 GPU placed on a PCIe card. The H100 NVL serves a specific niche: it is the fastest PCIe H100 option and the one with the largest GPU memory pool. H100 NVL cards will begin shipping in the second half of 2023.