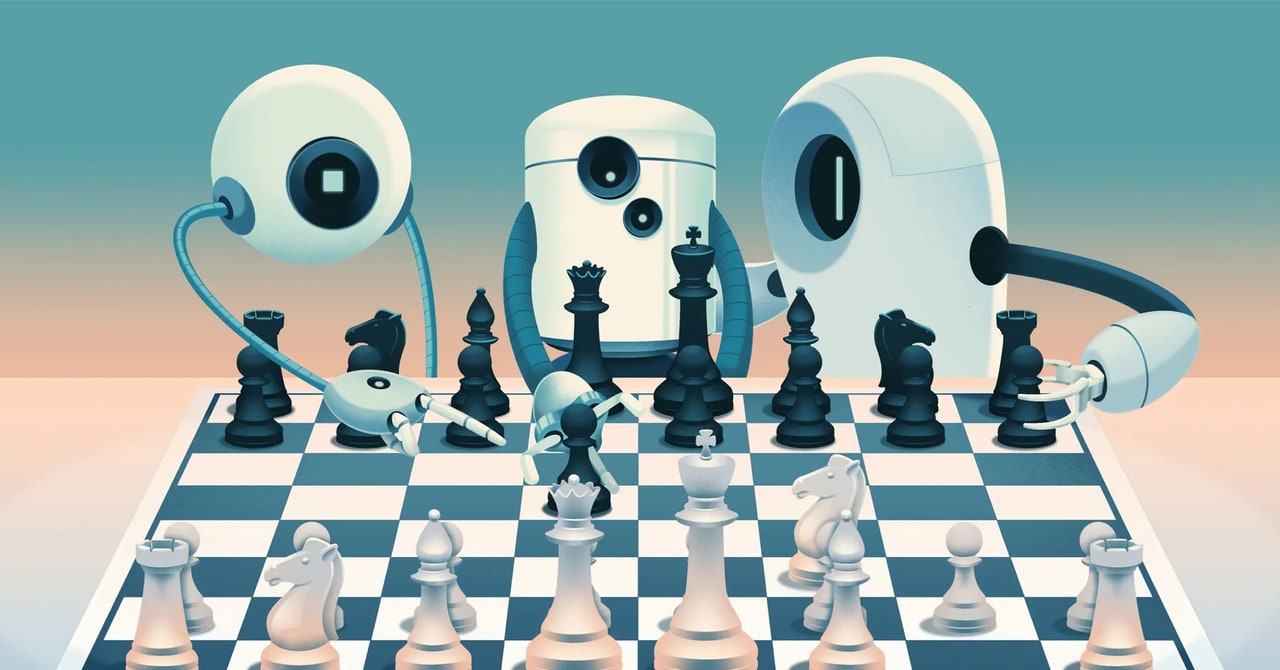

Enhancing AI Power Through Google's Chess Experiments

Google's researchers built a diversified version of AlphaZero: a team of AI players trained independently and exposed to different game situations. The diversified player experimented with new, effective openings and novel strategies, and it defeated the original AlphaZero in most of their matches. The approach combines a "diversity bonus," which rewards the players for behaving differently from one another, with a way of drawing strategies from a large pool of options. By encouraging creative problem-solving and letting the system weigh a larger buffet of options before committing to one, this approach could make AI systems more capable. The implications extend beyond chess, with potential applications in drug development and stock trading strategies. The diversified approach is computationally expensive, but it represents a step toward better performance on hard tasks and mitigates some of the shortcomings of machine learning.
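To make the idea of a "diversity bonus" more concrete, here is a toy sketch in Python. It is not Google's implementation: it assumes, purely for illustration, that each player is summarized by a hypothetical opening-frequency vector, that diversity is measured as the mean L1 distance to the other players, and that a hypothetical weight `beta` scales the bonus added to the game result. The `pick_player` helper is likewise an assumed, simplified stand-in for "pulling strategies from a large selection": the player that rates the position highest gets to choose.

```python
from typing import List, Sequence


def l1_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """L1 distance between two (hypothetical) opening-frequency vectors."""
    return sum(abs(a - b) for a, b in zip(p, q))


def diversity_bonus(index: int, profiles: List[List[float]]) -> float:
    """Mean L1 distance from player `index` to every other player.

    Assumption for illustration: a larger value means this player's
    opening choices differ more from those of its teammates.
    """
    others = [p for j, p in enumerate(profiles) if j != index]
    if not others:
        return 0.0
    return sum(l1_distance(profiles[index], p) for p in others) / len(others)


def shaped_reward(game_result: float, index: int,
                  profiles: List[List[float]], beta: float = 0.1) -> float:
    """Game outcome (+1 win, 0 draw, -1 loss) plus a weighted diversity bonus.

    `beta` is a hypothetical knob trading off winning against being different.
    """
    return game_result + beta * diversity_bonus(index, profiles)


def pick_player(value_estimates: List[float]) -> int:
    """Simplified team play: the player with the highest value estimate moves."""
    return max(range(len(value_estimates)), key=lambda i: value_estimates[i])


if __name__ == "__main__":
    # Three players; the first two favour the same openings, the third does not.
    profiles = [[0.5, 0.5, 0.0], [0.5, 0.5, 0.0], [0.0, 0.0, 1.0]]
    print(diversity_bonus(0, profiles))                # 1.0
    print(diversity_bonus(2, profiles))                # 2.0
    print(pick_player([0.1, 0.7, 0.3]))                # 1
```

In this toy setup the third player, whose openings differ from both teammates, earns the largest bonus. A win by that player is therefore worth slightly more than the same win by a player that copies its peers, which is the kind of pressure toward variety the article describes.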