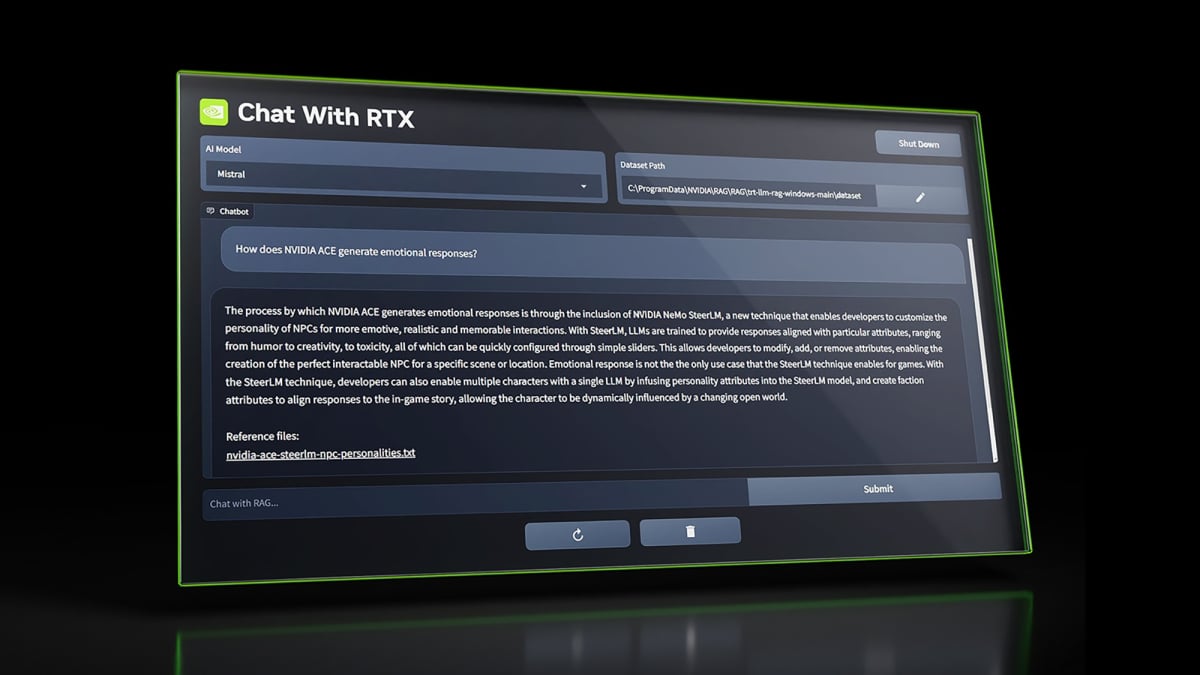

Exploring Nvidia's Chat with RTX: A Guide to a Locally Run AI Chatbot with RAG Integration

Nvidia has launched Chat with RTX, an application that runs a large language model (LLM) locally on RTX 30 and RTX 40 series graphics cards, letting users install a generative AI chatbot on their own computers. Users can tailor the chatbot to their needs: it can draw on documents they supply, as well as on the transcripts of YouTube videos, when generating responses. Although the app is still at an early stage, it offers a glimpse of what running large language models locally, without relying on cloud services, could look like.
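Answering from user-provided documents is an instance of retrieval-augmented generation (RAG): before the model answers, the application looks up passages in the user's files that are relevant to the question and places them in the prompt. The Python sketch below illustrates only that retrieval-and-prompt step. It is a minimal sketch under stated assumptions: it uses a toy bag-of-words cosine similarity in place of the learned embeddings a real system would typically use, the function names are hypothetical, the language-model call itself is omitted, and none of it reflects Nvidia's actual implementation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (hypothetical helper).

    A real RAG pipeline would usually compare learned embeddings instead
    of raw word counts; word counts keep this sketch dependency-free.
    """
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    # Highest similarity first; ties keep their original document order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model's answer in retrieved context.

    The resulting string would then be passed to a local LLM; that call is
    deliberately left out because it depends on the runtime being used.
    """
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    notes = [
        "The quarterly report is due on March 15.",
        "The team offsite will be held in Denver.",
        "Budget approvals require sign-off from finance.",
    ]
    print(build_prompt("When is the quarterly report due?", notes, k=1))
```

Running the example selects only the note about the quarterly report as context, because it shares the most words with the question. Keeping retrieval separate from generation is what lets the model answer about local files without being retrained on them.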