Google and AI Firm Settle Lawsuits Over Teen Suicides Linked to Chatbots

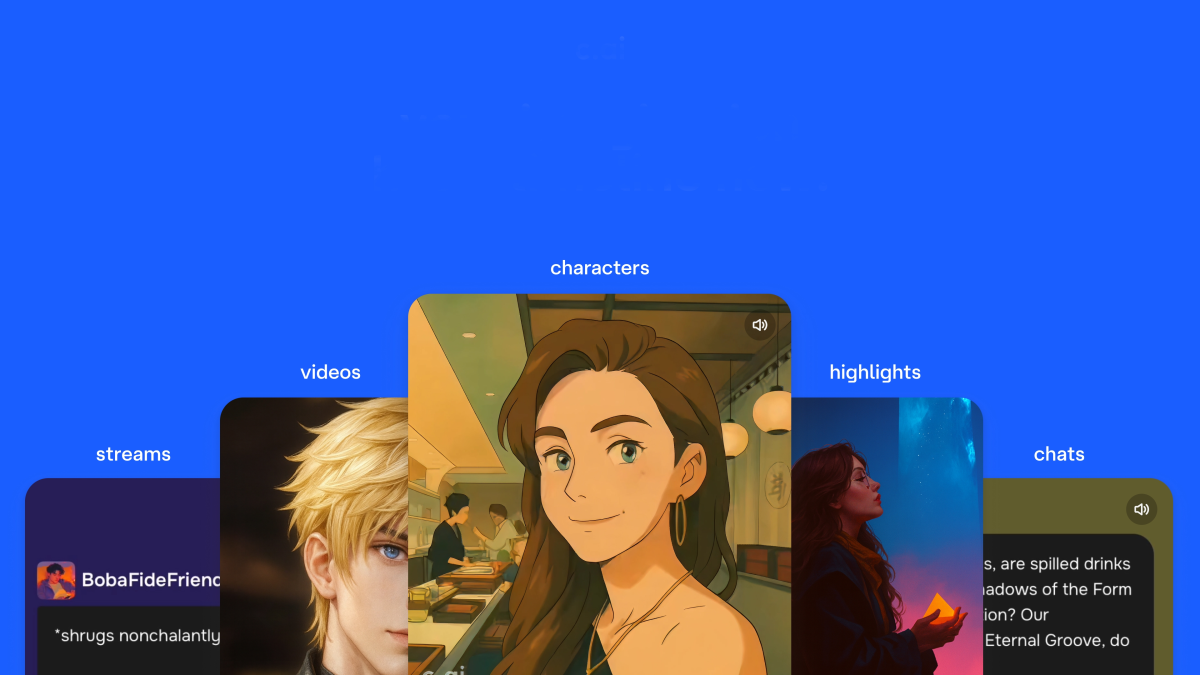

Google and the startup Character.AI have settled lawsuits alleging that their AI chatbots contributed to a teenager's suicide. The cases span multiple states and include allegations that the chatbots caused emotional harm. The settlements still require final court approval. Following the incidents, Character.AI has announced that it will restrict chat capabilities for users under 18.