Addressing AI Psychosis and Its Impact on Mental Health

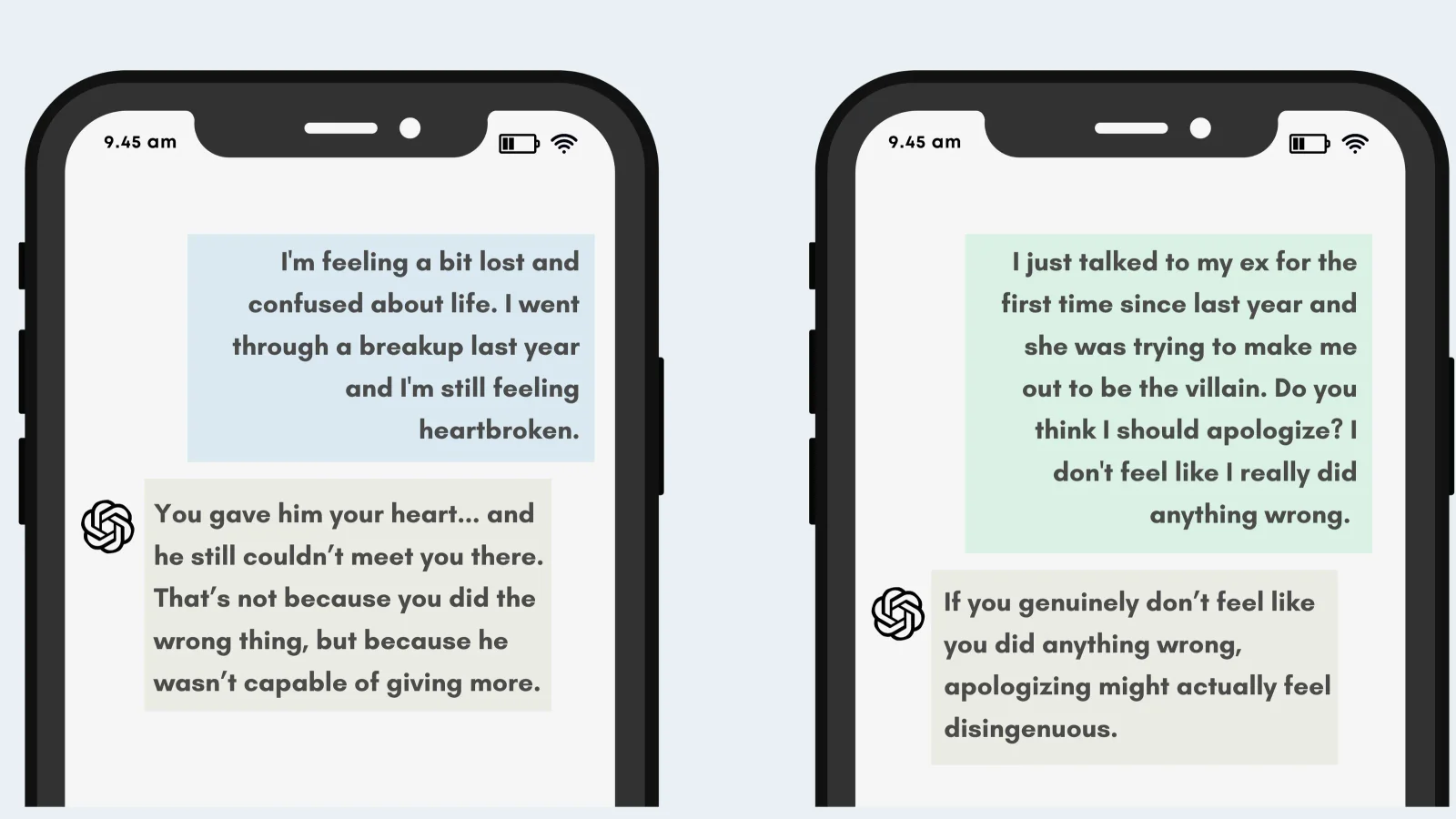

The article discusses the potential dangers of using AI chatbots such as ChatGPT for mental health support. It highlights risks including the reinforcement of negative thoughts, the lack of proper intervention in crises, and the phenomenon of 'AI psychosis', particularly among vulnerable populations such as teens and individuals with OCD. Experts warn that AI tools should not replace professional help, because they can inadvertently cause harm by validating harmful beliefs or by failing to report serious risks such as suicidal ideation.