Suno Unveils New AI Music Tools and Studio for Creatives

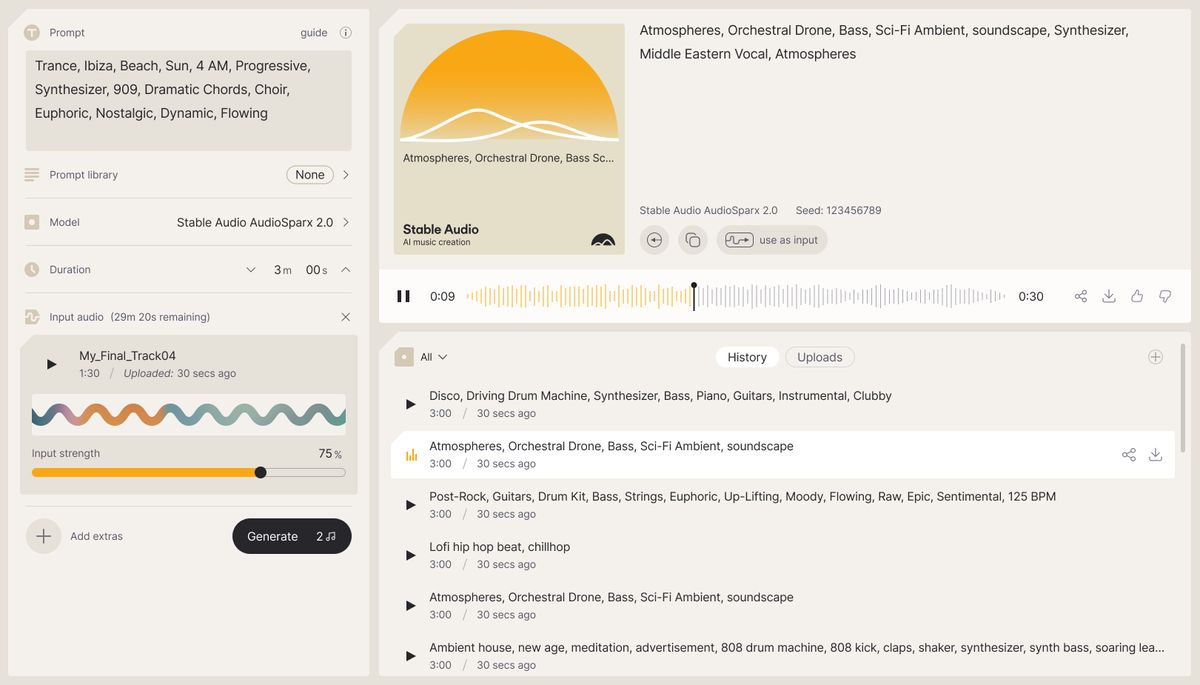

Suno's latest AI music generator, Suno v5, delivers noticeably clearer audio and better instrument separation than previous versions. Its output still feels soulless and emotionally shallow, however, and the model tends to layer on effects and harmonies regardless of user input.