The Dark Side of AI: Model Collapse and Human Exploitation

TL;DR Summary

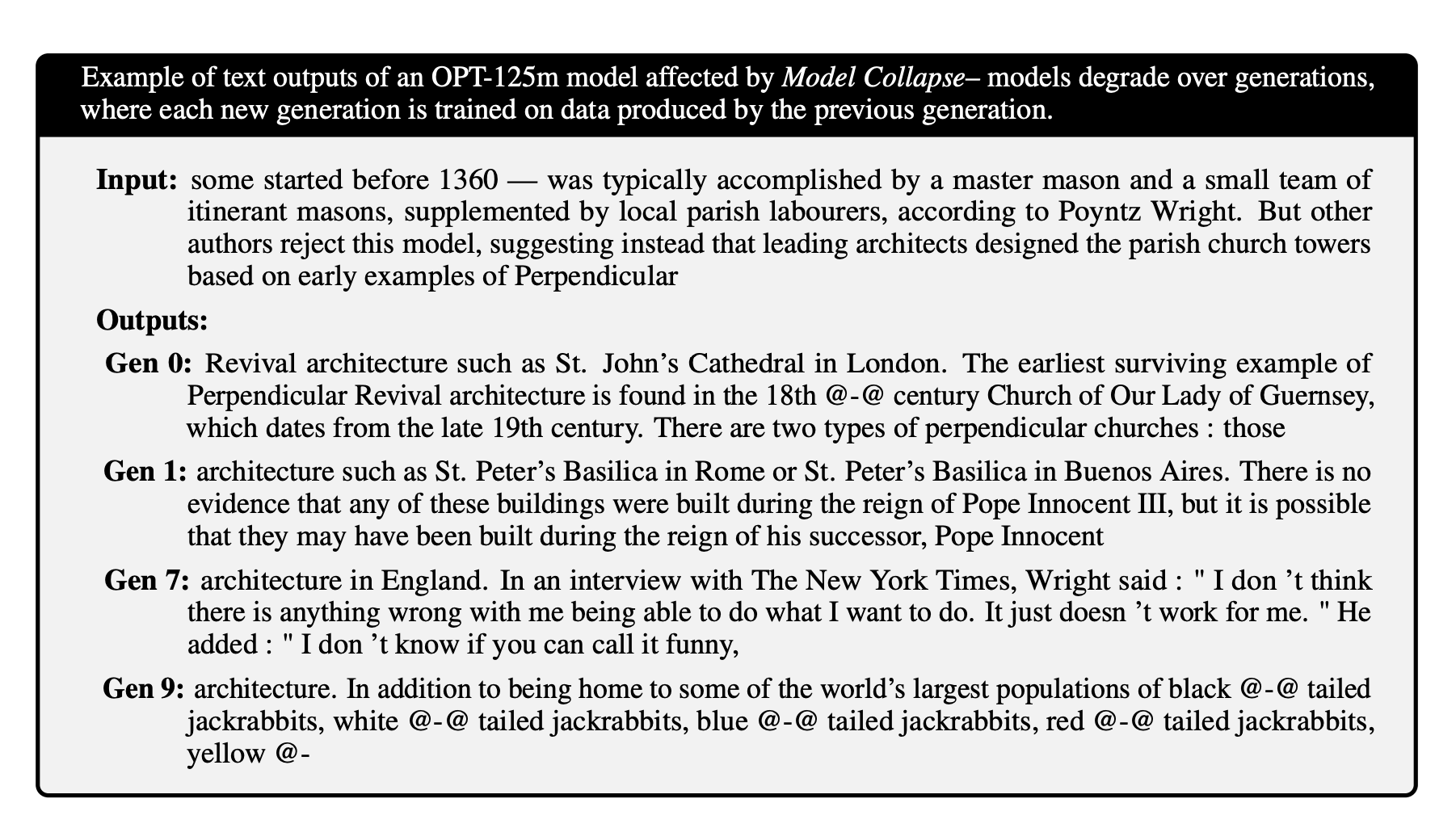

Researchers from Britain and Canada describe a phenomenon they call model collapse: a degenerative learning process in which models gradually forget improbable events over time, even though the underlying data distribution has not changed. They present case studies of this failure in Gaussian Mixture Models (GMMs), Variational Autoencoders (VAEs), and Large Language Models (LLMs). Model collapse can be triggered by training a model on data produced by another generative model, which shifts the training distribution away from the original one. To sustain learning over the long term, the researchers argue, access to the original data source must be preserved, and data not generated by LLMs must remain available over time.
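The mechanism can be illustrated with the simplest possible case: a single one-dimensional Gaussian, repeatedly refit to a finite sample drawn from the previous generation's fit. The sketch below is an illustration under stated assumptions, not the researchers' code; the function name `simulate_collapse`, the sample size of 20, the 1,000 generations, and the `fresh_fraction` knob are all hypothetical choices. Because each generation sees only a small sample, rare values from the tails are under-represented, estimation errors compound, and the fitted standard deviation tends to drift toward zero. Setting `fresh_fraction` above zero mixes draws from the original N(0, 1) distribution into every training set, a toy stand-in for the recommendation to keep access to original, non-generated data.

```python
from __future__ import annotations

import random
import statistics


def simulate_collapse(
    n_samples: int = 20,
    generations: int = 1000,
    fresh_fraction: float = 0.0,
    seed: int = 0,
) -> list[float]:
    """Recursively refit a 1-D Gaussian to samples from the previous fit.

    Hypothetical toy model: generation 0 is the "real" distribution N(0, 1).
    Each later generation is fit by maximum likelihood to n_samples points,
    of which a fraction `fresh_fraction` is drawn from the original N(0, 1)
    and the rest is synthetic data sampled from the previous generation.
    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original (non-generated) data distribution
    n_fresh = round(fresh_fraction * n_samples)
    history = [sigma]
    for _ in range(generations):
        # Synthetic training data produced by the current model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples - n_fresh)]
        # Optional data kept from the original source.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
        # Maximum-likelihood fit: sample mean and population std deviation.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    collapsed = simulate_collapse()
    anchored = simulate_collapse(fresh_fraction=0.5)
    for gen in (0, 10, 100, 1000):
        print(
            f"generation {gen:4d}: "
            f"synthetic-only std = {collapsed[gen]:.3g}, "
            f"half-original std = {anchored[gen]:.3g}"
        )
```

In the synthetic-only run, the spread, and with it the model's memory of tail events, should shrink by many orders of magnitude, while the half-original run keeps a spread of the same order as the original distribution. A single Gaussian is far simpler than the GMM, VAE, and LLM cases in the study, so this only conveys the intuition.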

Topics: technology, AI research, data training, generative models, large language models, model collapse, statistical approximation

- AI Will Eat Itself? This AI Paper Introduces A Phenomenon Called Model Collapse That Refers To A Degenerative Learning Process Where Models Start Forgetting Improbable Events Over Time (MarkTechPost)

- The people paid to train AI are outsourcing their work… to AI (MIT Technology Review)

- Recipe for disaster: Human AI trainers are letting AI do their work (MyBroadband)

- Study says AI data contaminates vital human input (Tech Xplore)

- A.I.’s exploitation of human workers could come back to bite it (Fortune)

