UC Berkeley Researchers Use Large Language Models to Enhance Text-to-Image Synthesis.

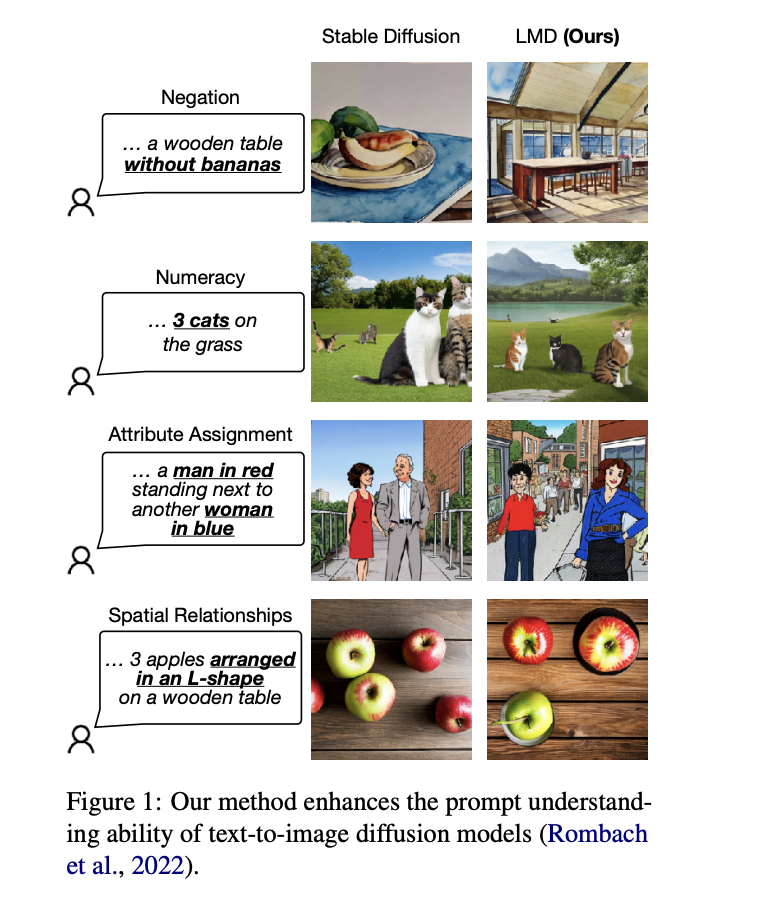

UC Berkeley and UCSF researchers have proposed a novel LLM-grounded Diffusion (LMD) approach that enhances prompt understanding in text-to-image generation. LMD integrates off-the-shelf frozen LLMs into diffusion models, resulting in a two-stage generation process that provides enhanced spatial and common sense reasoning capabilities. LMD offers several advantages beyond improved prompt understanding, including dialog-based multi-round scene specification and handling prompts in unsupported languages. The research team’s work opens new possibilities for improving the accuracy and diversity of synthesized images through the integration of off-the-shelf frozen models.

Reading Insights

0

1

2 min

vs 3 min read

85%

555 → 85 words

Want the full story? Read the original article

Read on MarkTechPost