Meta Unveils Next-Gen AI Chip and Datacenter Technologies

TL;DR Summary

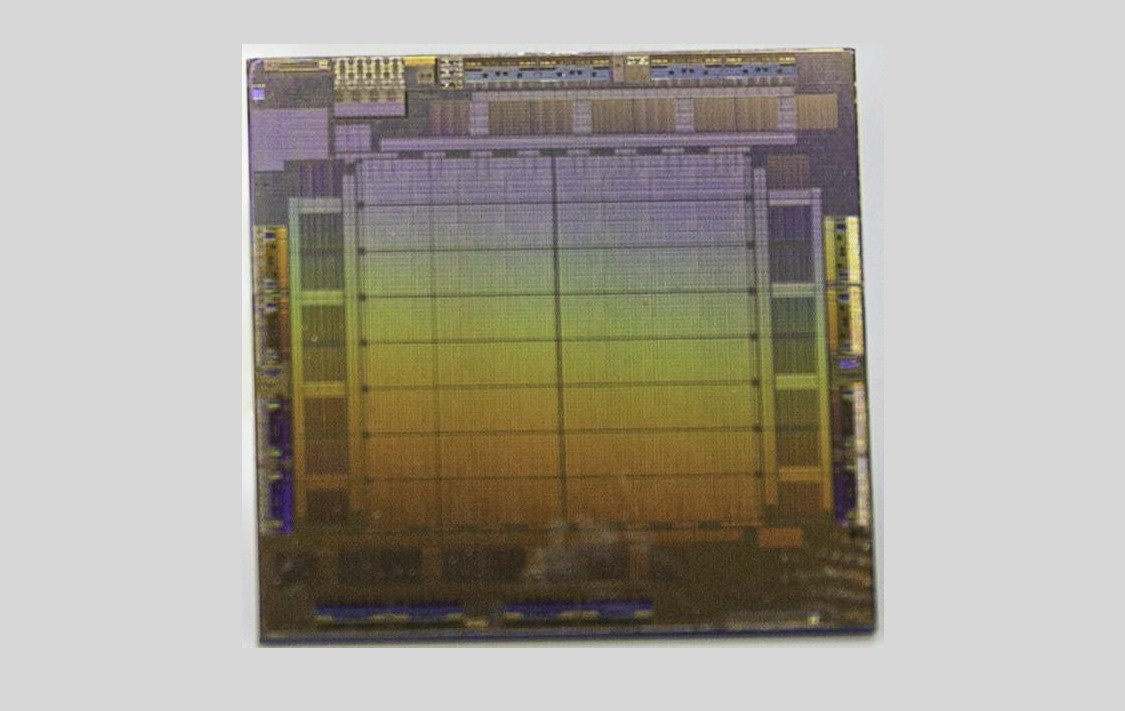

Meta Platforms, formerly known as Facebook, has unveiled its homegrown AI inference and video encoding chips at its AI Infra @ Scale event. The company has built its own hardware to run its software stacks, which it controls from top to bottom, so it is free to design that hardware however it wants. The Meta Training and Inference Accelerator (MTIA), an AI inference engine, is based on a dual-core RISC-V processing element surrounded by additional supporting logic, yet it still fits into a 25 watt chip on a 35 watt dual M.2 peripheral card.

- Meta Platforms Crafts Homegrown AI Inference Chip, AI Training Next (The Next Platform)

- Meta pulls the curtain back on its A.I. chips for the first time (CNBC)

- Meta Made Its AI Tech Open-Source. Rivals Say It’s a Risky Decision. (The New York Times)

- Meta announces AI training and inference chip project (Nasdaq)

- Meta Completes Research SuperCluster, Announces Next-Gen Datacenter (HPCwire)

