Instagram to flag teen self-harm searches to parents

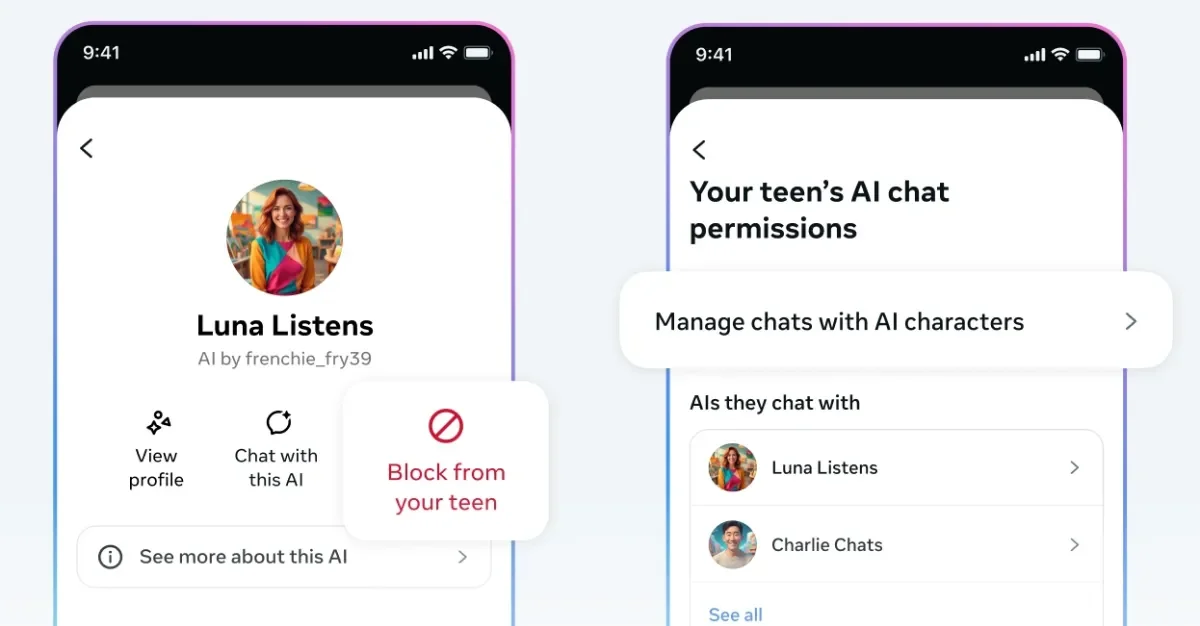

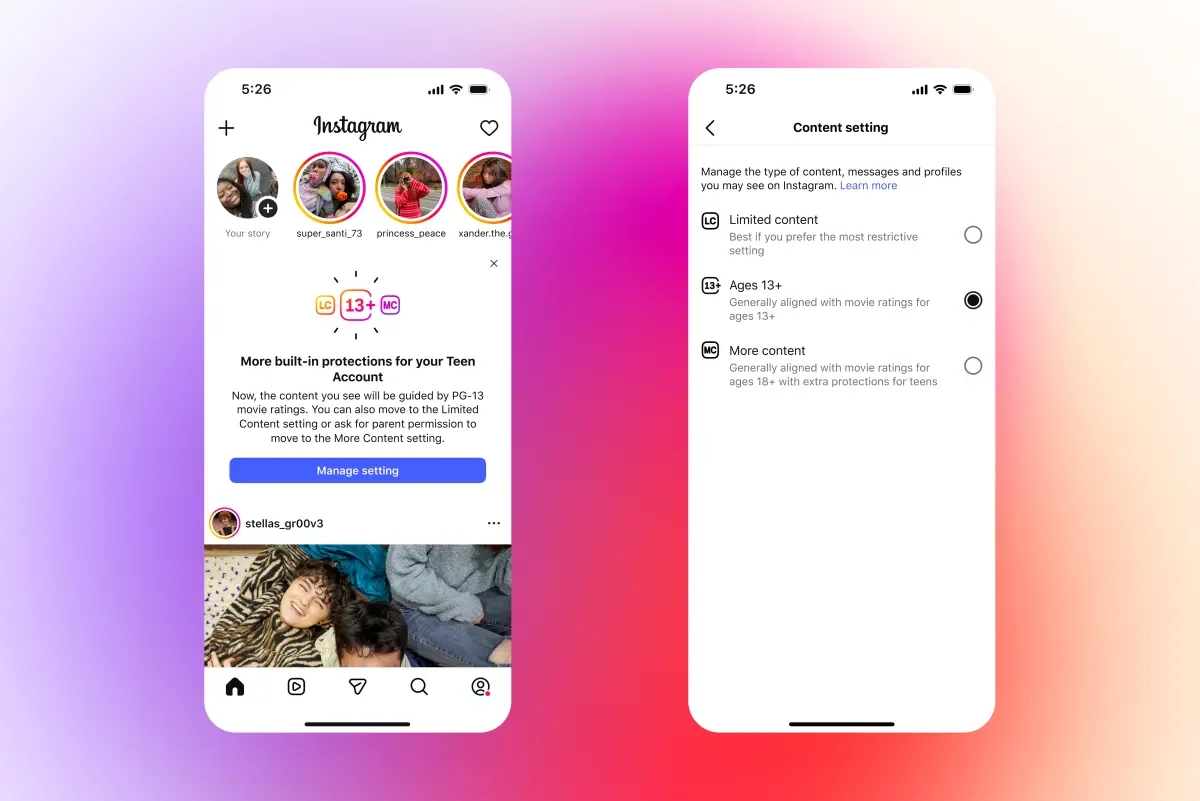

Meta’s Instagram will begin proactively notifying parents when teens using its Teen Accounts search for suicide or self-harm terms. The rollout starts next week in the UK, US, Australia and Canada, with other regions to follow. Alerts may arrive by email, text, WhatsApp or in-app notification and will include resources to help guide difficult conversations. It is the first time Meta has issued proactive parent alerts for teen searches rather than simply blocking content, and the move has drawn mixed reactions: supporters say it aids protection, while critics warn it could alarm families or gloss over underlying platform risks. Meta says the alerts come with expert resources and notes that it already hides self-harm content. The company also says it will extend similar alerts to AI chatbot interactions in the coming months, amid wider scrutiny of youth safety online.