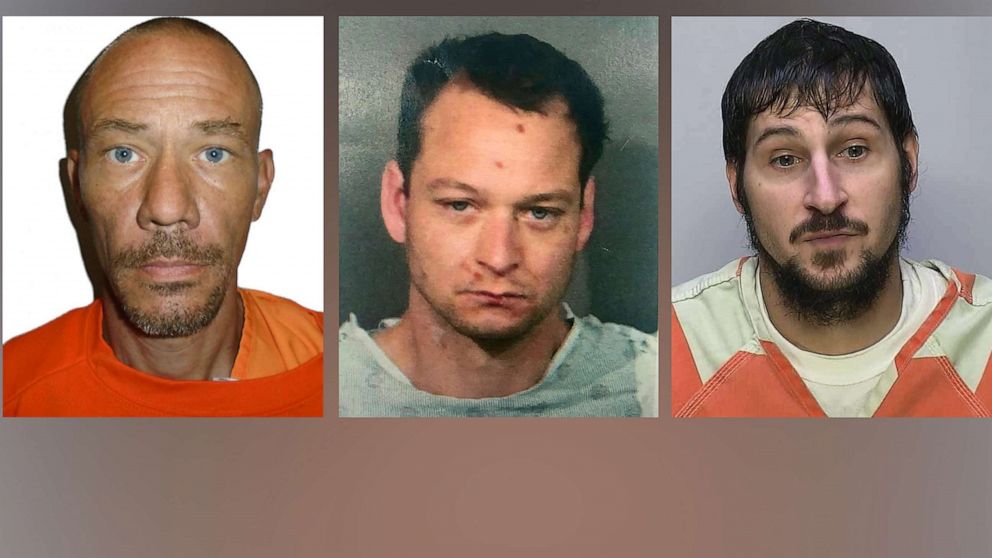

Escaped Murderers Spark Jailers' Scrutiny and Public Fear

The recent escapes of three murder suspects have raised concerns about law enforcement's ability to keep prisoners secure. Two of the escapees have been captured; the third remains at large. Experts suggest that inmates serving long sentences or facing serious charges are the most likely to attempt an escape, often exploiting opportunities and lapses in oversight. Most escapes occur at county jails, where staffing shortages and budget constraints pose challenges. Safety measures and structural changes are being implemented to prevent further escapes. Research shows that most escapees are recaptured within a few days, though some remain at large for longer periods.