"Revolutionary Method Doubles Computer Speed Without Hardware Upgrades"

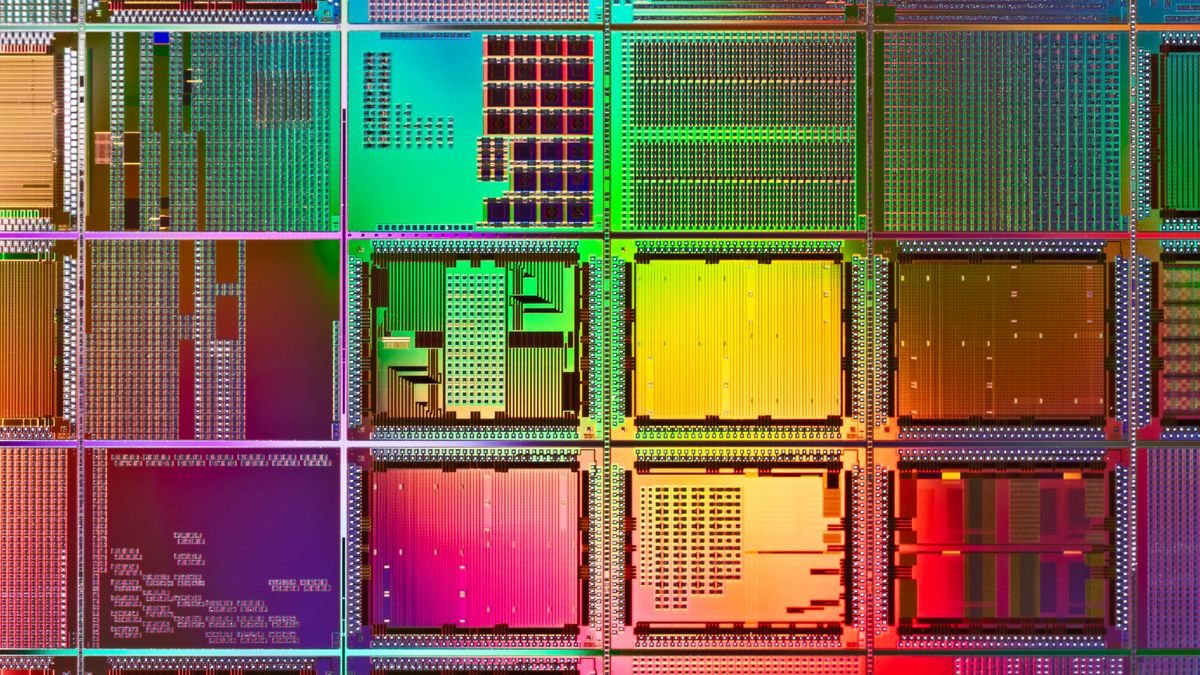

A new computing approach called simultaneous and heterogeneous multithreading (SHMT) could double the processing speed of devices such as phones and laptops without replacing any components. The method lets a device's different processing units work on the same code region at the same time; in tests, this delivered faster performance and 51% lower energy consumption. The approach could also reduce hardware costs, carbon emissions, and water usage in data centers, but further research is needed to determine how it can be implemented in practice and which use cases it suits.
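
To make the idea of several processing units sharing one code region more concrete, here is a minimal Python sketch. It is not the SHMT implementation: the unit names (`cpu`, `gpu`, `accelerator`), the share weights, and the functions `scale_chunk`, `split_by_share`, and `run_on_all_units` are all hypothetical, and ordinary threads stand in for real hardware units. It shows only the structure of the idea: one piece of work is divided, every "unit" runs the same function on its part concurrently, and the results are stitched back together.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_chunk(values, factor):
    """The shared 'code region': every unit runs this same function."""
    return [v * factor for v in values]


def split_by_share(data, shares):
    """Cut data into consecutive chunks sized in proportion to each unit's share."""
    total = sum(shares.values())
    names = list(shares)
    chunks, start = {}, 0
    for i, name in enumerate(names):
        if i == len(names) - 1:
            end = len(data)  # the last unit takes whatever remains
        else:
            end = start + (len(data) * shares[name]) // total
        chunks[name] = data[start:end]
        start = end
    return chunks


def run_on_all_units(data, factor, shares):
    """Run scale_chunk on every chunk concurrently and merge results in order."""
    chunks = split_by_share(data, shares)
    with ThreadPoolExecutor(max_workers=len(chunks)) as pool:
        # Threads are stand-ins for hardware units (an assumption of this sketch).
        futures = {
            name: pool.submit(scale_chunk, chunk, factor)
            for name, chunk in chunks.items()
        }
        merged = []
        for name in chunks:  # dict order matches the original data order
            merged.extend(futures[name].result())
    return merged


if __name__ == "__main__":
    # Hypothetical weights: the "gpu" gets twice the work of the other units.
    shares = {"cpu": 1, "gpu": 2, "accelerator": 1}
    print(run_on_all_units(list(range(8)), 2, shares))
```

Because Python threads do not run CPU-bound code in parallel, this sketch will not show a speedup; it illustrates only how one task can be partitioned across units of differing capacity and recombined.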