"Unveiling the Inner Workings of AI: Insights into LLM Neural Networks"

TL;DR Summary

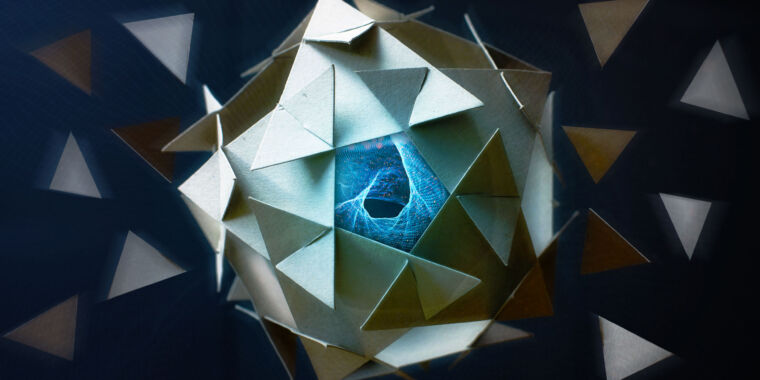

Anthropic's new research offers a look at the inner workings of LLMs, using a method that extracts interpretable features from the model's neural network. By analyzing which neurons activate in response to queries, the research shows how a single concept is represented as a pattern spread across many neurons, and across languages. The result is a rough conceptual map of the LLM's internal states, showing how the model links keywords and concepts and organizes them by semantic relationship. The study also shows how identifying specific features can help trace the chain of inference the model follows to answer complex questions.
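The summary does not spell out the extraction method. Anthropic's published work describes it as dictionary learning with a sparse autoencoder, and the Python below is only a toy sketch of that general idea, not Anthropic's code. Every feature name, weight, and bias is hypothetical, and tying the encoder to the decoder directions is a simplification. The sketch illustrates three points from the paragraph above: a feature is a direction spanning several neurons, a query activates some features while a ReLU keeps others at exactly zero, and cosine similarity between feature directions gives a crude "map" of related concepts.

```python
import math

# Hypothetical toy dictionary: each feature is a direction over 4 "neurons".
# Real dictionaries are learned from huge numbers of activation vectors.
NUM_NEURONS = 4
FEATURE_DIRECTIONS = {
    "bridge": [0.7, 0.7, 0.0, 0.0],
    "city":   [0.6, 0.6, 0.5, 0.0],
    "code":   [0.0, 0.0, 0.0, 1.0],
}
ENCODER_BIAS = -0.2  # hypothetical negative bias that keeps weak features at zero


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def encode(activations):
    """Feature strengths: ReLU(direction . activations + bias).

    Reusing the decoder directions as encoder weights is a simplification
    for this sketch; trained autoencoders learn separate encoder weights.
    """
    return {
        name: max(0.0, dot(direction, activations) + ENCODER_BIAS)
        for name, direction in FEATURE_DIRECTIONS.items()
    }


def decode(features):
    """Approximate the neuron activations as a weighted sum of feature directions."""
    out = [0.0] * NUM_NEURONS
    for name, strength in features.items():
        for i, weight in enumerate(FEATURE_DIRECTIONS[name]):
            out[i] += strength * weight
    return out


def nearest_features(name):
    """Rank the other features by cosine similarity of their directions."""
    d = FEATURE_DIRECTIONS[name]
    scores = [
        (other, dot(d, v) / (norm(d) * norm(v)))
        for other, v in FEATURE_DIRECTIONS.items()
        if other != name
    ]
    return sorted(scores, key=lambda s: s[1], reverse=True)


if __name__ == "__main__":
    x = [1.0, 0.9, 0.1, 0.0]  # hypothetical activations for a bridge-related query
    feats = encode(x)
    print(feats)              # "code" stays at 0.0; "bridge" and "city" fire
    print(decode(feats))
    print(nearest_features("bridge"))
```

In this toy setup, "city" ends up as the nearest neighbour of "bridge" because their directions share neurons, while "code" is orthogonal to both. The real research works with far more neurons and features, so this is meant only as an intuition pump.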

- "Here's what's really going on inside an LLM's neural network", Ars Technica

- "A.I.'s Black Boxes Just Got a Little Less Mysterious", The New York Times

- "AI Is a Black Box. Anthropic Figured Out a Way to Look Inside", WIRED

- "New Anthropic Research Sheds Light on AI's 'Black Box'", Gizmodo

- "How does ChatGPT 'think'? Psychology and neuroscience crack open AI large language models", Nature.com

Full story: read the original article on Ars Technica.