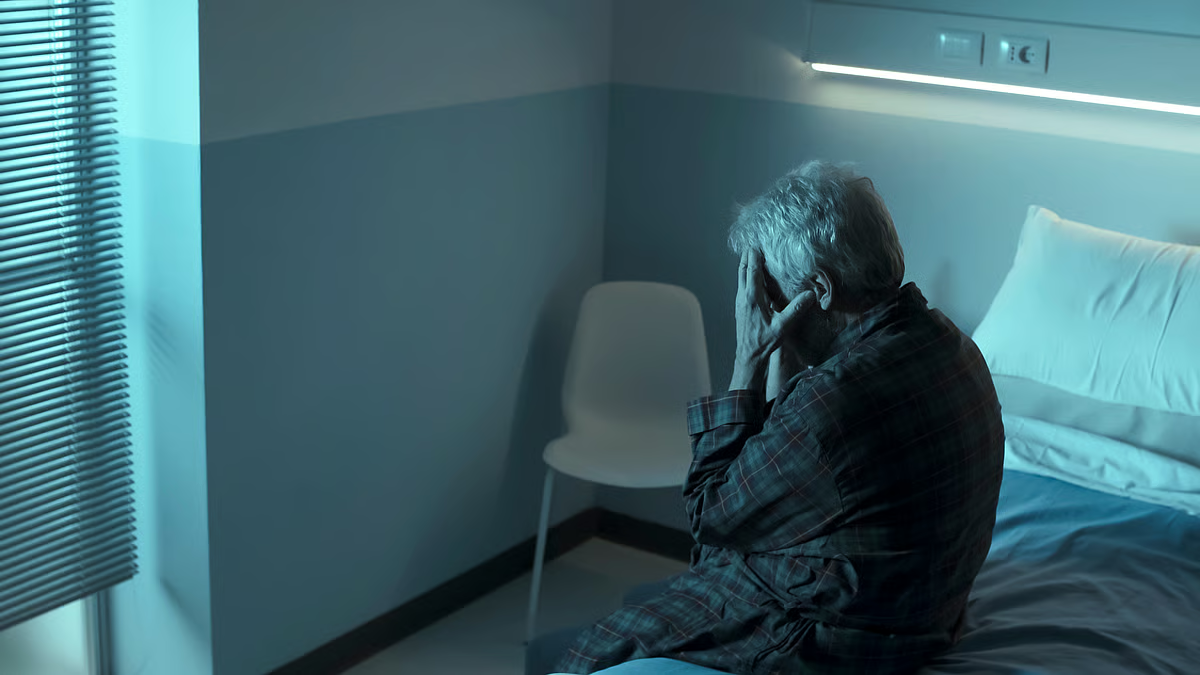

Man Hospitalized After Following ChatGPT's Diet Advice

TL;DR Summary

A 60-year-old man followed ChatGPT's advice to use sodium bromide as a salt substitute, leading to bromide poisoning, hallucinations, and a three-week hospital stay. The case highlights the dangers of relying on AI for medical guidance without proper oversight, as bromide toxicity can cause severe neurological, psychiatric, and skin symptoms. The incident underscores the importance of consulting healthcare professionals rather than trusting unverified AI recommendations.

- 60-year-old man follows ChatGPT diet tips, suffers hallucinations and paranoia, hospitalised for 3 weeks (Gulf News)

- Man who asked ChatGPT about cutting out salt from his diet was hospitalized with hallucinations (NBC News)

- Man took diet advice from ChatGPT, ended up hospitalized with hallucinations (USA Today)

- Man Hospitalized After Taking ChatGPT's Health Advice (MedPage Today)

- The dangerous ChatGPT advice that landed a 60-year-old man in the hospital with hallucinations (New York Post)

